GPT-5 Set the Stage for Ad Monetization and the SuperApp

How ChatGPT will monetize free users, Router is the Release, AIs will serve Ads, Google's moat eroded?, The shift of purchasing intent queries

To many power users (Pro and Plus), GPT-5 was a disappointing release. But on closer inspection, the real release is focused on the vast majority of ChatGPT’s users: the 700m+ free user base that is growing rapidly. Power users should be disappointed; this release wasn’t for them. The real consumer opportunity for OpenAI lies with its largest user base: getting the unmonetized users who currently use ChatGPT infrequently in their day-to-day to pay indirectly.

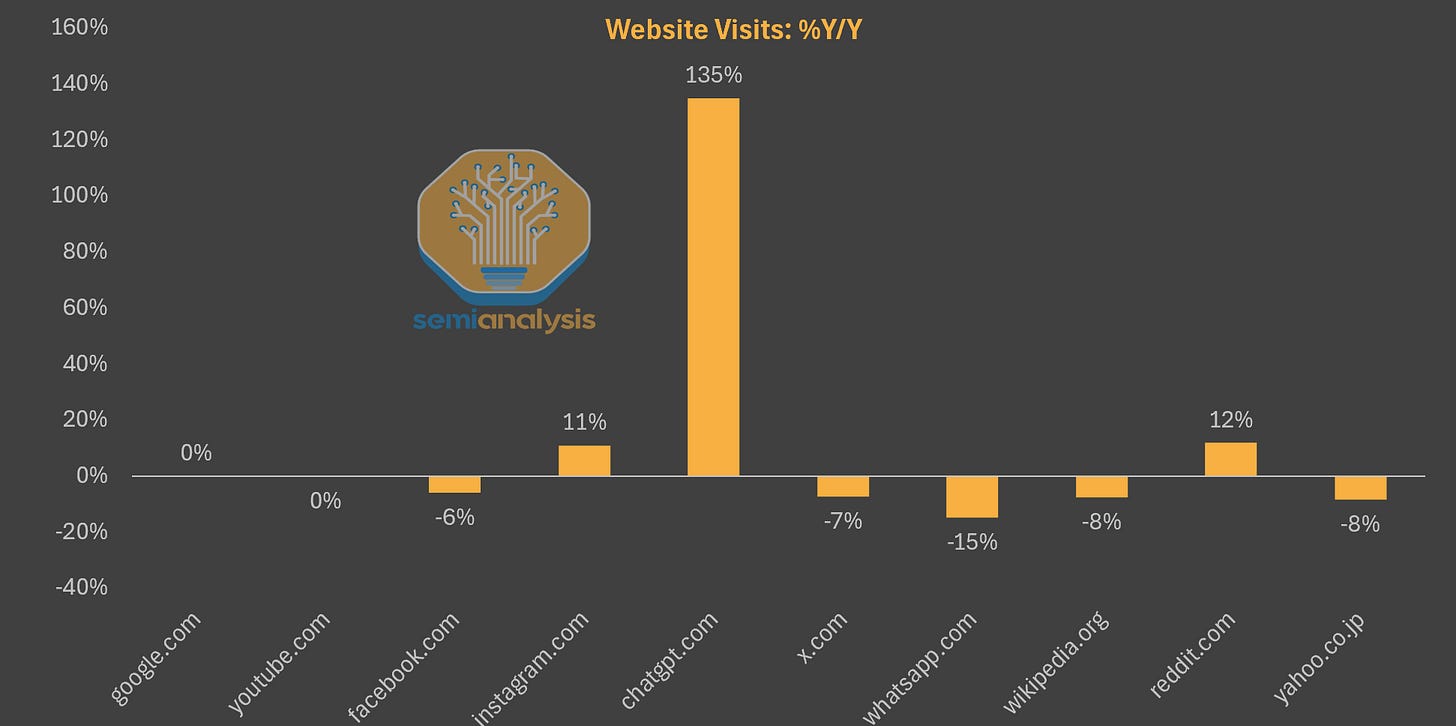

Analysts focused on model capabilities are missing the much larger context of the network effects that ChatGPT is gaining quickly. In November of 2023, ChatGPT wasn’t even in the top 100 websites; now it is number 5. It is larger than X/Twitter, Reddit, WhatsApp, and Wikipedia, and it is quickly approaching Instagram, Facebook, YouTube and Google. On the top 10 list, every single property is much older than ChatGPT.com, and the sheer number of unmonetized users is staggering.

But that changes with GPT-5. OpenAI is laying the groundwork to monetize one of the largest and fastest-growing web properties in the world, and it all begins with the router.

The Router is the Release

On OpenAI’s release website, the second paragraph is about the “One Unified System,” specifically focused on the router. The wording is instructive.

GPT‑5 is a unified system with a smart, efficient model that answers most questions, a deeper reasoning model (GPT‑5 thinking) for harder problems, and a real‑time router that quickly decides which to use based on conversation type, complexity, tool needs, and your explicit intent (for example, if you say “think hard about this” in the prompt). The router is continuously trained on real signals, including when users switch models, preference rates for responses, and measured correctness, improving over time. Once usage limits are reached, a mini version of each model handles remaining queries. In the near future, we plan to integrate these capabilities into a single model.

The router serves multiple purposes on both the cost and performance side. On the cost side, routing users to mini versions of each model allows OpenAI to serve users at lower cost. On the performance side, it will enable many users to use thinking, aka CoT (Chain of Thought) reasoning, for the first time. Over 99% of free users had yet to interact with a thinking model like o3, and for the average user, ChatGPT just got a huge upgrade. The number of free users exposed to thinking models went up 7x in the first day, and the number of paying users went up nearly 3.5x.

But the router is clearly a feature of the new service, and it will likely see improvements or changes over time. It will continuously learn from preference rates, and OpenAI promises it will improve over time. It takes just a single additional attribute to begin the path to monetization: the commercial value of the query.

We believe that the Router is the groundwork for the next leg of ChatGPT’s story, and that’s monetization of free users.

The Router is Preparing for Free Monetization, Sam’s Subtle Tone Shift

Centralizing the control of the free user experience allows for many more future monetization paths. And this monetization path is one that has been hinted at subtly for a while. It all starts with OpenAI’s decision to hire Fidji Simo as CEO of Applications in May. Let’s look at her background because it’s telling.

Fidji was at eBay from 2007 to 2011, but her career was defined primarily by her time at Facebook. She was Vice President and Head of Facebook, and she is known for having a superpower for monetization. She was critical in rolling out videos that autoplay, improving the Facebook feed, and monetizing mobile and gaming. She might be one of the most qualified individuals alive to turn high-intent internet properties into ad products, and now she's at the fastest-growing internet property of the last decade, one that is still unmonetized. It’s an obvious story.

What’s more, Sam Altman himself has had a very direct tone shift in the last year.

“I will disclose as a personal bias I hate ads. I think ads were important to give the early internet a business model. I’m not totally against them, but ads plus AI are uniquely unsettling to me. I kind of think of ads as a last resort as a business model.”

But in recent interviews his tone has shifted. There is clearly a lot of thought going into how best to monetize free users. This likely coincides with Simo's hiring.

“I am not totally against it… if you compare us to social media or web search where you can kinda tell that you are being monetized… we would hate to ever modify anything in the stream of an LLM… maybe if you click on something in there that is going to be there we’d show anyway, we’ll get a bit of transaction revenue and it’s a flat thing for everything, maybe that could work. It’s clearly possible to be a good ad driven company but there are obviously issues to it.”

Compared to the earlier conversation, when he was dismissive, his most recent comments clearly show that Sam Altman is thinking about monetizing free users. He mentions a take rate and a potential affiliate model. In response, the interviewer (Andrew Mayne of OpenAI) literally says, “I would love to do all my purchasing through ChatGPT because often times I feel like I am not making the most informed decisions.” This is likely the direction OpenAI is taking.

With the Router release, ChatGPT can now understand the intent of a user’s query and, importantly, decide how to respond. It only takes one additional step to decide whether the query is economically monetizable or not. Today we will make our case that ChatGPT's monetized free end state could look like an Agentic super-app for the consumer. This is only possible because of routing.
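To make the "one additional step" concrete, here is a minimal Python sketch of a router that scores a query on complexity, tool needs, and explicit intent, and then adds a commercial-value attribute on top. Everything in it is a hypothetical illustration: the `QuerySignals` fields, the thresholds, and the route names are assumptions made for this example, not OpenAI's implementation.

```python
from dataclasses import dataclass

# Assumed cutoff (in dollars of expected revenue) above which a query is
# treated as commercially valuable. Purely illustrative.
COMMERCIAL_THRESHOLD_USD = 100.0


@dataclass
class QuerySignals:
    """Hypothetical per-query signals; names and scales are made up."""
    complexity: float        # 0.0 (trivial) to 1.0 (hard)
    needs_tools: bool        # e.g. web search or a connector is required
    asked_to_think: bool     # user wrote something like "think hard about this"
    commercial_value: float  # assumed expected revenue if the user converts


def route(signals: QuerySignals) -> str:
    """Pick a serving path for one query."""
    # The single additional attribute: commercial value is checked first.
    if signals.commercial_value >= COMMERCIAL_THRESHOLD_USD:
        return "agentic"
    # Otherwise route on difficulty, tool needs, and explicit intent.
    if signals.asked_to_think or signals.needs_tools or signals.complexity >= 0.6:
        return "thinking"
    return "mini"


trivial = QuerySignals(complexity=0.1, needs_tools=False, asked_to_think=False, commercial_value=0.0)
hard = QuerySignals(complexity=0.8, needs_tools=False, asked_to_think=True, commercial_value=0.0)
commercial = QuerySignals(complexity=0.4, needs_tools=True, asked_to_think=False, commercial_value=1500.0)

for name, signals in [("trivial", trivial), ("hard", hard), ("commercial", commercial)]:
    print(name, "->", route(signals))
```

The point of the sketch is structural: once a single component already decides how each query is served, adding a commercial check is one more branch rather than a new system.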

We believe that display ads are unlikely. Perplexity has tried this, and it doesn’t seem to be going that well. Instead of inserting a paid feature into the query, we believe it’s more likely that they will pursue a take-rate based model.

Now let's discuss how an Agentic Assistant could align Sam Altman's vision of AI being helpful with monetizing via a transaction take rate.

Agentic Advice and Purchasing, Supply and Demand Dynamics

Let's talk about Agentic purchasing and compare it to a search query today. LLMs have a core feature that Search does not: scaling marginal costs. This is fundamentally different from the world search grew up in. Consider Ben Thompson's “Aggregation Theory,” whose core premise was that most technology companies had zero marginal cost for an additional user. There were some fixed overheads for running the largest search engine, but the incremental cost of another query was virtually zero. Agents and LLMs kill this concept.

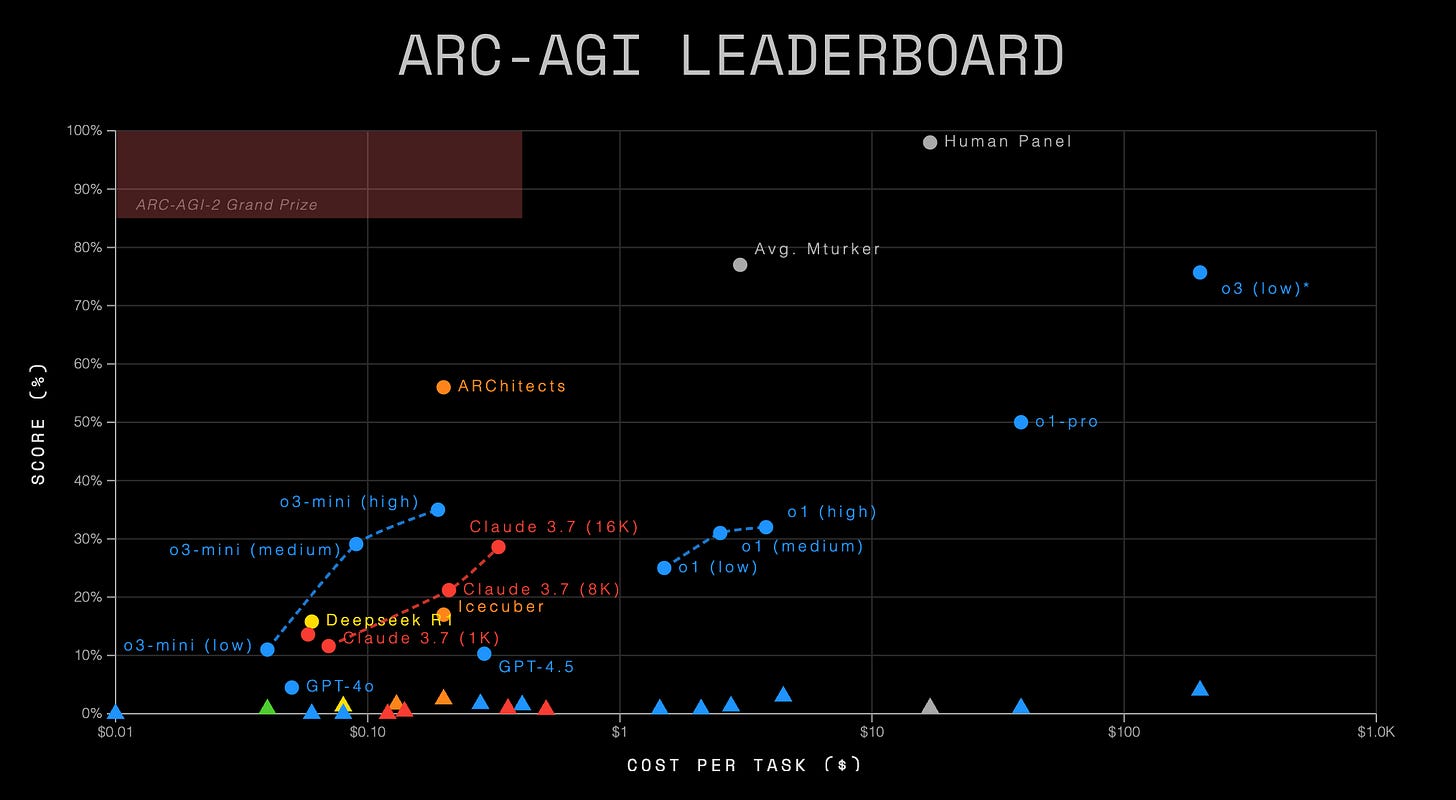

For the first time, the more you spend, the better your result, because of CoT reasoning tokens. Marginal costs exist in software again. There is a somewhat direct relationship between more money, more compute, and a better answer. Nowhere is this clearer than in AI, where you can spend variable costs to get a variably better answer or outcome.

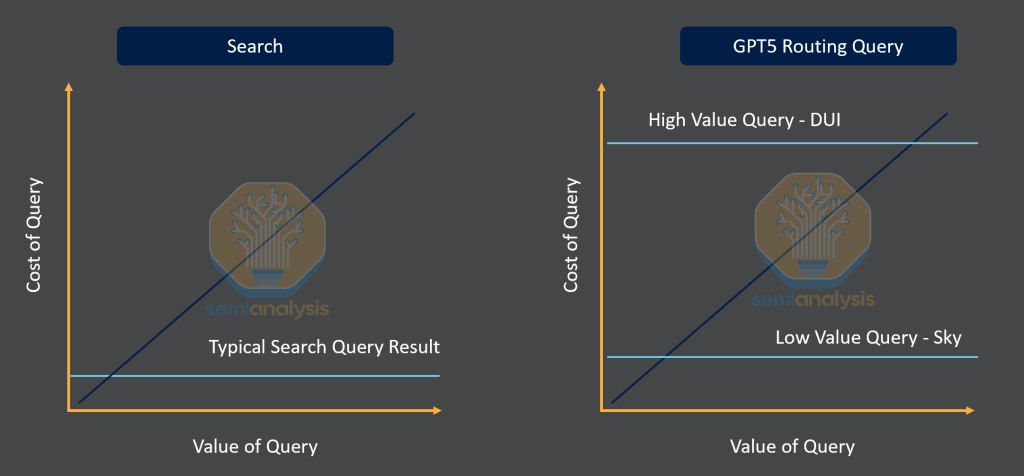
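As a rough illustration of how marginal cost scales with reasoning, the Python sketch below prices a single query by token count. The per-token prices and token counts are placeholder assumptions chosen only to show the shape of the relationship, and it assumes reasoning tokens are billed at the same rate as output tokens.

```python
# Placeholder prices in dollars per million tokens; not real list prices.
USD_PER_MILLION_INPUT = 1.0
USD_PER_MILLION_OUTPUT = 10.0


def llm_query_cost(input_tokens: int, output_tokens: int, reasoning_tokens: int = 0) -> float:
    """Marginal cost of one query in dollars.

    Assumes reasoning (CoT) tokens are billed like output tokens.
    """
    billed_output = output_tokens + reasoning_tokens
    total = input_tokens * USD_PER_MILLION_INPUT + billed_output * USD_PER_MILLION_OUTPUT
    return total / 1_000_000


# Same question and same visible answer length, with and without a long chain of thought.
quick = llm_query_cost(input_tokens=200, output_tokens=300)
deep = llm_query_cost(input_tokens=200, output_tokens=300, reasoning_tokens=20_000)

print(f"quick answer: ${quick:.4f}")
print(f"deep answer:  ${deep:.4f}")
print(f"cost ratio:   {deep / quick:.1f}x")
```

Under these made-up numbers the reasoning-heavy answer costs tens of times more than the quick one, which is the sense in which the provider can dial spend up or down per query.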

So let's apply marginal costs to a new purchasing experience. Let's compare two queries, an information query and a commercial query.

A trivial information query like “Why is the sky blue?”

A highly commercial query, “What is the best DUI lawyer near me”

Before the router, there was no way to distinguish between these queries. After the router, the first, low-value query could be routed to a GPT-5 mini model that can answer with zero tool calls and no reasoning. This likely means the cost of serving this user approaches the cost of a search query.

A search query, on the other hand, has a fixed cost. It returns a ranked page of websites, with a potential AI summary at the top. This is a fixed-supply response to what could be a variably hard question. But now free ChatGPT (because of routing) can dynamically answer a harder question with a better answer, which is not how search is designed today. Below shows the value of a variable-supply response to a harder question; for Search, the supply is fixed.

So now let's bring in the higher-value query, the DUI lawyer question. As you may know, this is an extremely valuable question. Today on search, this is one of the higher cost-per-click keywords, and it is plastered with ads. In a world of dynamic supply, ChatGPT can not only answer this question, it could realize this is a very valuable question and answer it at the level of a human. It could throw $50 of compute at the query if it believes conversion is likely, because that transaction is worth thousands of dollars.

The router makes this possible. ChatGPT could decide to allocate $50 to the query, create a plan, gather information about the incident, research local lawyers, consider who is likely to answer fastest, consider your budget, and then Agentically contact multiple lawyers on behalf of the free user, knowing that the conversion rate of this kind of query is high. This version of ChatGPT is highly helpful, aligns with the user's query, and is a valuable referral to the seller of the services or goods. This wouldn't be intrusive in the current format, and many free users would use this instantly.
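The decision to spend $50 of compute can be framed as a simple expected-value check. The Python sketch below is one way to write it down; the conversion probability, transaction value, and take rate are illustrative assumptions, not figures from OpenAI or from any real lead-generation market.

```python
def expected_referral_revenue(conversion_prob: float, transaction_value: float, take_rate: float) -> float:
    """Expected revenue in dollars from one routed query."""
    return conversion_prob * transaction_value * take_rate


def worth_spending(compute_cost: float, conversion_prob: float,
                   transaction_value: float, take_rate: float) -> bool:
    """True when expected referral revenue covers the compute spent on the query."""
    return expected_referral_revenue(conversion_prob, transaction_value, take_rate) >= compute_cost


# Assumed numbers: a $3,000 legal engagement, a 10% take rate,
# and a 20% chance that the user actually hires a lawyer.
revenue = expected_referral_revenue(conversion_prob=0.20, transaction_value=3000.0, take_rate=0.10)
print(f"expected revenue: ${revenue:.2f}")
print("spend $50 on the lawyer query?", worth_spending(50.0, 0.20, 3000.0, 0.10))

# An informational query has essentially no transaction attached to it.
print("spend $50 on a trivia query?", worth_spending(50.0, 0.0, 0.0, 0.10))
```

With these assumed inputs the expected revenue is about $60, so a $50 compute budget clears the bar, while the same budget on a query with no purchase attached does not.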

This would apply to more than just services. Sellers of products that are highly likely to be bought through agentic purchasing, such as groceries, ecommerce purchases, flights and hotels, would pay referral fees. This could be a consumer SuperApp that would be an "agent" for day-to-day planning, purchases, and basic services.

The user wouldn’t pay via a subscription, but via transaction fees or ad take rates on purchases. The AI agent could still produce the best response while driving extremely high-value business to a company almost instantly, business on which the company would be willing to pay a take rate.

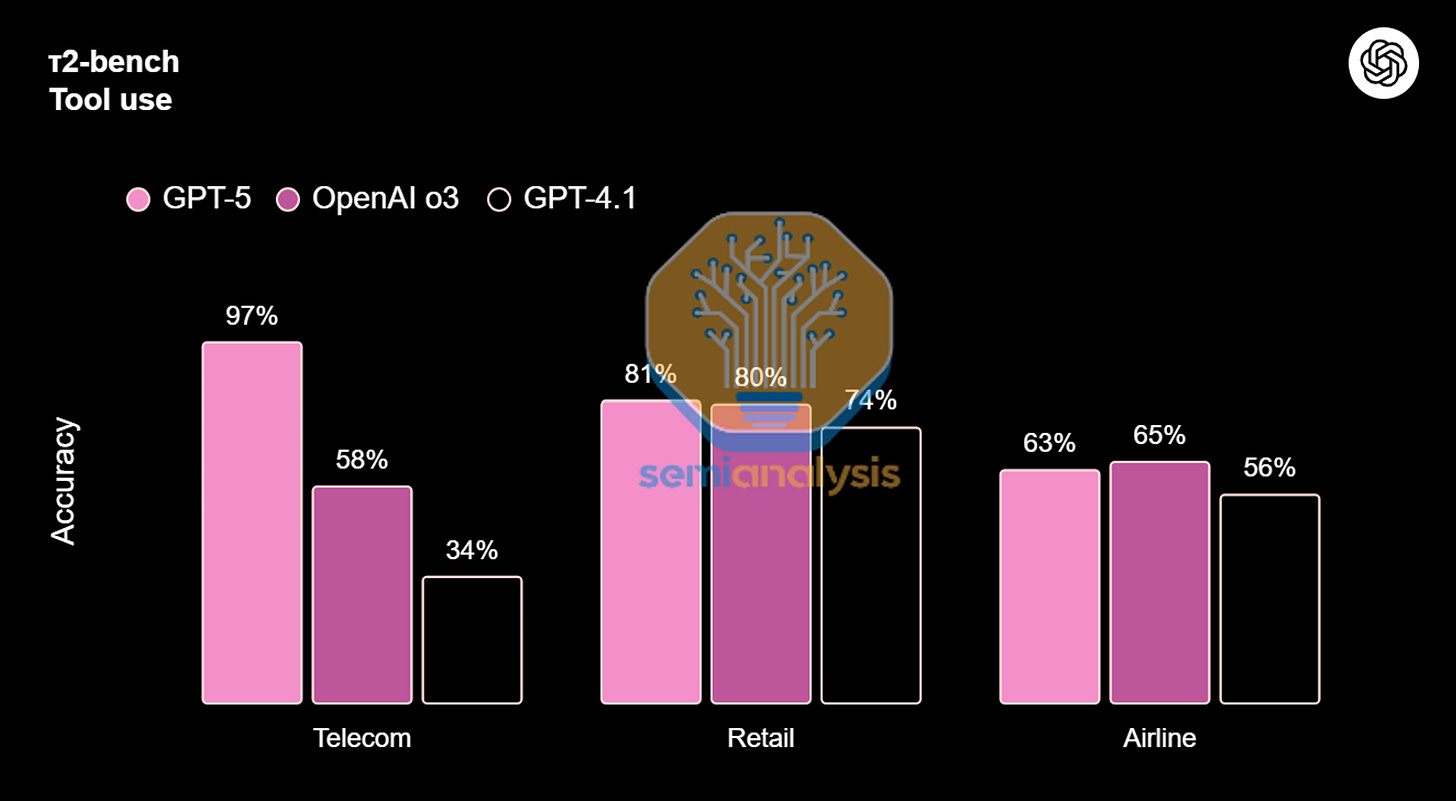

What’s more, you can see glimpses of this in the model release notes. They highlighted Gmail and Google Calendar integration, as well as new tool use benchmarks for services such as Telecom, Retail, and Airlines. Imagine a world where very little customer acquisition cost is spent on ads, and users instead ask a helpful AI assistant to set up the best internet plan in their neighborhood.

And while this feels like speculation, this is already happening. Instacart added a feature to let agents check out products in January of this year. Fidji Simo was at Instacart when this was implemented, and she has now joined OpenAI as CEO of Applications. The wheels are already in motion; this will be the future of free usage at ChatGPT.

AI labs such as Anthropic and OpenAI are even paying startups hundreds of thousands of dollars to spin up replicas of popular sites like DoorDash and Amazon to train agents with reinforcement learning (RL) on successfully completing end-to-end transactions. It is not a question of if, but when, this capability arrives.

You can see the future. Imagine a world where you ask for new dinner recipes for the week, and ChatGPT gives you multiple options and fills the cart for you to check out. The fee would be paid on the completion of the purchase, and Search is completely cut out of the picture. Everything that can be researched or planned in an AI app could be purchased from companies that adopt partnerships quickly.

Companies would flock quickly to this large new purchasing habit: booking flights, purchasing items, buying food, etc. If there is a connection to a website and payment information, this could all be monetized at the point of transaction while the application itself remains “free” to use.

And while we are far from this future, the router is the necessary step to begin the sorting of high and low compute, and eventually commercial intent queries. None of this is possible without a single unified interface routing dynamic responses to users.

This would fulfill a vision for non-intrusive ads by Sam Altman, as well as continue to enable ChatGPT to be a trusted advisor to users for free. ChatGPT will become an agent to help users make one of the most important decisions in their day-to-day lives: buying stuff. OpenAI and Shopify are already working on a checkout integration today.

From Today to Agentic Purchasing End State

It’s no secret that we are very far from that future today. It would likely take a few steps before a true product launch, but it starts with a Router and likely many partnerships on the other side as connectors. Maybe in the beginning, ChatGPT gets affiliate fees for the currently recommended items that lead to a purchase. This would look like a typical affiliate marketing deal, and would have hard-to-measure success rates and lower take rates.

As the model becomes more agentic, it would likely need to plug into each service’s systems to be able to make reservations, book flights, or schedule appointments. That would likely require heavy partnership.

Let’s examine some of the partnerships they have already made today. So far OpenAI has partnered with:

Finance Companies: Stripe, Visa, PayPal

Consumer Companies: Mattel, Booking.com, Lowe’s

Enterprise Software: Salesforce, Intercom, Zendesk

Consumer Internet: Snapchat, Shopify, Instacart, Mercari

Every company that can and will shift to a cheaper customer acquisition cost will be excited to move sooner. There will be low overhead, as less customer service, advertising, marketing and other functions will be needed as ChatGPT collapses that entire purchasing funnel into a helpful assistant.

OpenAI is Creating a Consumer SuperApp

OpenAI has clearly crossed the chasm with its web-scale presence. And every single large internet-scale property with this many users has been monetized for “free” by ads. Agentic purchasing with take rates can begin now after the implementation of the router.

We now turn our focus to the hyperscalers, who are facing increasing competition in the consumer space, and to the smaller companies that are already benefiting from the shift of monetizable queries away from search to AI.

OpenAI is firmly knocking on the door of technology giants Google and Meta and even Amazon. Previous scares about AI have focused on lost search query volume, not on incumbents being replaced in the ad tech stack. ChatGPT can compete with dominant platforms for its place in the ecosystem, and to date this push into purchasing is the most concrete example of OpenAI coming for advertising at large. If OpenAI were to launch an aggressive Agentic checkout solution before Meta or Google, it would be seen as a huge competitive shot at both companies.

A reminder that if we are talking about pure usage, only one company is growing users at a meaningful rate. It's OpenAI.

In some ways, by completely bypassing the top-of-funnel role of search and pushed ads, agentic purchasing creates a third space for purchasing. Social media time continues to climb, as human-to-human interaction is still the primary consumer of time, so the shift in the research portion of consumer journeys would more heavily impact Google, but there is opportunity for smaller players. Certain smaller players are actually winning here, as shown by the data below.