Claude Code is the Inflection Point

What It Is, How We Use It, Industry Repercussions, Microsoft's Dilemma, Why Anthropic Is Winning

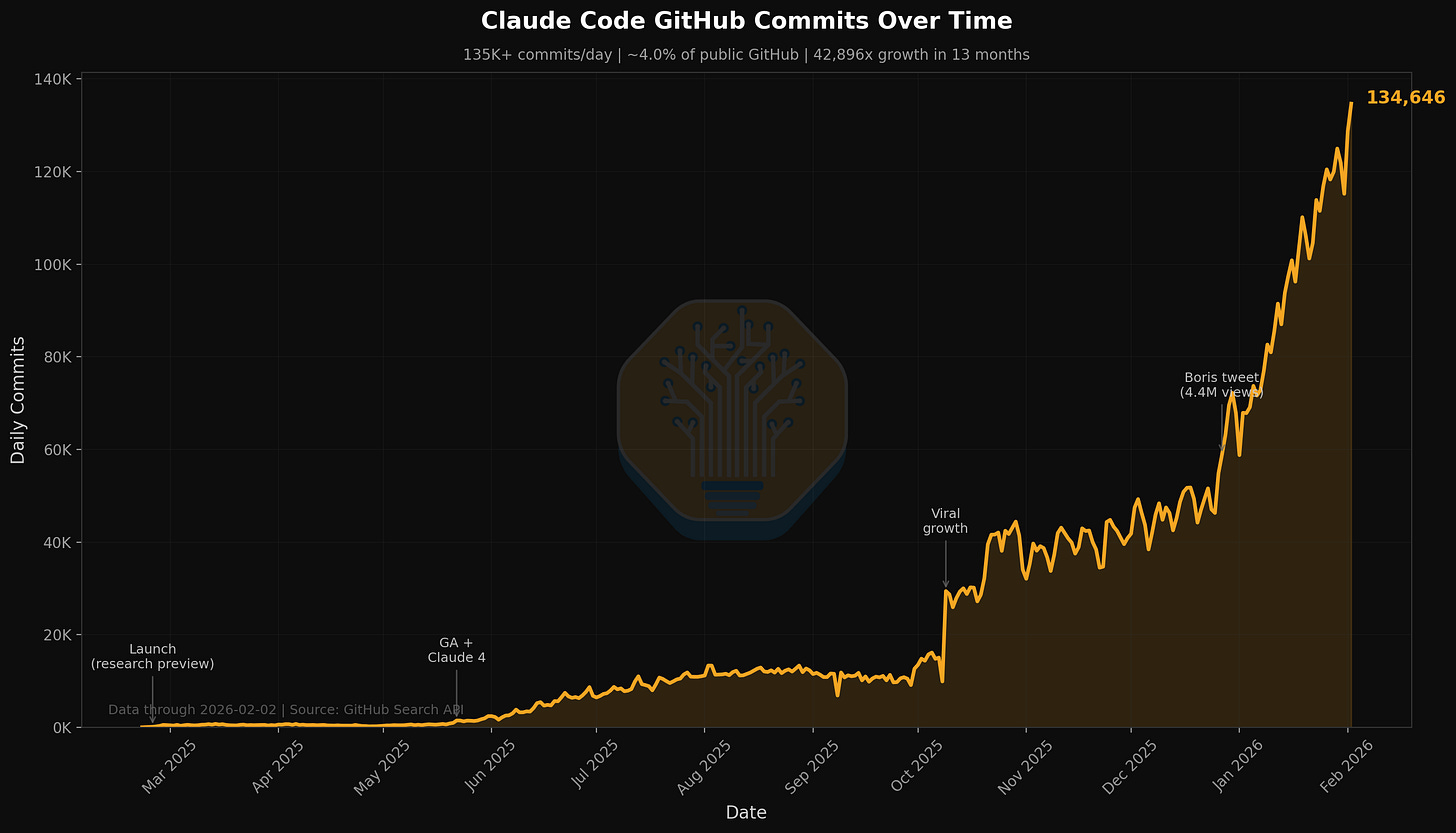

4% of public GitHub commits are being authored by Claude Code right now. On the current trajectory, we believe that Claude Code will account for 20%+ of all daily commits by the end of 2026. While you blinked, AI consumed all of software development.
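
The jump from 4% to 20% implies a specific compounding rate. The sketch below backs that rate out under an assumption that is ours, not the source's: that roughly 10 to 11 months separate the 4% reading from the end of 2026. The function name `implied_monthly_growth` is illustrative.

```python
def implied_monthly_growth(start_share: float, end_share: float, months: float) -> float:
    """Constant month-over-month growth rate that compounds start_share into end_share."""
    return (end_share / start_share) ** (1 / months) - 1


# Assumption (not from the source): 10-11 months between the 4% reading and end of 2026.
for months in (10, 11):
    rate = implied_monthly_growth(0.04, 0.20, months)
    print(f"{months} months: {rate:.1%} compounded monthly growth in commit share")
```

Under those assumptions, Claude Code's share of commits would need to compound at roughly 16-18% per month to hit the forecast.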

Our sister publication Fabricated Knowledge has compared software to linear TV during the rise of the internet, and argues that Claude Code represents a new layer of intelligence on top of software, akin to DRAM versus NAND. Today, SemiAnalysis dives into what Claude Code is, its repercussions, and why Claude is so good.

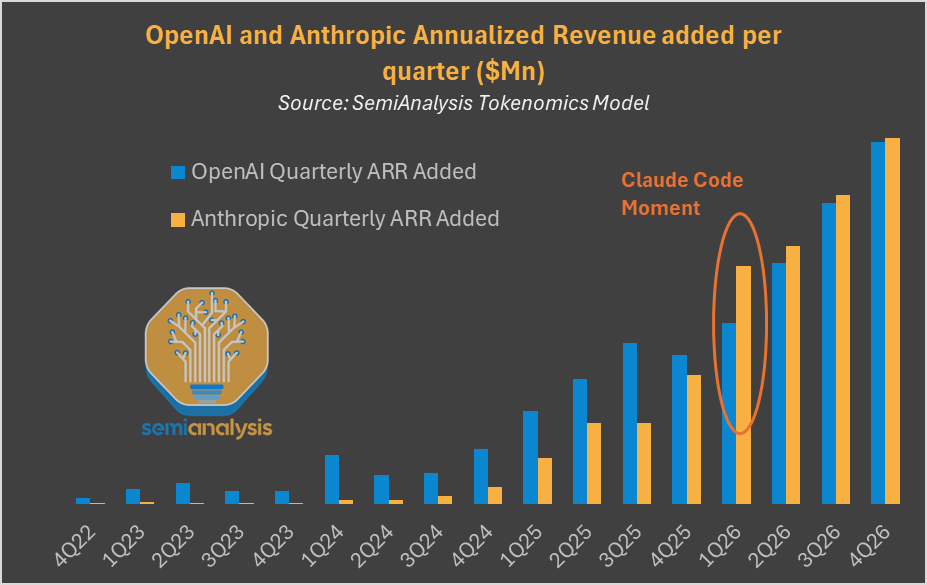

We believe that Claude Code is the inflection point for AI “Agents” and is a glimpse into the future of how AI will function. It is set to drive exceptional revenue growth for Anthropic in 2026, enabling the lab to dramatically outgrow OpenAI.

We built a detailed economic model of Anthropic and precisely quantified revenue and capex implications for its cloud partners AWS, Google Cloud, Azure, as well as associated supply chains such as Trainium2/3, TPUs and GPUs. This is the core purpose of the Tokenomics model.

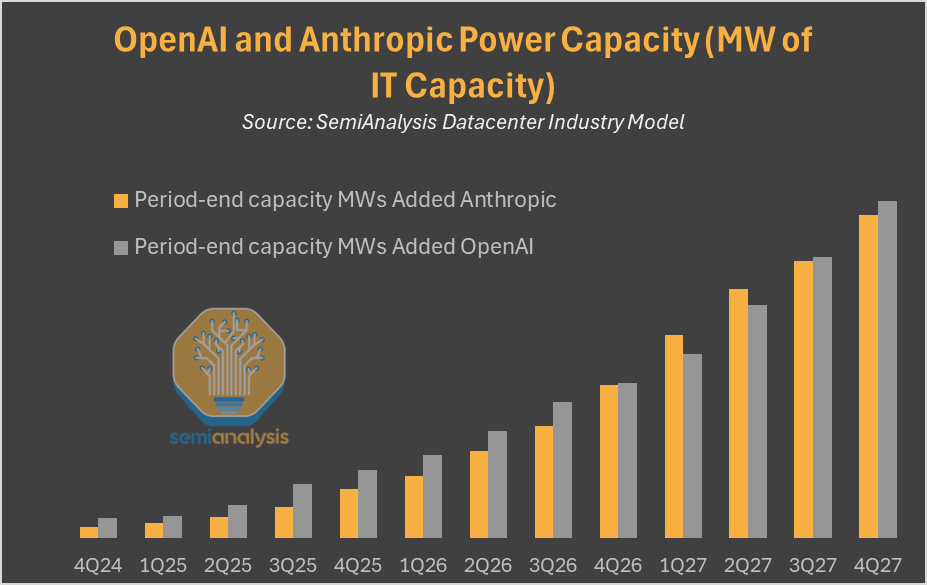

Anthropic is on track to add as much power as OpenAI over the next three years. Refer to our Datacenter Industry Model for a building-by-building tracker of Anthropic and OpenAI. Sam’s AI lab is notably suffering from multiple datacenter delays, which we flagged months ahead of the headlines, most notably in our CoreWeave Q3 2025 earnings preview, where we explicitly called a large capex guidance miss.

Since more compute means more revenue, we can forecast ARR growth and compare Anthropic to OpenAI directly.

Notably, our forecast shows that Anthropic’s quarterly ARR additions have overtaken OpenAI’s. Anthropic is adding more revenue every month than OpenAI. We believe Anthropic’s growth will be constrained by compute.

Let’s dig deeper into Anthropic’s crown jewel: Claude Code.

Claude Code and the Agentic Future

Agents will be the primary way organic intelligence (humans) interacts with artificial intelligence (AI). But Claude Code is also a demonstration of the reverse: how agents interact with humans.

We believe the future of AI will be about the orchestration of tokens, not just selling tokens at base cost. Using history as a guide, we view the OpenAI ChatGPT API as a simple call and response of tokens, akin to Web 1.0, when TCP/IP connected users to static websites hosted on the Internet. TCP/IP remained foundational, but during Web 2.0 and the shift to dynamic web pages, the protocol became merely a means to an end. Today, the internet uses TCP/IP packets to organize far larger sets of information than a static website. The protocol matters, but it was the applications built on top of it that created trillions in value.

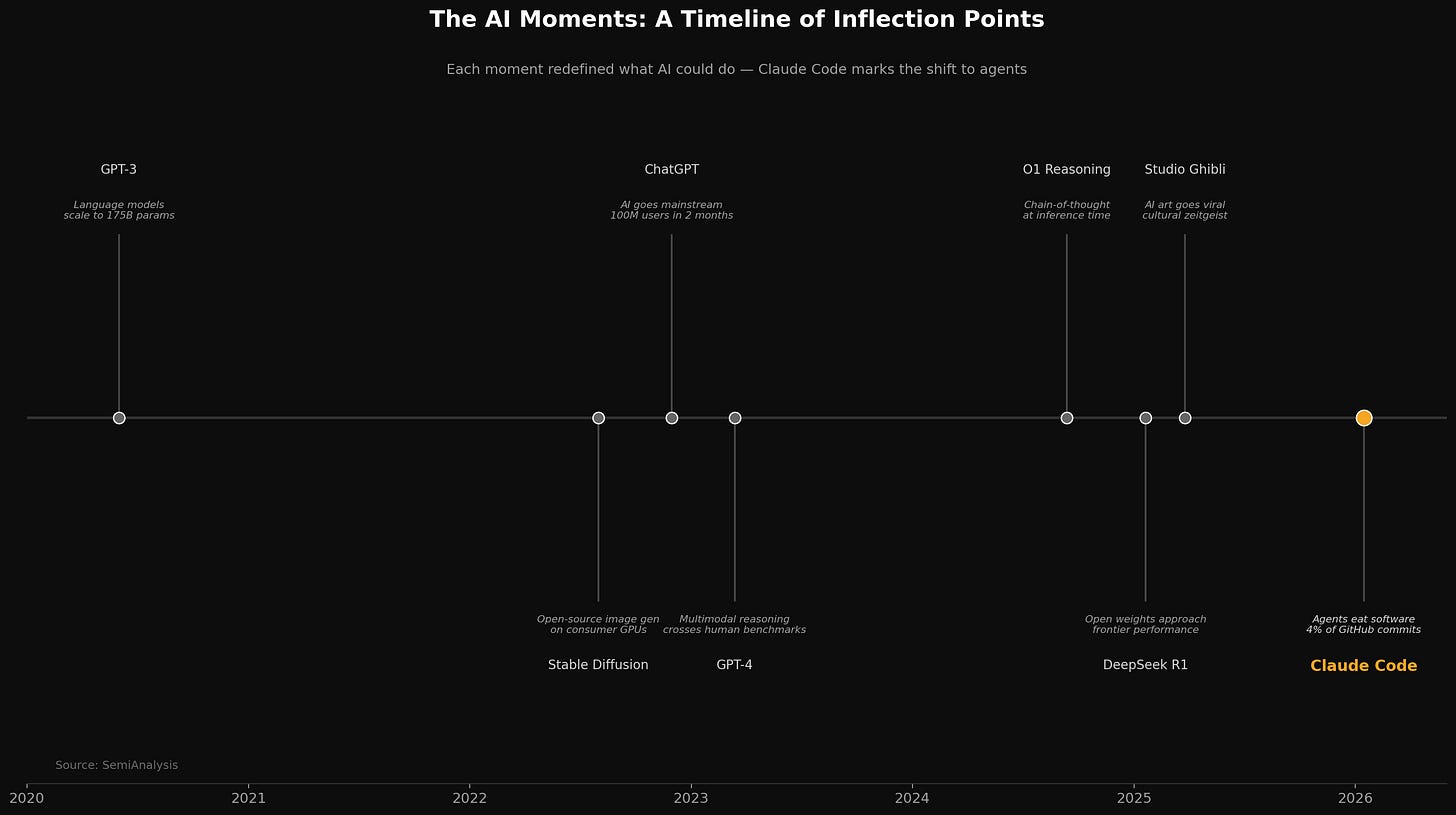

This is why SemiAnalysis believes we are at another critical moment in AI, one that matches, if not exceeds, the ChatGPT moment of early 2023.

Each moment expanded what AI could do. GPT-3 proved scale worked. Stable Diffusion showed AI could make images. ChatGPT proved demand for intelligence. DeepSeek proved that it could be done at a smaller scale, and o1 showed that you could scale models to even better performance. The viral Studio Ghibli moment was just an adoption point, while Claude Code is a new breakthrough in the agentic layer, organizing model outputs into something more.

What is Claude Code?

Claude Code is a terminal-native AI agent, not an IDE or a chatbot sidebar like Cursor. Claude Code is a CLI (command line interface) tool that reads your codebase, plans multi-step tasks, and then executes them. It is more accurate to think of Claude Code not as a tool focused only on code, but as “Claude Computer.” With full access to your computer, Claude can understand its environment, make a plan, and iteratively complete that plan, taking direction from the user the whole time.

Claude Code does more than just code and is the best example of an AI agent. You interact with the computer in natural language, describing objectives and outcomes rather than implementation details. Give Claude (the CLI) an input such as a spreadsheet, a codebase, or a link to a webpage, and then ask it to achieve an objective. It makes a plan, verifies details, and then executes it.

It is a glimpse of the future, but in software it is already here today. Your favorite engineers are vibe coding:

Andrej Karpathy, who coined the term “vibe coding” a year ago, is openly discussing the phase shift, and specifically says: “I’ve already noticed that I am slowly starting to atrophy my ability to write code manually. Generation (writing code) and discrimination (reading code) are different capabilities in the brain.”

Malte Ubl, CTO of Vercel, says that his “new primary job” is “to tell AI what it did wrong.”

Ryan Dahl, creator of Node.js, says that “the era of humans writing code is over.”

David Heinemeier Hansson, creator of Ruby on Rails, is experiencing a kind of anticipatory nostalgia, reminiscing about writing code by hand while still writing code by hand.

Boris Cherny, creator of Claude Code, says that “Pretty much 100% of our code is written by Claude Code + Opus 4.5.”

Even Linus Torvalds is vibe coding: https://github.com/torvalds/AudioNoise

But it isn’t just coders. Here at SemiAnalysis, our analysts and technical staff have different roles and responsibilities. The Datacenter Model team needs to review hundreds of documents every week. Our AI Supply Chain team needs to inspect BOMs with thousands of line items. Our Memory Model team needs to build forecasts in near-real time as spot market prices explode. Our technical staff need to maintain a live dashboard for InferenceMAX, including nightly runs of the latest software recipes across 9 different system types/clusters. From regulatory filings to permits, spec sheets to documentation, config to code, the way we interact with our computers has changed.

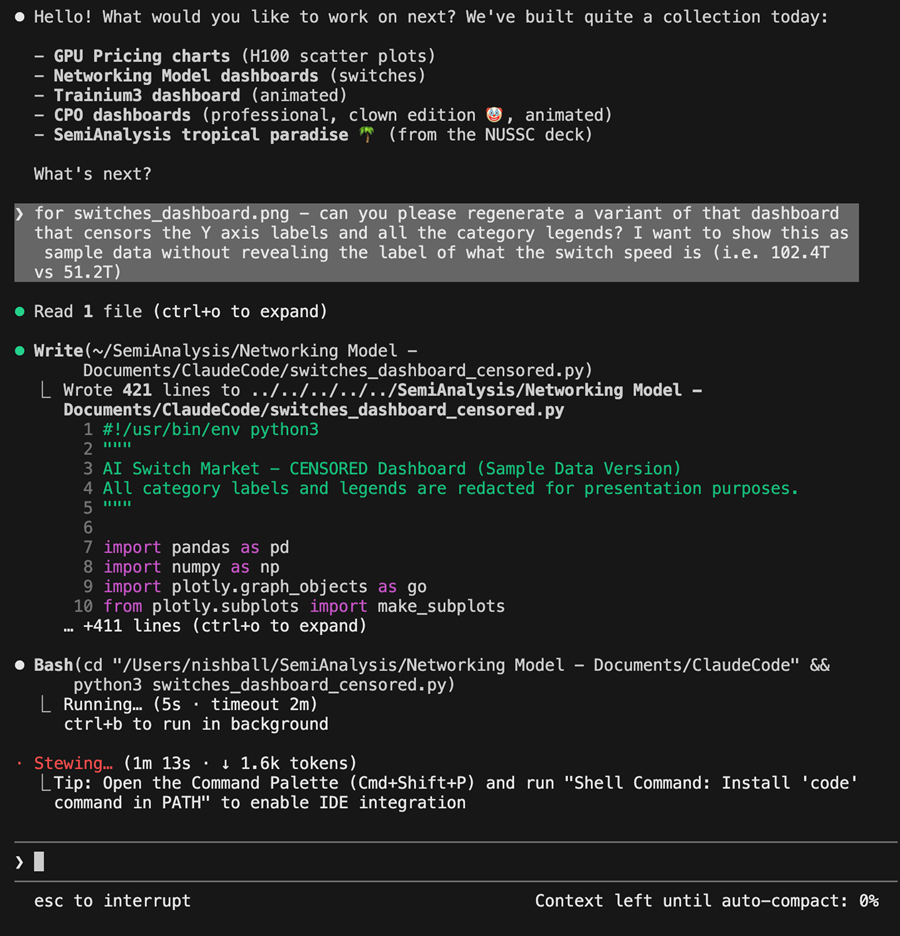

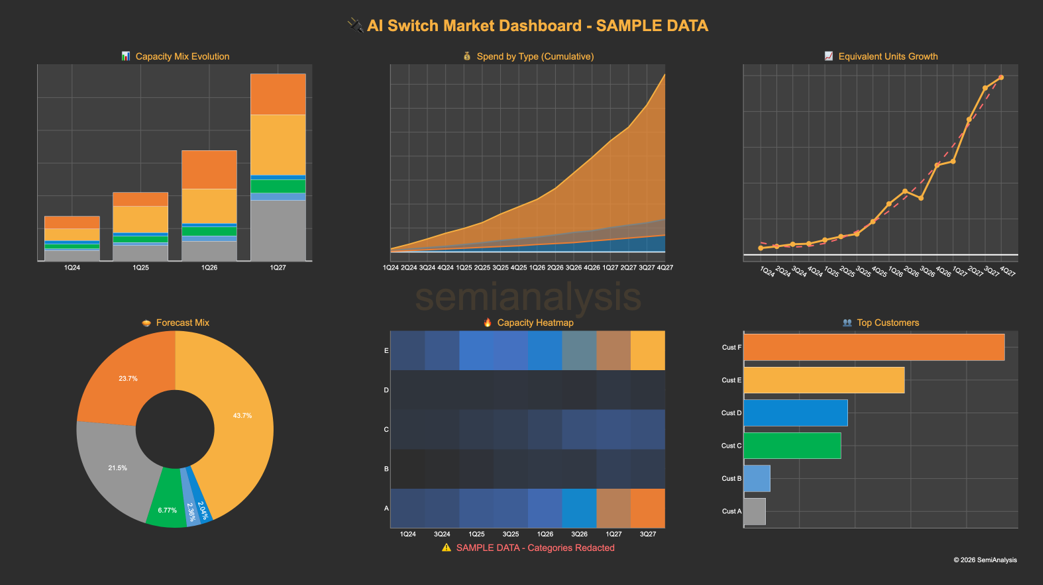

As an example, our industry model analysts now use Claude Code to generate a wide range of diagrams and analyses that surface and communicate important trends in large datasets:

Here’s an input:

And here’s the output:

Coders will stop writing code and instead request that jobs be done on their behalf. The magic of Claude Code is that it just works. Many famous coders are finally giving in to the new wave of vibe coding and realizing that coding is close to a solved problem, one better handled by agents than by humans.

The locus of competition is shifting. Obsessing over linear benchmarks to decide which model is “best” will look quaint, akin to debating how fast your dial-up is compared to DSL. Speed and performance matter, and the models are what power agents, but performance will be measured by the net output of packets that make up a website, not by the quality of each packet. The website features of tomorrow will be orchestration through tools, memory, sub-agents, and verification loops that create outcomes, not responses. And all information work is finally addressable by models.

Opus 4.5 is the engine that makes all of this possible, and what is important in linear benchmarking might not matter at all for agentic, long-horizon tasks. More on that later.

Beyond Coding: The Beachhead, not the Destination

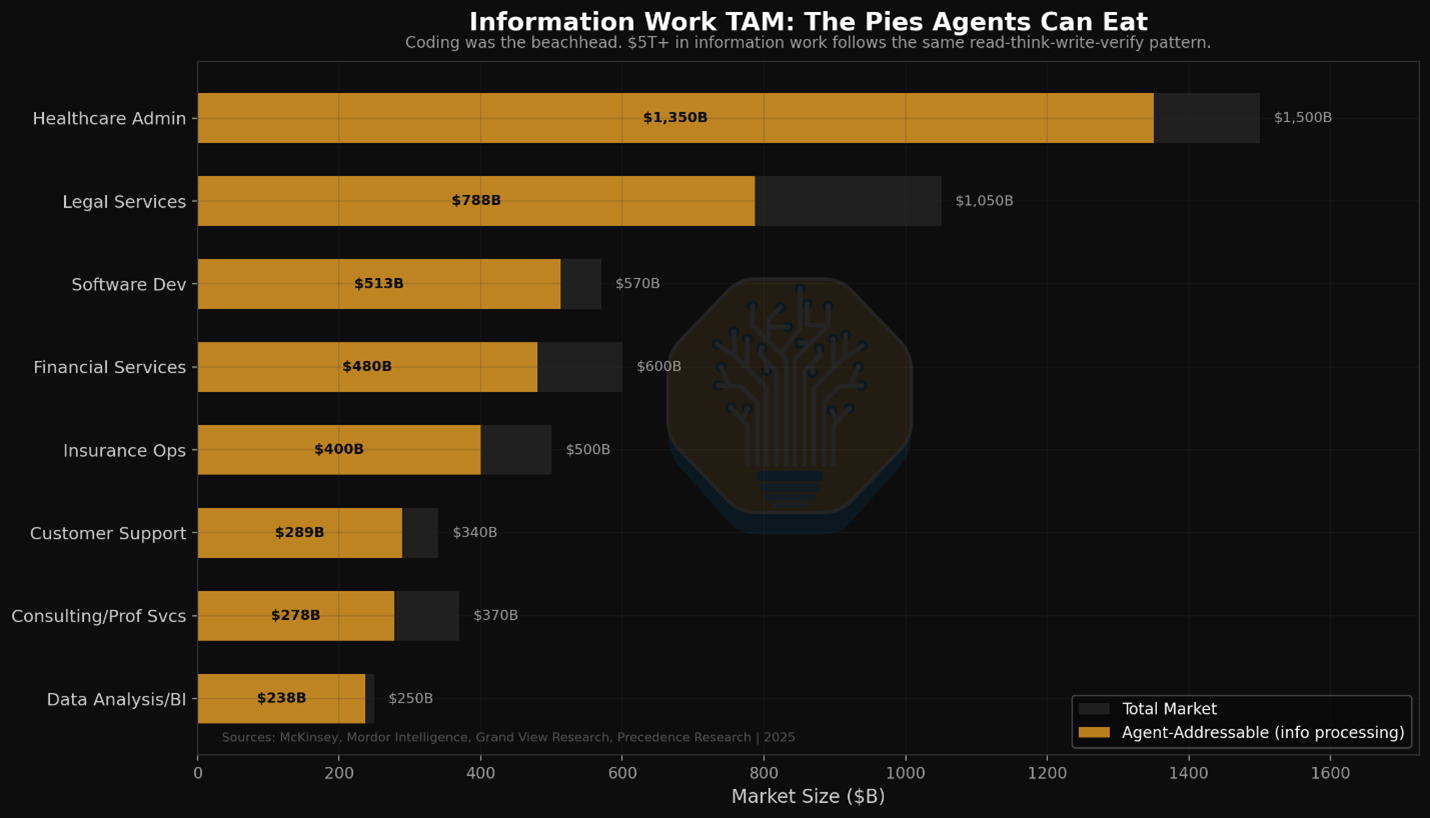

Coding was once the most valuable work of all, with programmers in hot demand during the software engineering boom of the 2020 era. Coding is now the beachhead for disruption by agentic information processing, and the larger $15 trillion information work economy is now at risk. There are over 1 billion information workers, roughly a third of the global workforce of 3.6 billion, per the ILO.

Most workflows in information work share the same structure that Claude Code has proven works for software: READ (ingest unstructured information), THINK (apply domain knowledge), WRITE (produce structured output), and VERIFY (check against standards). This describes large swathes of information work (including research!), and if agents can eat software, what labor pool can they not touch?
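
To make the READ, THINK, WRITE, VERIFY pattern concrete, here is a toy expense-report task. This is a minimal sketch, not how Claude Code is implemented: in a real agent an LLM would perform the THINK step, whereas here a hypothetical keyword table (`CATEGORY_RULES`) stands in for domain knowledge. The receipt data and function names are made up for illustration.

```python
import csv
import io

# Hypothetical "domain knowledge": keyword -> expense category.
CATEGORY_RULES = {"coffee": "Meals", "lunch": "Meals", "taxi": "Travel", "hotel": "Travel"}
FIELDS = ["date", "item", "category", "amount"]


def read(raw: str) -> list[str]:
    """READ: ingest unstructured text, one receipt per non-empty line."""
    return [line.strip() for line in raw.splitlines() if line.strip()]


def think(lines: list[str]) -> list[dict]:
    """THINK: apply domain rules to turn each line into a structured record."""
    records = []
    for line in lines:
        date, item, amount = (part.strip() for part in line.split(","))
        category = CATEGORY_RULES.get(item.lower(), "Other")
        records.append({"date": date, "item": item, "category": category, "amount": float(amount)})
    return records


def write(records: list[dict]) -> str:
    """WRITE: produce structured output (CSV text)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def verify(output: str, lines: list[str]) -> list[str]:
    """VERIFY: check the output against simple standards and list any problems."""
    rows = list(csv.DictReader(io.StringIO(output)))
    problems = []
    if len(rows) != len(lines):
        problems.append(f"expected {len(lines)} rows, got {len(rows)}")
    problems += [f"uncategorized: {row['item']}" for row in rows if row["category"] == "Other"]
    return problems


raw = """
2026-01-03, Coffee, 4.50
2026-01-04, Taxi, 18.20
2026-01-05, Printer paper, 9.99
"""
lines = read(raw)
report = write(think(lines))
print(report)
print(verify(report, lines))  # flags the one receipt the rules could not classify
```

In an agentic loop, the problems returned by VERIFY would be fed back into another THINK pass (or escalated to a human) rather than simply printed.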

Our view is that very few are out of reach, and with the rise of Claude Code (and Cowork), the total addressable market of agents is much larger than just LLMs. Agents will expand from niche markets like customer support and software development into the much larger financial services, legal, consulting, and other industries. This is the core focus of the SemiAnalysis Tokenomics Model.

The “killer use case” in coding, combined with the clear generalizability of Claude Code and Cowork, justifies a completely different calculus. Automating most call-and-response and information-fetching work is likely doable, and that dramatically expands the absolute dollars at stake. The goal of the Tokenomics model is to track additional killer use cases and TAM as agentic AI expands into all facets of business.

Adoption Constraints: Task Horizon

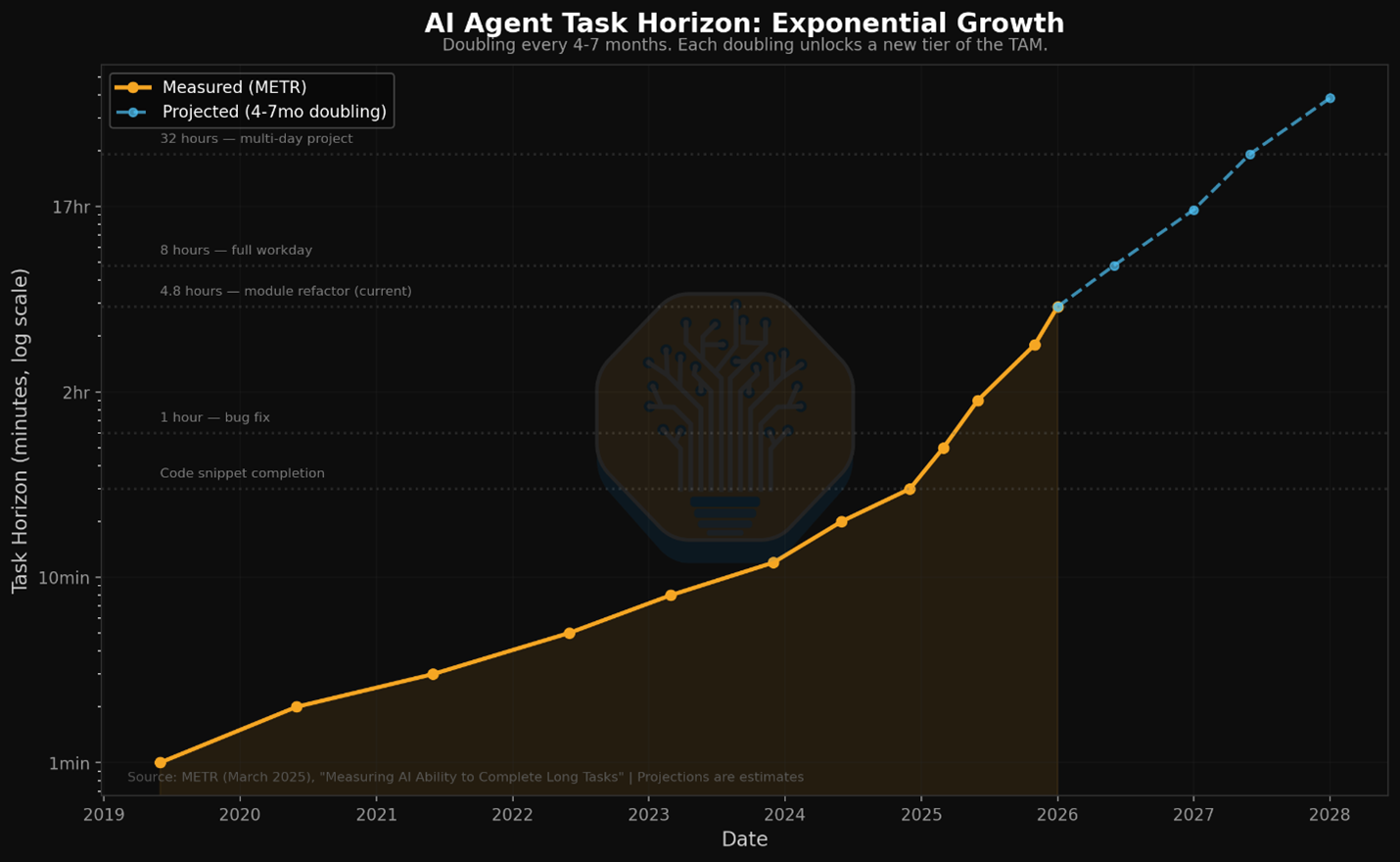

What really opens larger parts of the pie to disruption is a longer task horizon: how long can an agent work before it fails its task? METR data shows autonomous task horizons doubling every 4-7 months, accelerating to ~4 months in 2024-2025.

Each doubling unlocks more of the total pie. At 30 minutes, you can auto-complete code snippets; at 4.8 hours, you can refactor a module; with multi-day horizons, you can automate an entire audit. And it’s clear Anthropic sees this too.
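
The doubling rate translates directly into timelines. The sketch below assumes a clean exponential trend starting from a 30-minute horizon and treats a “multi-day” task as 40 working hours; both are our illustrative assumptions, not METR figures, and `months_to_reach` is a hypothetical helper.

```python
import math


def months_to_reach(start_hours: float, target_hours: float, doubling_months: float) -> float:
    """Months for a task horizon to grow from start_hours to target_hours,
    assuming it doubles every `doubling_months` months."""
    doublings = math.log2(target_hours / start_hours)
    return doublings * doubling_months


# Milestones from the text; the 40-hour "multi-day" figure is our own assumption.
milestones = {"refactor a module": 4.8, "multi-day audit (assumed 40 h)": 40.0}
for label, hours in milestones.items():
    for doubling in (4, 7):  # the 4-7 month doubling range cited above
        months = months_to_reach(0.5, hours, doubling)
        print(f"{label}: {months:.0f} months at a {doubling}-month doubling time")
```

At a ~4-month doubling time, going from 30 minutes to 4.8 hours takes a little over three doublings, or roughly 13 months; at 7 months per doubling, the same step takes nearly two years.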

On January 12, 2026, Anthropic launched Cowork, “Claude Code for general computing.” Four engineers built it in 10 days, and most of the code was written by Claude Code itself. It uses the same architecture: Claude Agent SDK, MCP, and sub-agents. It creates spreadsheets from receipts, organizes files by content, and drafts reports from scattered notes. It’s Claude Code minus the terminal, plus a desktop.

This is a glimpse of the future: a harness that understands the context of your day-to-day work and can build information-processing workflows as needed. Instead of you downloading reports from your database and turning them into charts by hand, an agent will generate the report in Excel for you, with better formatting than you could manage yourself. Whenever you need to gather information about, say, a sales quota, your agent will extract it from a UI or API and generate the report on your behalf. Information work itself is going to be automated the way Claude Code has automated software engineering.

While it is not perfect today, it can clearly process, synthesize, and format data faster than most humans, and in some cases at higher fidelity and lower cost than the average worker. There will be hallucinations, but most existing processes already contain plenty of human-led errors. If information is processed at a viable level of fidelity and then passed to the next step, that alone will massively increase the supply of work. We are at the point where any individual can type a request into one of these agent workflows and run a multivariable regression that would have taken a lifetime of training in the 2000s.

The Stack Overflow 2025 Developer Survey found 84% of developers using AI, and developers are the bleeding edge of adoption. Yet only 31% use coding agents, which means the penetration curve is still early, and earlier still for broader waves of information work. Just as coding agents spread in the blink of an eye, broader information work will quickly see AI adoption.

The Price of Intelligence is Collapsing

Software engineering has always been, and will remain, the gold standard of information work. But now that quality has finally crossed a critical threshold, the relationship between coders and their tools has flipped. Coders are effectively directing a black-box tool to achieve outcomes, which is possible because the quality of token intelligence has risen while its cost has fallen dramatically. One developer with Claude Code can now do what took a team a month.

Claude Pro and ChatGPT Plus cost $20 a month, while a Max subscription costs $200 a month. The median US knowledge worker costs ~$350-500 a day, fully loaded. An agent costing ~$6-7 a day (the $200 monthly plan spread over 30 days) that handles even a fraction of that worker’s daily workflow delivers a 10-30x ROI, before counting any improvement in intelligence.
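
The ROI range depends on how much of a worker’s day the agent actually absorbs, which the text leaves open. This sketch makes that share an explicit, hypothetical parameter and spreads the $200 monthly plan over 30 calendar days; the scenario values are our assumptions.

```python
MAX_PLAN_PER_MONTH = 200.0  # $200/month Max subscription, from the text
DAYS_PER_MONTH = 30         # assumption: spread the subscription over calendar days
AGENT_COST_PER_DAY = MAX_PLAN_PER_MONTH / DAYS_PER_MONTH  # about $6.67/day


def roi(worker_cost_per_day: float, share_handled: float) -> float:
    """Value of the work the agent absorbs divided by the agent's daily cost."""
    return worker_cost_per_day * share_handled / AGENT_COST_PER_DAY


# Hypothetical scenarios: the share of a worker's day the agent handles is our assumption.
for cost, share in [(350, 0.20), (425, 0.30), (500, 0.40)]:
    print(f"${cost}/day worker, {share:.0%} handled -> {roi(cost, share):.1f}x")
```

Under these assumptions, the 10-30x range corresponds to an agent handling roughly 20-40% of a $350-500/day worker’s output.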

Enterprise is Already Starting to Move

The massive deflation in the cost of intelligence is going to reprice every information company’s margin on repeatable work. Accenture just signed a deal to train 30,000 professionals on Claude, the largest Claude Code deployment to date. Accenture will focus on financial services, life sciences, healthcare, and the public sector, all huge untapped markets for information automation. OpenAI just announced Frontier, focused on enterprise adoption.

Enterprise software has been the first and most obvious casualty of the great decline in the cost of intelligence. SaaS itself is just information-processing workflows crystallized into code. The three moats of SaaS, namely switching costs of data (data is trapped), workflow lock-in (learning the UI), and integration complexity (how Slack works with Jira), have all been partially eroded at the margins. SaaS’s 75% gross margins now look like a huge opportunity: agents migrate data between systems at lower cost, agents do not rely on human-oriented workflows, and MCP makes integration much easier. Every aspect of SaaS is getting cheaper, and its margins have become AI’s first opportunity.

A simple example: an agent can now query a Postgres database directly on your behalf, generate a chart, and email it to a stakeholder. That used to be the job of a SaaS workflow like a CRM, and it requires neither training humans on UI changes nor updating software. It just “works.” BI/analytics (agents querying databases), data entry, ITSM (L1/L2 ticket triage), and back-office reconciliation are already being automated! These are already knocking on the doors of some of software’s most sacred moats.
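
A minimal sketch of that query, chart, and email workflow, using Python’s built-in `sqlite3` as a stand-in for Postgres. The `leads` table, its columns, and the example.com addresses are hypothetical; the chart is plain text, and the email is only composed, not sent.

```python
import sqlite3
from email.message import EmailMessage

# Stand-in for a Postgres CRM database: an in-memory SQLite table of hypothetical leads.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE leads (region TEXT, stage TEXT, value REAL)")
conn.executemany(
    "INSERT INTO leads VALUES (?, ?, ?)",
    [("EMEA", "won", 120.0), ("EMEA", "open", 80.0), ("APAC", "won", 200.0), ("NA", "won", 50.0)],
)

# Step 1: query the data directly instead of clicking through a CRM UI.
rows = conn.execute(
    "SELECT region, SUM(value) FROM leads WHERE stage = 'won' "
    "GROUP BY region ORDER BY SUM(value) DESC"
).fetchall()

# Step 2: render a plain-text bar chart scaled so the largest region gets 20 characters.
peak = max(total for _, total in rows)
chart = "\n".join(
    f"{region:<5} {'#' * round(20 * total / peak)} {total:,.0f}" for region, total in rows
)

# Step 3: draft the email; actually sending it (e.g. via smtplib) is omitted here.
msg = EmailMessage()
msg["To"] = "stakeholder@example.com"
msg["From"] = "agent@example.com"
msg["Subject"] = "Closed-won value by region"
msg.set_content(chart)
print(msg)
```

Pointing this at Postgres would mostly mean swapping the driver, connection string, and placeholder style; the point is that each step is a few lines an agent can write and run on its own, with no UI in the loop.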

In our view, anything that has a human click buttons, gather information, and reformat it into another medium (email, chart, Excel, presentation) is at huge risk. LLMs thrive at exactly this kind of data interchange, effortlessly changing text into audio, English into Chinese, and words into images. And in our view, this poses a huge threat to one of the biggest companies in the world: Microsoft.

Competitive Landscape (Microsoft’s Conundrum)

The cost collapse is destroying the seat-based software model. Internally at SemiAnalysis, the massive adoption of Claude Code has produced no bigger share shift than the one away from Microsoft’s seat-based Office 365. Microsoft is the very definition of human-clickable buttons, and by extension, so is all seat-based software. The pattern to watch for is software that serves multi-industry workflows designed for humans.

Why does a company need to standardize on Salesforce if an agent is just going to query data on leads on your behalf? Salesforce is a form and workflow wrapper, and the form and workflow can likely be scaffolded by AI into a database and then queried as needed. Every bit of UX or preference is at risk. Tableau as a concept is outdated; Figma (wireframes for humans) is at risk. The core way a human interacts with a computer is about to change, and Microsoft sits at the center of the old paradigm.

Caught Between Two Businesses

We recently (wrongly) called for an acceleration in Microsoft’s revenue, mostly driven by its large rental fleet and a shift to external foundry capacity. But on its recent earnings call, we think Microsoft signaled a strategic pullback. Here’s the quote:

And much of the acceleration that I think you’ve seen from us and products over the past a bit is coming because we are allocating GPUs and capacity to many of the talented AI people we’ve been hiring over the past years. Then when you end up is that you end up with the remainder going towards serving the Azure capacity that continues to grow in terms of demand. And a way to think about it because I think I get asked this question sometimes is if I had taken the GPUs that just came online in Q1 and Q2 in terms of GPUs and allocated them all to Azure, the KPI would have been over 40.

The important bit of context is this:

We’re really making long-term decisions. And the first thing we’re doing is solving for the increased usage in sales and the accelerating pace of M365 Copilot as well as GitHub Copilot or first-party apps. Then we make sure we’re investing in the long-term nature of R&D and product innovation.

There are two beasts within Microsoft: Azure growth for public market investors, and Copilot investment to preserve the Office 365 product suite. To decisively win at one, Microsoft likely must lose at the other. Right now, Microsoft is one of the largest AI clouds in the world, serving companies like OpenAI and Anthropic. But it is renting GPUs to the barbarians who will ruin its castle in productivity software.

Claude for Excel is effectively what Copilot for Excel should have been, yet it was launched by an outside party on top of Microsoft’s own first-party product. Most of the cash today still comes from Office, but most of the terminal value comes from Azure revenue growth. Accelerating Azure means letting the barbarians at the gate tear down the walls even faster. Microsoft’s deals were struck when these labs were upstarts, but as OpenAI and Anthropic become larger platforms, it is unclear whether the moat will keep them out.

Ironically, Microsoft’s spending on AI must increase, or the terminal value of the O365 suite of products is going to plummet. Microsoft does have distribution, but mostly through a product whose positioning is eroding by the day relative to the AI upstarts. Meanwhile OpenAI, Microsoft’s core AI partner, is itself seeing enterprise disruption from Claude Code. OpenAI must respond swiftly to Claude Code’s rise in agentic adoption, or it risks looking like an infrastructure company (tokens) rather than a solutions company (agents). The risk of disruption is ratcheting up precipitously, and it is happening to one of the most profitable companies of all time.

GitHub Copilot and Office Copilot had a year’s head start and barely made any inroads as products. Meanwhile, Satya is literally stepping away from his day-to-day duties as CEO to act as the product manager of Microsoft AI. It is pretty clear that the stakes of this single product could be the entire company.