ClusterMAX™ 2.0: The Industry Standard GPU Cloud Rating System

95% Coverage By Volume, 84 Providers Rated, 209 Providers Tracked, 140+ Customers Surveyed, 46,000 Words For Your Enjoyment

Introduction

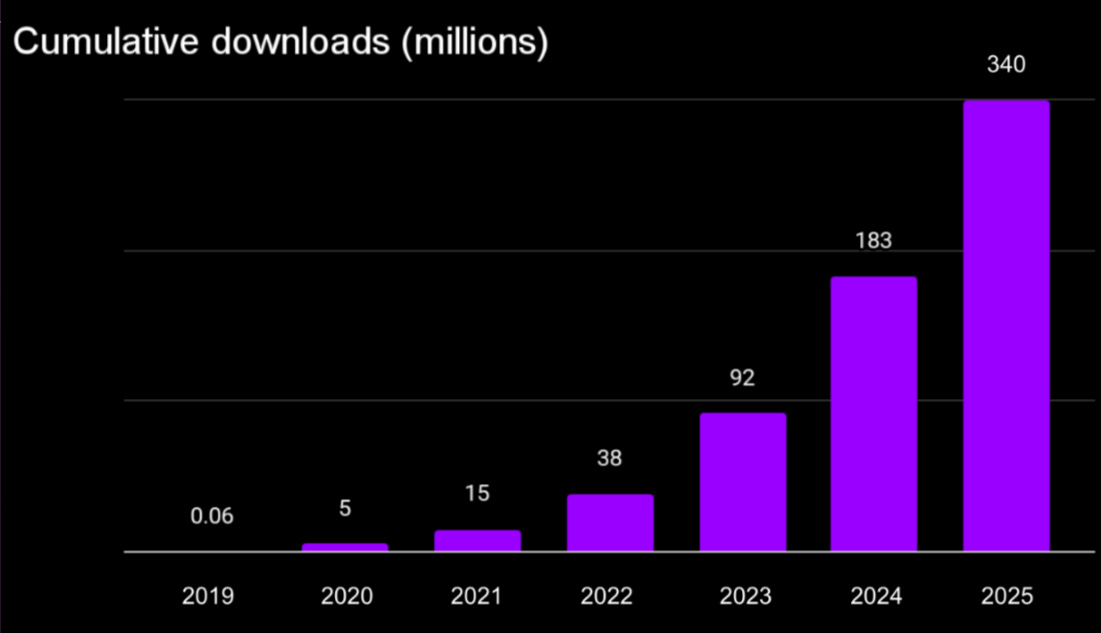

GPU clouds (also known as “Neoclouds” since October of last year) are at the center of the AI boom. Neoclouds represent some of the most important transactions in AI, the critical juncture where end users rent GPUs to train models, process data, and build inference endpoints.

Our previous research has set the standard for understanding Neoclouds:

Since ClusterMAX 1.0 was released 6 months ago, we have seen significant changes in the industry. H200, B200, MI325X, and MI355X GPUs have arrived at scale. GB200 NVL72 has rolled out to hyperscale customers and GB300 NVL72 systems are being brought up. TPU and Trainium are in the arena. And many buyers are turning to the ClusterMAX rating system as the trusted, independent third party with a comprehensive, technical guide to understanding the market.

An update is needed!

Executive Summary

YouTube summary video available here!

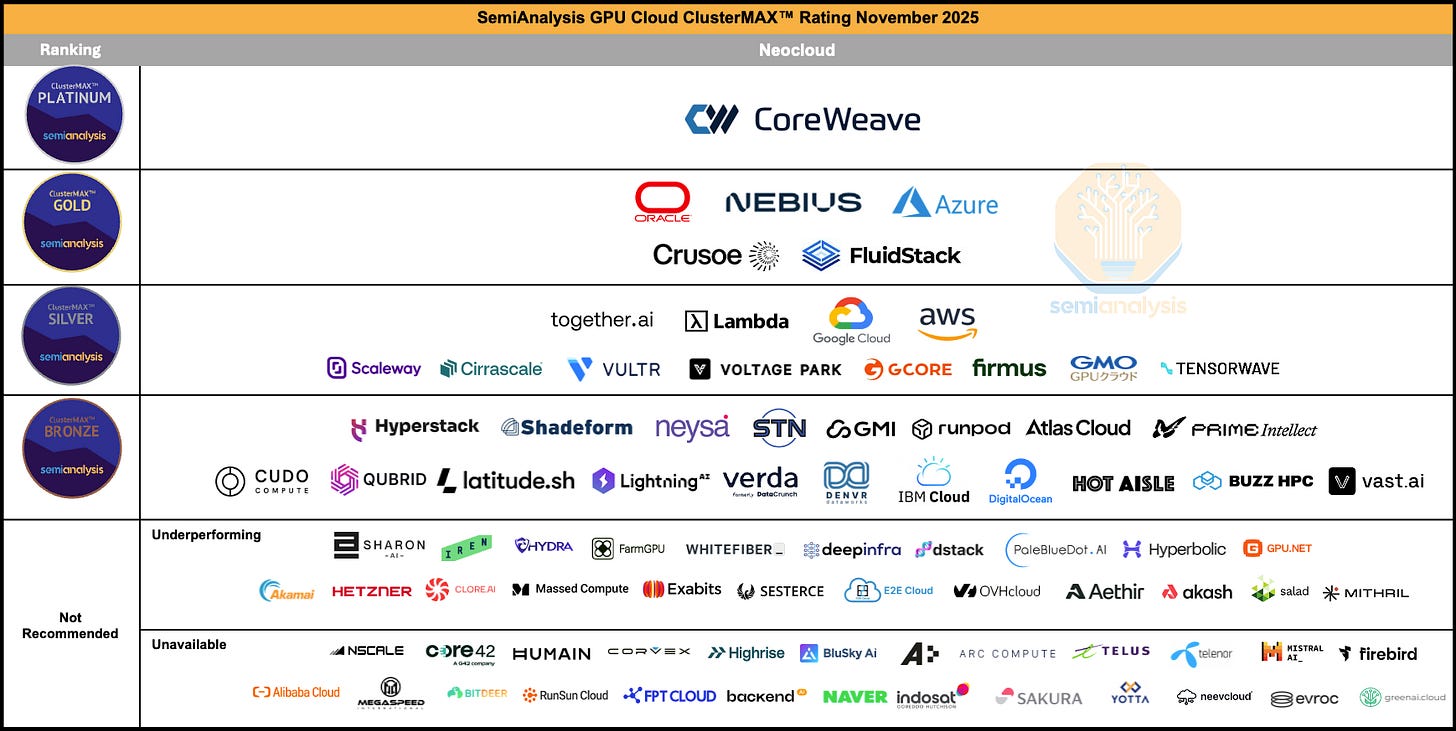

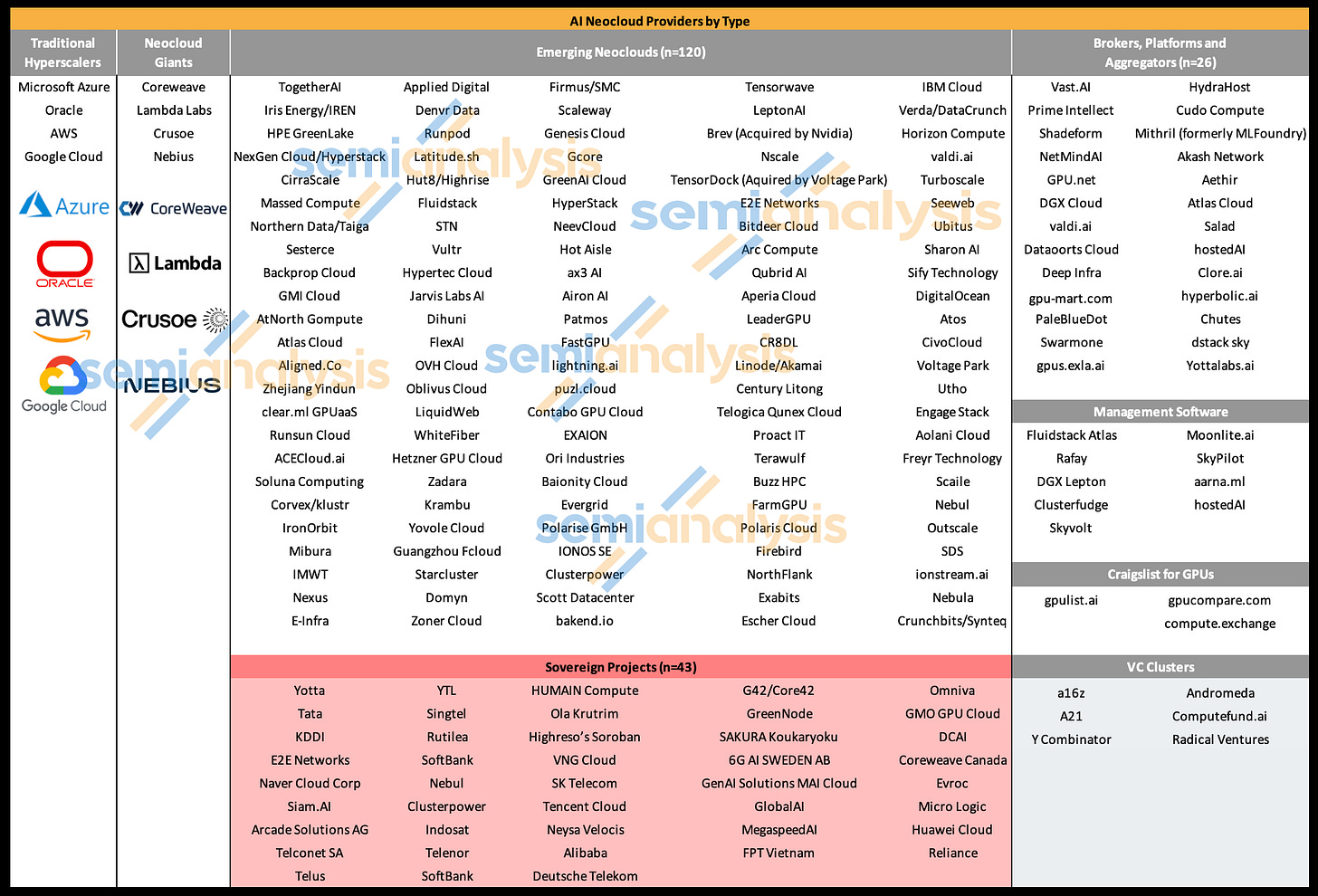

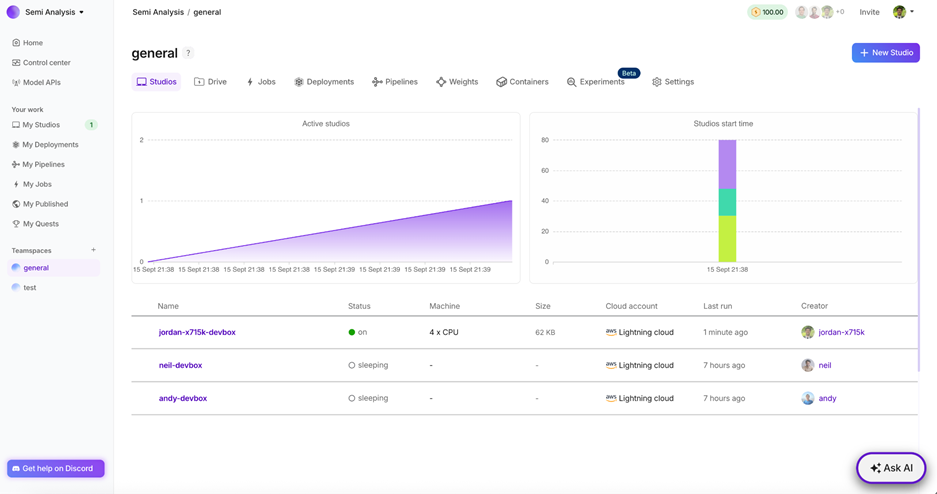

ClusterMAX 2.0 debuts with a comprehensive review of 84 providers, up from 26 in ClusterMAX 1.0. We increase our market view to cover 209 total providers, up from 169 in our previous article and 124 in the original AI Neocloud Playbook and Anatomy. We have interviewed over 140 end users of Neoclouds as part of this research.

We release an itemized list of all criteria we consider during testing, covering 10 primary categories (security, lifecycle, orchestration, storage, networking, reliability, monitoring, pricing, partnerships, availability).

We release five descriptions of our expectations, covering SLURM, Kubernetes, standalone machines, monitoring, and health checks. We encourage providers to use these lists when developing their offerings. We consider the lists as an amalgamation of our interviews with end users, and continue to pursue the quality when developing their offerings.

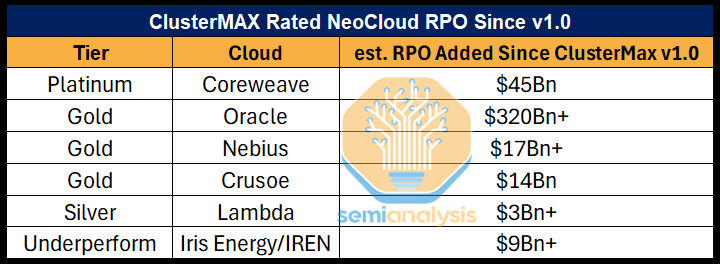

CoreWeave retains top spot as the only member of the Platinum tier. CoreWeave sets the bar for others to follow, and is the only cloud to consistently command premium pricing in our interviews with end users.

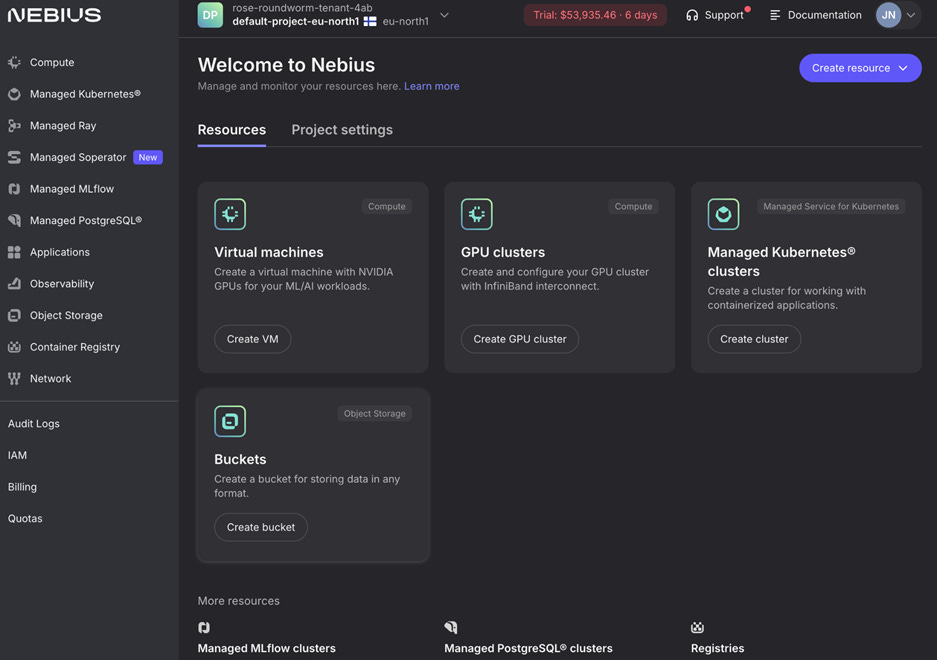

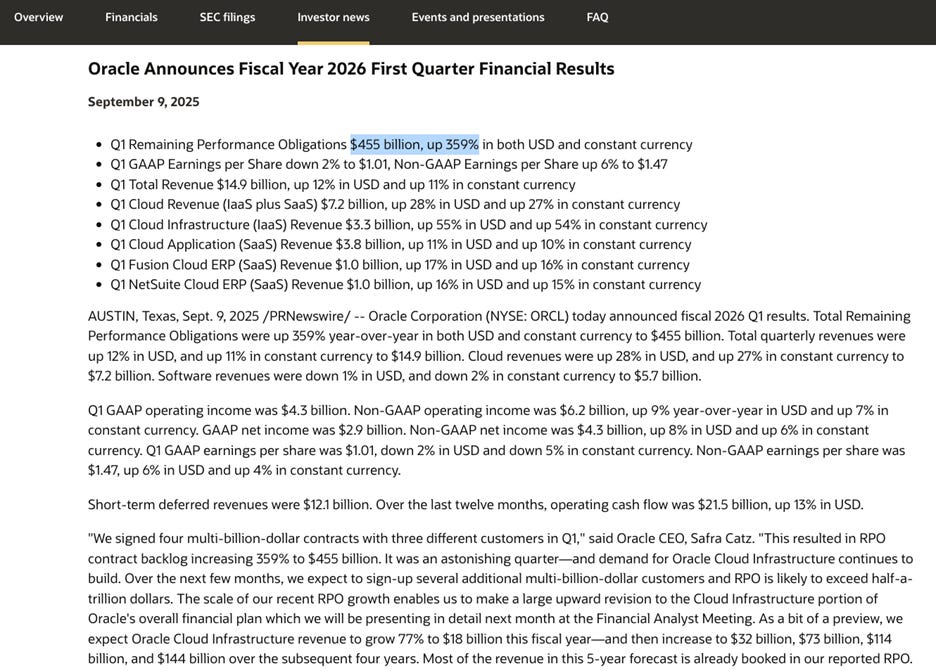

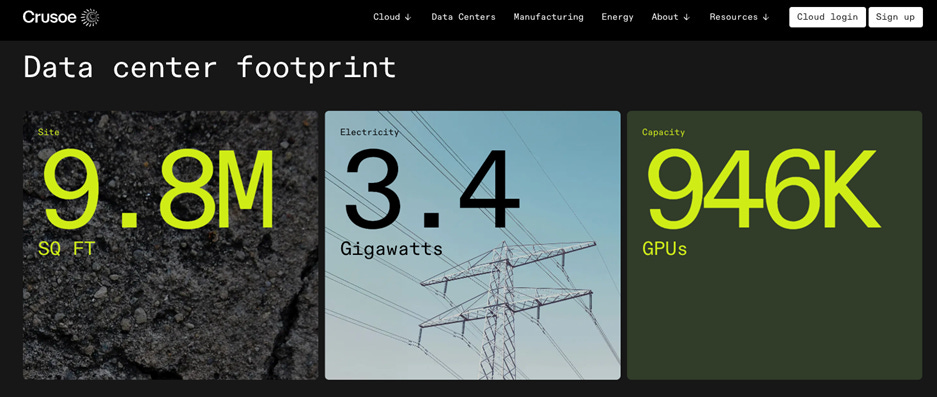

Nebius, Oracle and Azure are the top providers within the Gold tier. Crusoe and new entrant Fluidstack also achieve Gold tier.

Google rises to the top of the Silver tier, alongside AWS, together.ai and Lambda. Many more clouds from all around the world debut at the Bronze or Silver tier, for a total of 37 clouds achieving a medallion rating.

We provide analysis of key trends: Slurm-on-Kubernetes, Virtual Machines or Bare-Metal, Kubernetes for Training, Transition to Blackwell, GB200 NVL72 Reliability and SLA’s, Crypto Miners Here To Stay, Custom Storage Solutions, Cluster-level Networking, Container Escapes, Embargo Programs, Pentesting and Auditing

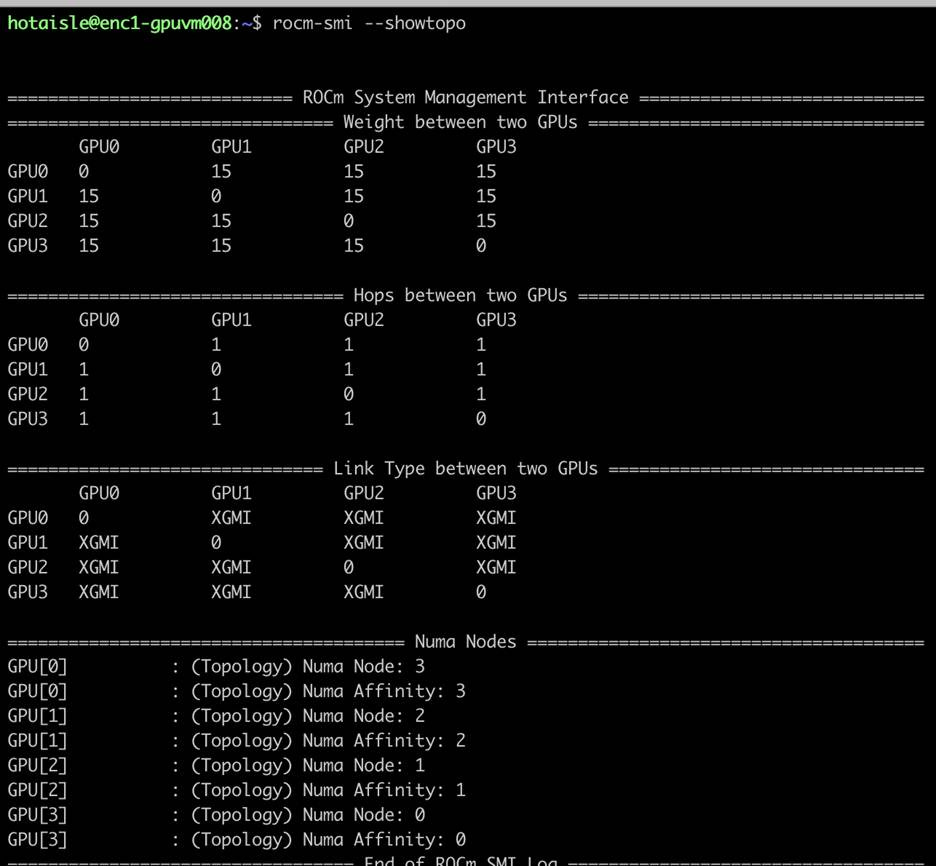

For providers that have deployed both AMD and NVIDIA GPUs, the quality of their AMD cloud offering is much worse than their NVIDIA cloud offering. AMD offerings tend to be missing critical features like detailed monitoring, health checks with automatic remediation, and working SLURM support when compared to NVIDIA.

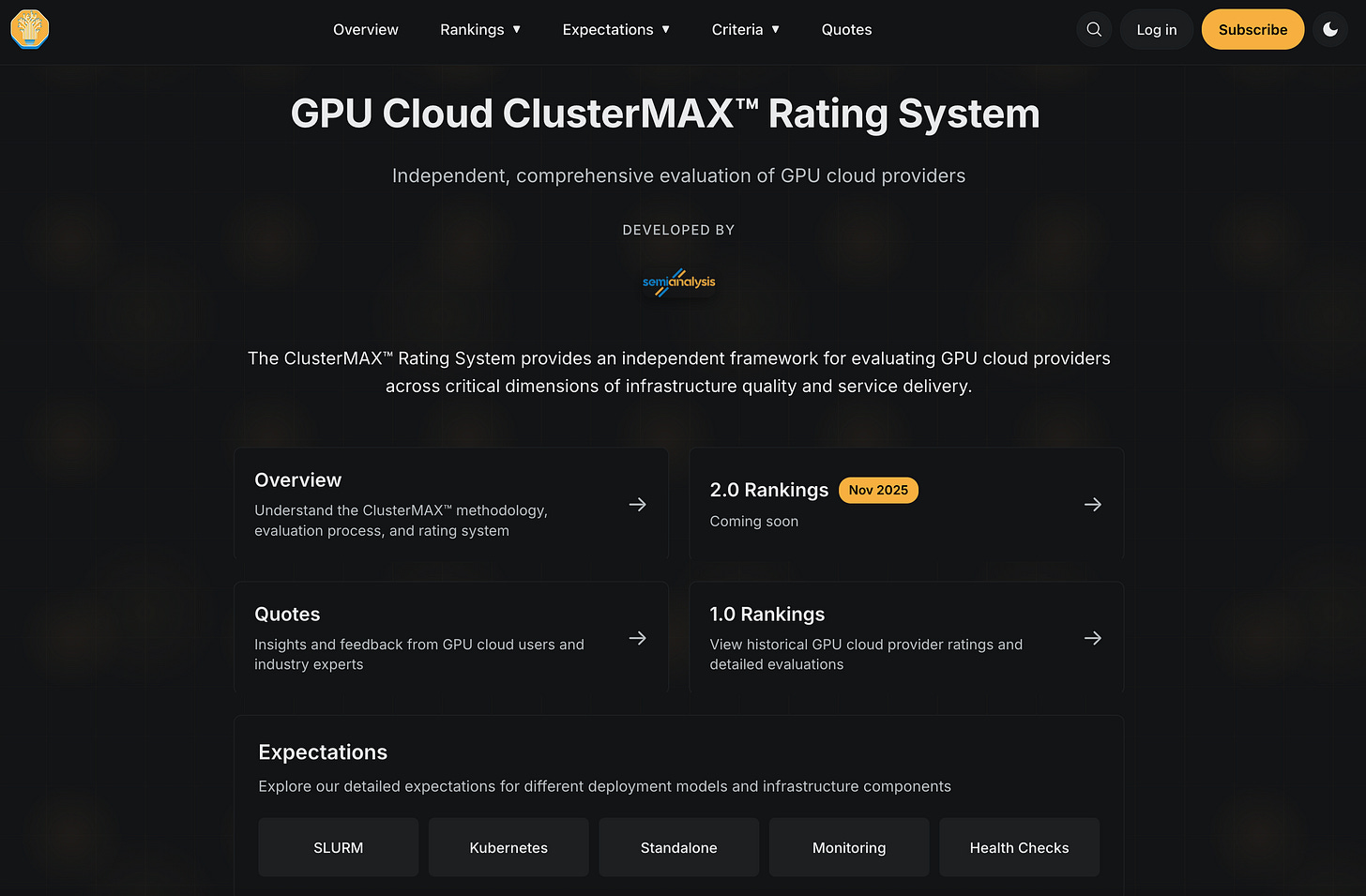

As a companion to this article, we release https://www.clustermax.ai/, a one-stop shop to review our current criteria, expectations, and results moving forward

Before we even get to the results, we’d like to point that since releasing v1.0 in late March, our highest rated Neoclouds have collectively booked nearly $400Bn in Remaining Performance Obligations (RPOs). This further reinforces the idea that our rating system translates well across the ecosystem from technical users to key decision makers looking to contract compute.

Results

ClusterMAX 2.0 debuts with an updated ranking list:

We also release an updated market tracker by type:

Many people have contributed to this research. We thank all ClusterMAX supporters at the leading AI labs, hardware OEMs, investors, startups, industry influencers and more. The impact of ClusterMAX has been immense. For a complete list of quotes from notable members of the community supporting our work, please see https://www.clustermax.ai/quotes

We received two clear pieces of feedback when we released the first version of ClusterMAX:

People who saw only the results table wondered why, exactly, a given cloud had been given a certain rating.

People who read the first article (an estimated 76 minute read with over 20,000 words) wanted even more detailed information on our experience.

For reference, Animal Farm checks in at 29,800 words and the dialogue of the movie “Social Network” about Zuck co-founding Facebook is 34,000 words

This ClusterMAX 2.0 article is over 46,000 words

In this article we attempt to strike the balance between useful summary information and detailed technical information that accurately describes our experience. More information is always available upon request to clustermax@semianalysis.com.

We Are Hiring - ClusterMAX

If you enjoy this article and have a unique technical perspective on Neoclouds, we would love to work with you. Please consider joining us to work on ClusterMAX 2.1, ClusterMAX 3.0 and beyond:

Key Responsibilities

Lead the development of next generation benchmarks and TCO analysis for publication in future versions of ClusterMAX™ and related projects.

Collaborate with executives and engineers from over 203 neoclouds, hyperscalers, marketplaces, and sovereign projects

Establish and maintain partnership with AI Chip manufacturers, startups and OEMs such as NVIDIA, AMD, Intel, etc

Build on existing relationships with leading AI labs, investors, startups, and community members to gauge their experience working with cloud providers and contribute expertise

Author detailed technical research reports analyzing benchmark results, reliability, and ease of use

Stay current on emerging trends and technologies by attending major conferences such as NeurIPS, MLSys, NVIDIA GTC, OCP, SC, HotChips and more. Travel is encouraged but not required

Qualifications

Experience with ML frameworks such as PyTorch or JAX

Experience with GPU or TPU clusters running kubernetes or slurm

Experience with filesystems such as Weka, VAST, Lustre, and GPFS

Experience with interconnects such as InfiniBand and RoCEv2

Experience with ML system benchmarking (GEMMs, nccl-tests, vllm, sglang, mlperf, STAC, HPL, FIO, torchtitan, megatron, etc.)

Experience working at a hyperscaler, neocloud, server OEM, chip manufacturer, or large scale user of these technologies (preferred)

Proactive and capable of working in a global, distributed team

Rating Tiers

Following an evaluation against 10 key criteria (Security, Lifecycle, Orchestration, Storage, Networking, Reliability, Monitoring, Pricing, Partnerships, and Availability) we assign one of five ratings to a given Neocloud (Platinum, Gold, Silver, Bronze, or Underperforming).

It is important to understand that this is a relative rating system. In other words, the quality of the services offered by each Neocloud in each of the ten criteria is assessed relative to their peers. Checking boxes against all of the itemized criteria is a good start, but it is important to note that Gold and Platinum Neoclouds rise to the top by introducing new features and functionality that others do not have, and customers appreciate.

ClusterMAX™ Platinum represents the best GPU cloud providers in the industry. Providers in this category consistently excel across evaluation criteria. Platinum-tier providers are proactive, innovative, and maintain an active feedback loop with their user community to continually raise the bar. In practice, Platinum-tier providers are able to command a pricing premium over their competitors, due to a recognition that the TCO of their offering is better, even if the raw $ per GPU-hr is higher when compared directly to a competitor.

ClusterMAX™ Gold includes strong performance across all evaluation categories, with some opportunities for improvement. Gold-tier providers may have small gaps or inconsistencies in the user experience, but generally demonstrate responsiveness to feedback and a commitment to improvement. Gold-tier providers are a great choice, and generally win deals, especially if they have the best price per GPU-hr and the cluster is available on the customer’s expected timeline.

ClusterMAX™ Silver is an adequate GPU cloud offering but may have noticeable gaps compared to gold or platinum-tier providers. Some users will not consider an offering from a silver tier provider due to these gaps, regardless of an attractive price per GPU-hr and good availability. In general, silver-tier GPU clouds have room for improvement and growth, and we encourage them to adopt industry best practices in order to catch up to their peers.

ClusterMAX™ Bronze includes all GPU cloud providers that fulfill our minimum criteria, and is the last of the providers that we directly recommend to anyone, in any circumstance. Common issues may include inconsistent support, subpar networking performance, unclear SLAs, limited integration with popular tools like Kubernetes or Slurm, or less competitive pricing. Providers in this category need considerable improvements to enhance reliability and customer experience. Some of these providers in this category are already making considerable effort to catch up and we look forward to testing with them again soon.

Not Recommended is our last category, and the name speaks for itself. Providers in this category fail to meet our basic requirements, across one or more criteria. It is important to note that this is a broad category, so in the body of the article we describe what exactly each cloud is missing. The Not Recommended list is further broken down into two categories:

Underperforming – based on our hands-on testing, we believe that providers in this category can quickly rise to bronze or even silver if they fix one or more critical issues. Examples of critical failures include but are not limited to: no modern GPUs available (i.e. only A100, MI250X, or RTX 3090, since we expect at least H100 to be available), not having basic security attestation completed (e.g. SOC 2 Type I/II, or ISO 27001), mistakenly disabling key server features (i.e not disabling ACS, or not enabling GPUDirect RDMA), or conducting offensive business practices such as charging users for GPU hours while a cluster is being created or while servers are down due to hardware failure.

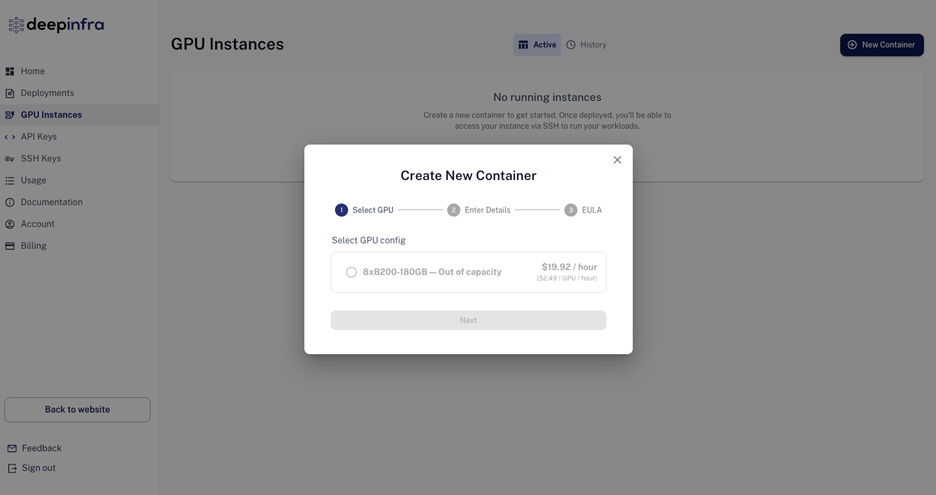

Unavailable – providers in this category have an interesting service that we are excited about, but have no way to verify as it is not available to the public or for us to test. Examples include providers that have not launched their service yet (despite marketing material that would have you thinking otherwise), providers that are completely sold-out with no plans to add new capacity, providers that exclusively serve secure government customers, or other reasons. We are excited about many of the providers in this category but maintain a “trust but verify” approach to our testing and keep them in this category until we can complete hands-on testing.

Rating Criteria

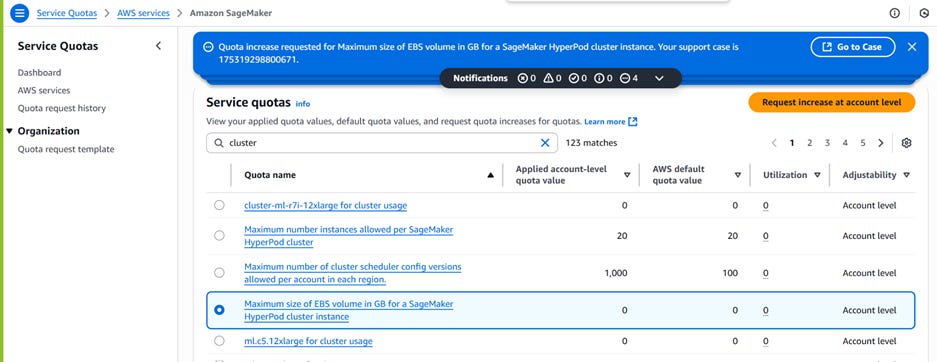

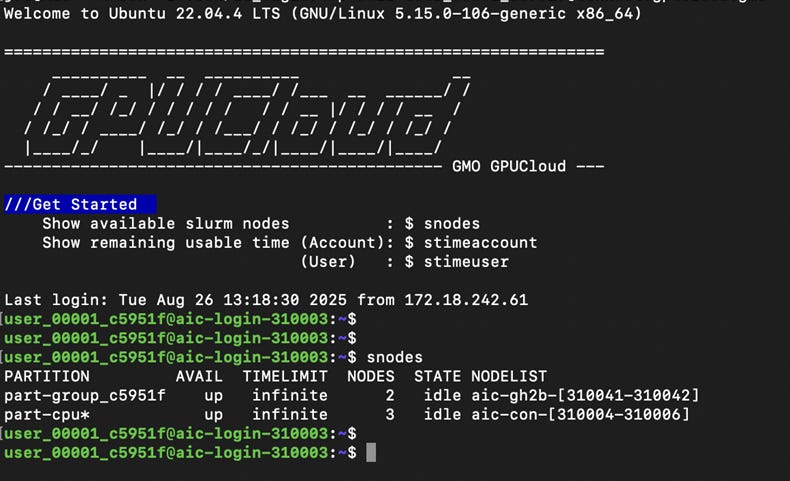

To provide a more detailed description of how we compare Neoclouds across 10 key criteria, we present an itemized list of the testing criteria that we used in ClusterMAX 2.0. Notably, this list was sent to all GPU cloud providers where we have working relations on August 6th. We believe that many clouds delayed cluster handover for weeks following, and rushed to create or modify services to meet our expectations. For example, we had cloud providers that had never installed slurm before try to install it for the first time about a week before handing over a cluster to us. We had others attempt to enable RDMA-capable networks on kubernetes for the first time. And we had even more patching critical security vulnerabilities in their services that they had just learned about from our outreach.

While we appreciate the effort, and believe it is good for the ecosystem to raise the bar, we have penalized clouds that exhibited significant delays during cluster handover. And, via interviews with 140+ cloud users during this effort, we have done our best to assess what is “current state” vs “future state”. In other words, of the services that we are testing, what is public, general availability (GA), and used by customers, vs what is still planned and not rolled out yet.

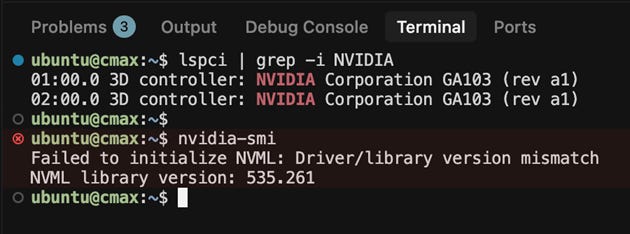

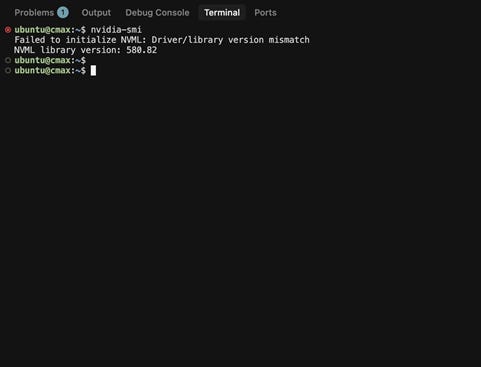

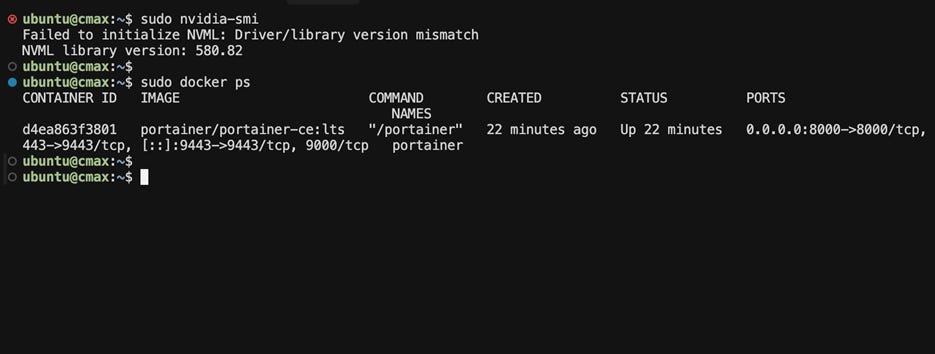

We conduct this research in an attempt to simplify, for users, an assessment of whether or not the slurm, kubernetes, or standalone machine is properly configured and will meet their expectations. We have heard from many users about the process of “giving back” a cluster, or even playing “Russian Roulette” with certain marketplaces that offer access to a single machine. In these cases, issues with reliability of the GPU drivers, GPU server hardware, backend interconnect network, shared storage mounts, internet connection, and more can cause users to lose faith in a provider, and churn out. While we can’t measure reliability at scale over time, we do assess what we can in a ~5 day long test.

For those curious, a full, live, and regularly updated list of itemized criteria is available on the ClusterMAX website at https://www.clustermax.ai/criteria. For users of Neoclouds, we encourage outreach regarding criteria you would like to see represented in this list. In particular we want to thank Mark Saroufim of GPU MODE for his contribution regarding the Nvidia Nsight Compute profiling available for users to profile GPU kernels, without sudo privilege required on the compute nodes. More detail available here: https://clustermax.ai/monitoring#performance-monitoring

Our itemized list looked like this when it went out on August 6th:

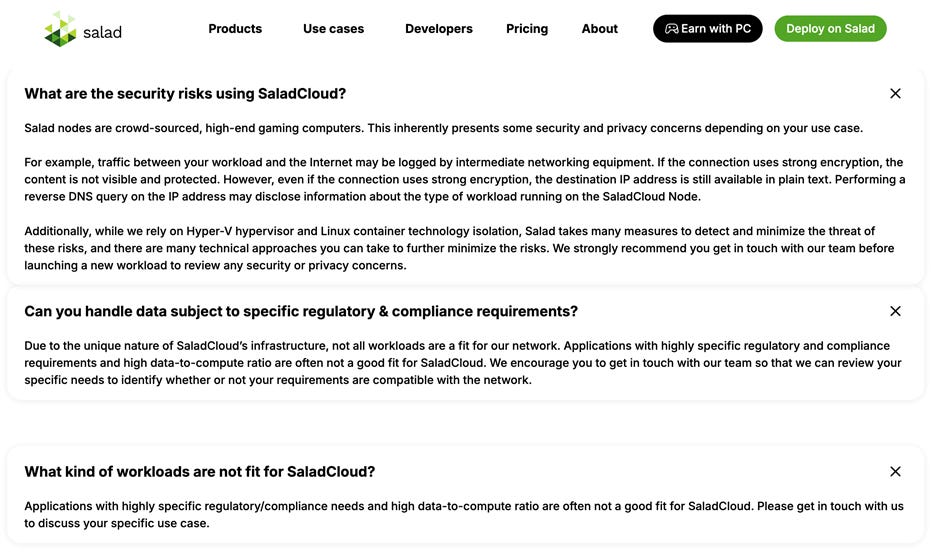

Security

Do you have relevant attestation in place from a third-party auditor that your company processes meet basic standards? (SOC2 Type 1, ISO 27001, etc.)

Do you have more specific compliance in place for global customers (i.e.can you sell services globally?)

Is your backend network setup securely for multi-tenant users (InfiniBand Pkeys, VLANs)

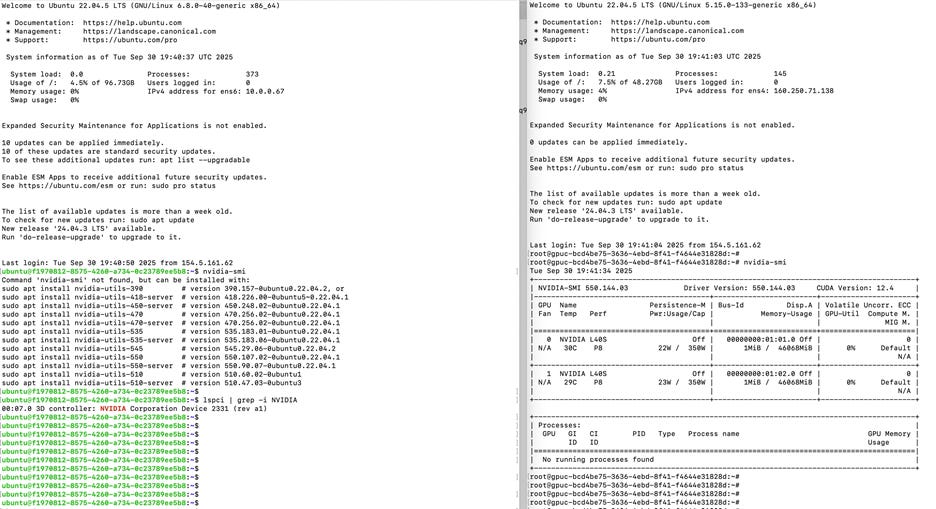

Are drivers and firmware up to date, and what is the process for notification and patching of future vulnerabilities?

Lifecycle

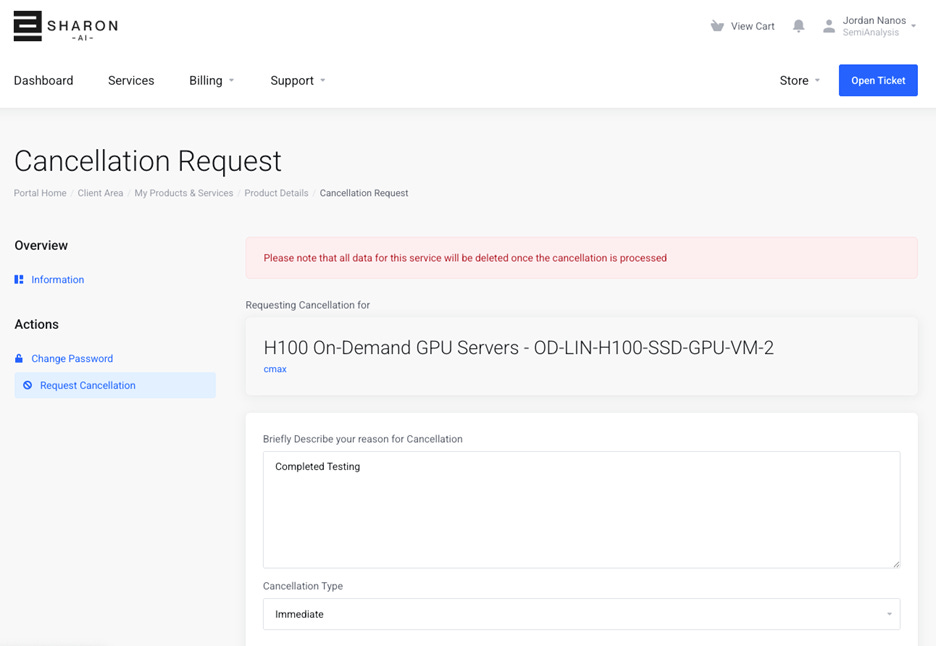

Is it easy to onboard and offboard to the service, are there hidden costs?

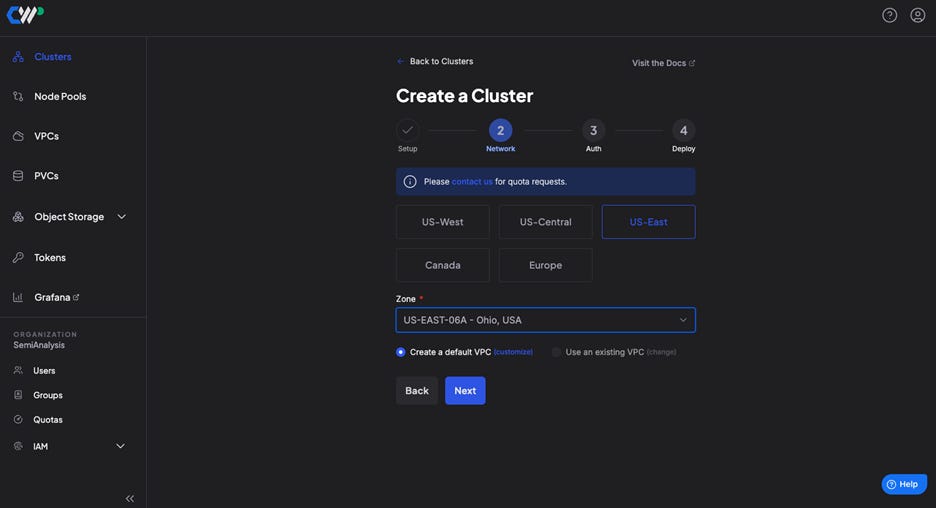

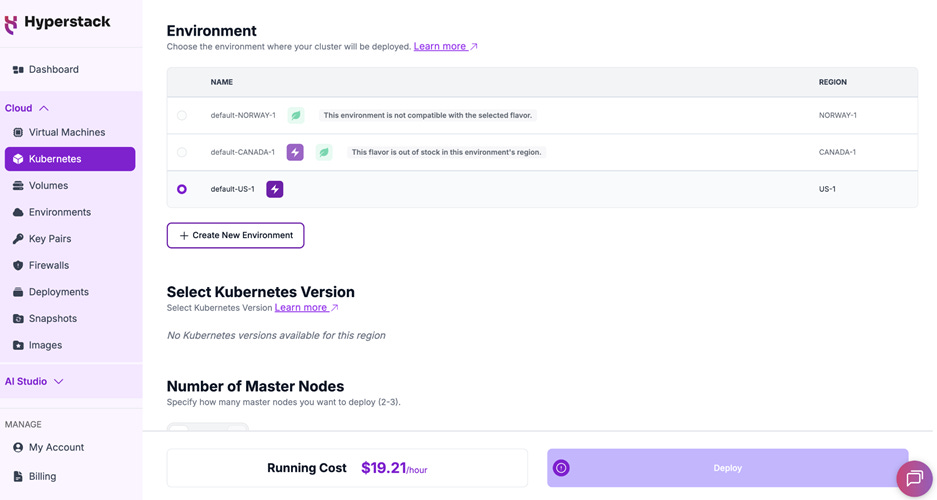

How easy is it to create a cluster?

Is it easy to expand a cluster over time?

Is it easy to use the cluster? (i.e. download speed, upload speed)

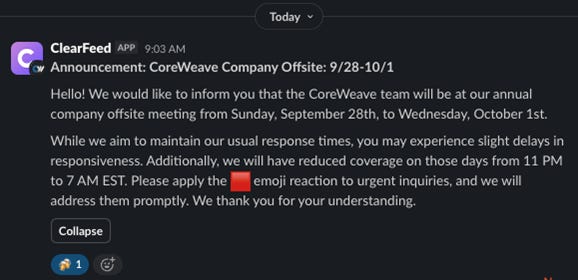

How good is the support experience?

Orchestration

Is the cluster setup properly with reasonable defaults? (OS version, sudo, ssh, basic packages pre-installed like git, vim, nano, python, docker)

How easy is it to add/remove users, groups and permissions?

Can you enforce RBAC on the cluster’s compute and storage resources

Integration with external IAM provider for SSO

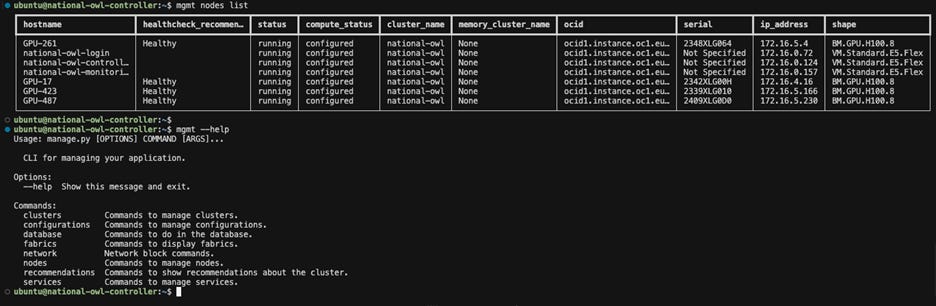

For SLURM: modules, pyxis/enroot, hpcx/mpi, nvcc, nccl, topology.conf set, dcgmi health -c, LBNL node healthcheck or equivalent, prolog/epilog, GPU Direct RDMA all configured correctly

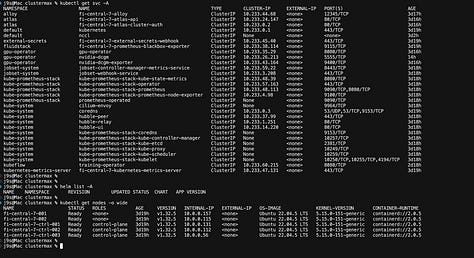

For Kubernetes: easy to download and use kubeconfig, cni configured, arbitrary helm charts, GPUOperator and NetworkOperator able to request resources easily, default ReadWriteMany StorageClass available, metallb or external LoadBalancer, node-problem-detector (or equivalent), all configured correctly

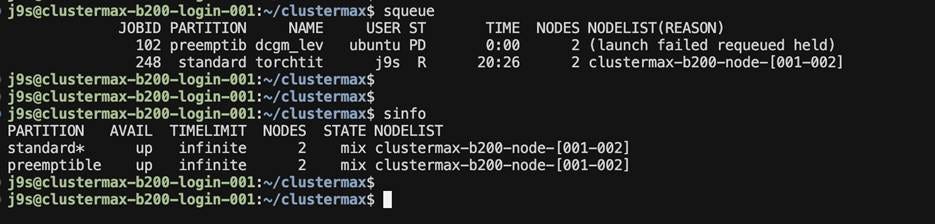

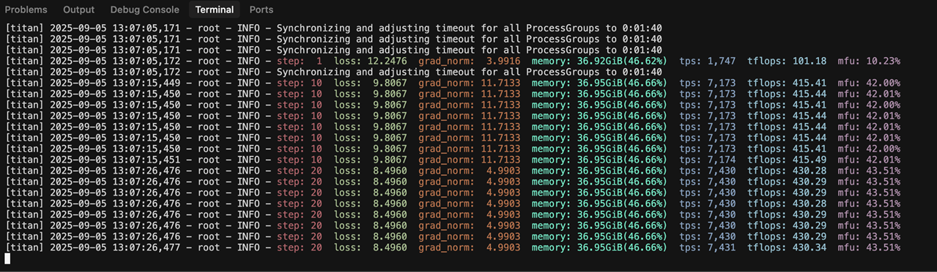

On both slurm and kubernetes: nccl-tests or rccl-tests runs at full expected bandwidth, multinode torchtitan training job runs at expected MFU (via pytorchjob, jobset, volcano batch or other equivalent CRD for k8s training)

For kubernetes only: multi-node p-d disagg serving with llm-d works at expected throughput

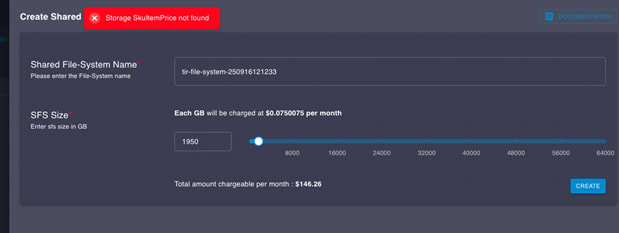

Storage

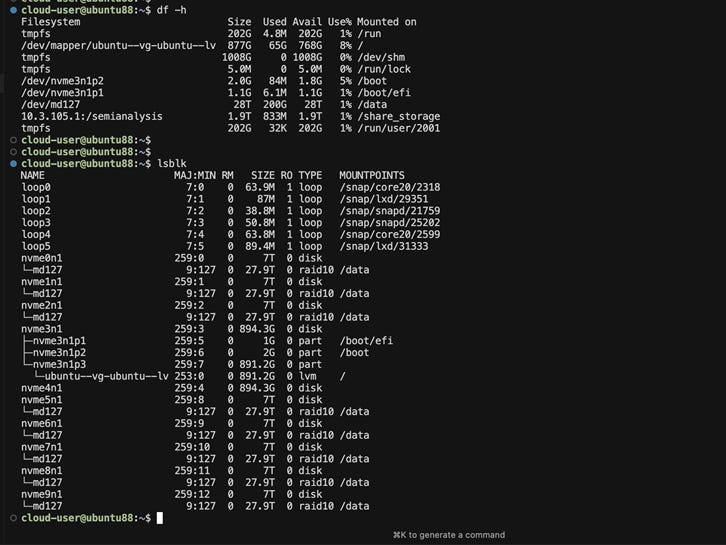

POSIX-compliant filesystem available (e.g.Weka, VAST, DDN)

S3-compatible object storage available (i..easy to use AWS S3, Azure Blob, GCS, R2, CAIOS, Scality)

Mounts available for /home and /data (or equivalent) on slurm, default RWM SC on k8s

Local drives or distributed local fs available for caching on /lvol (or equivalent)

Storage is scalable and performant

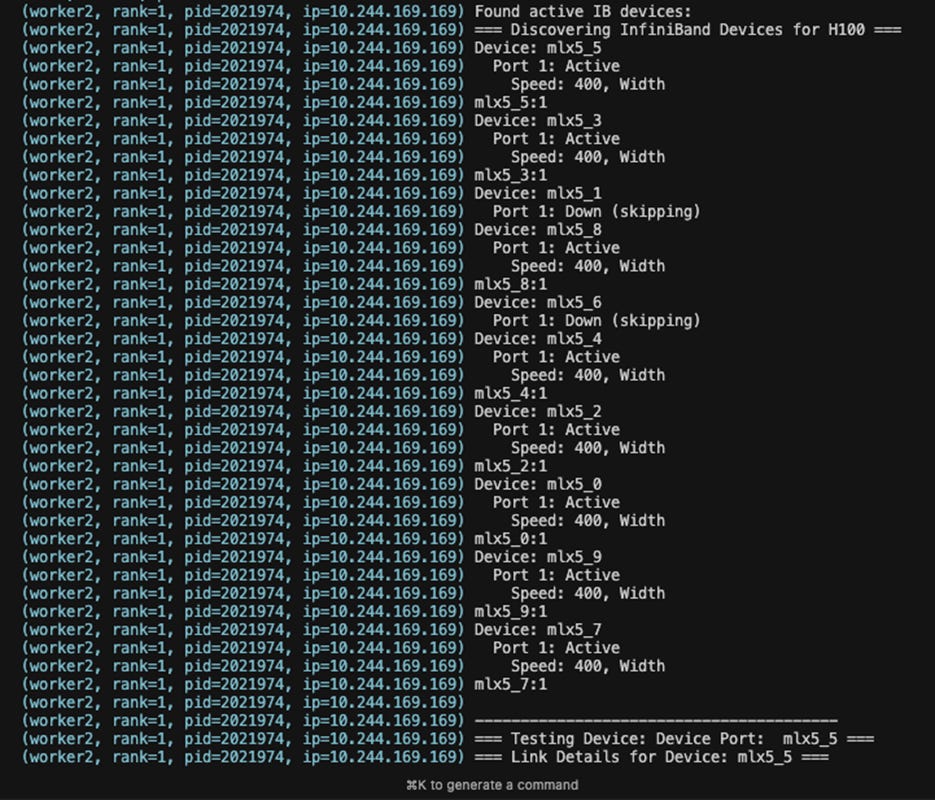

Networking

InifiniBand or RoCEv2 available

MPI implementation available (i.e.hpc-x available via default mpirun)

Default NCCL configuration in /etc/nccl.conf is reasonable

nccl-tests or rccl-tests or stas all_reduce_benchmark.py runs at full bandwidth

multinode torchtitan training job runs at expected MFU

SHARP support for improved nccl performance at scale

NCCL monitoring plugin available

NCCL straggler detection available

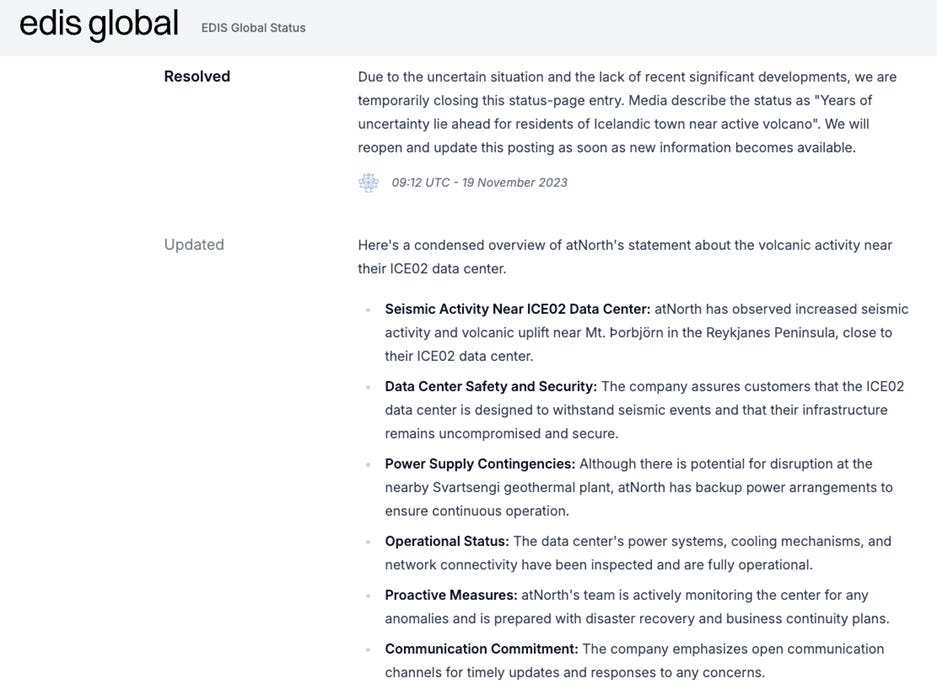

Reliability

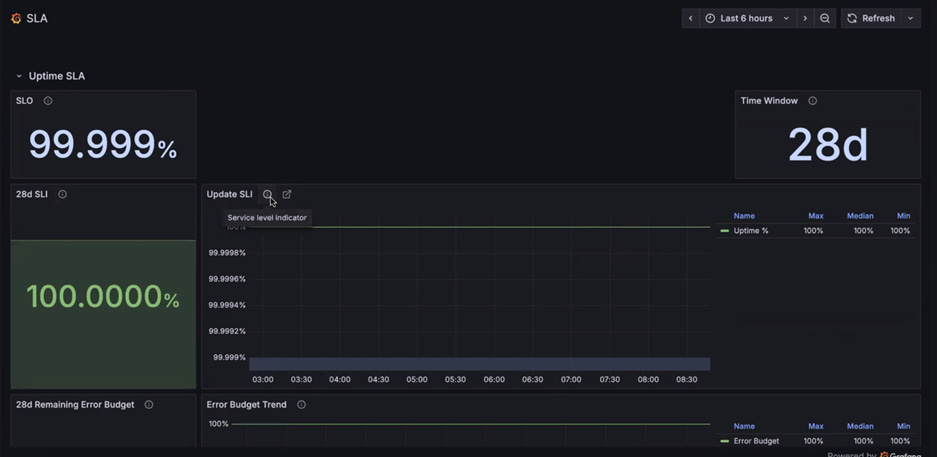

Hardware uptime SLA is available and reasonable (i.e.99.9% on compute nodes, 99% on racks)

24x7 support is available, 15-minute response SLA

No link flapping on the interconnect network

No filesystems unmounting randomly

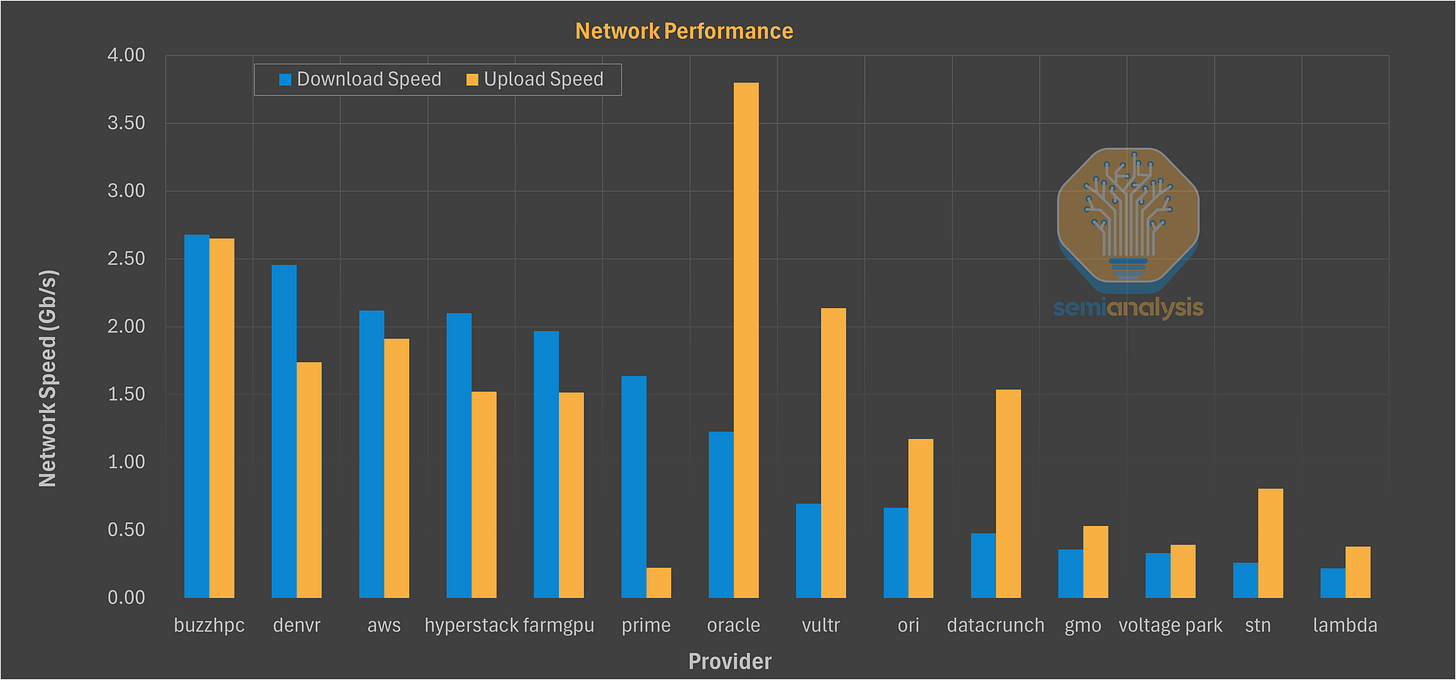

WAN connection is stable over time, upload/download speed is reasonable

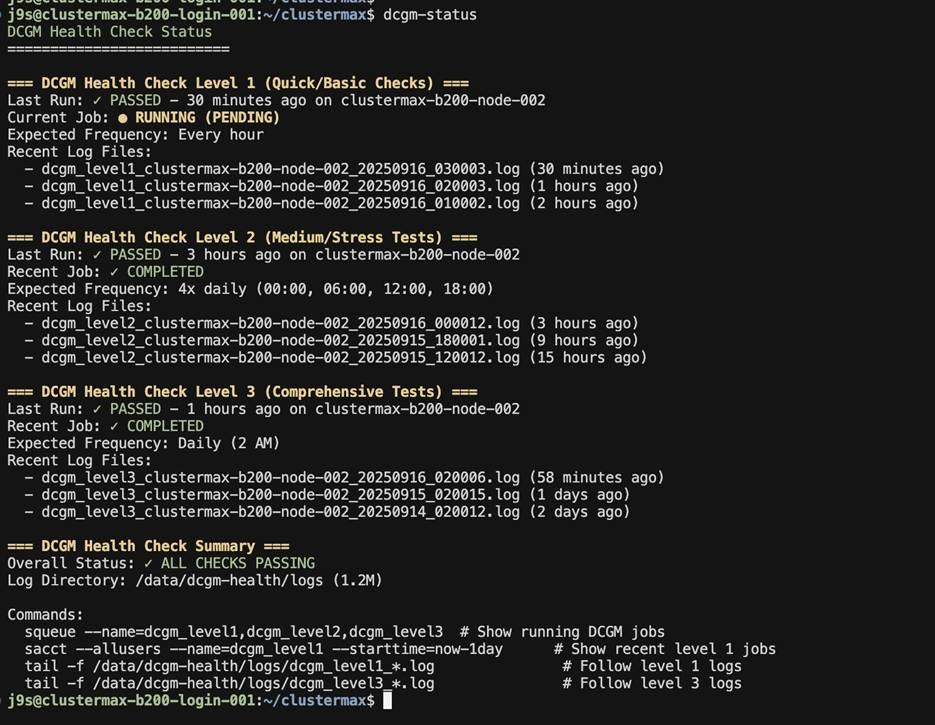

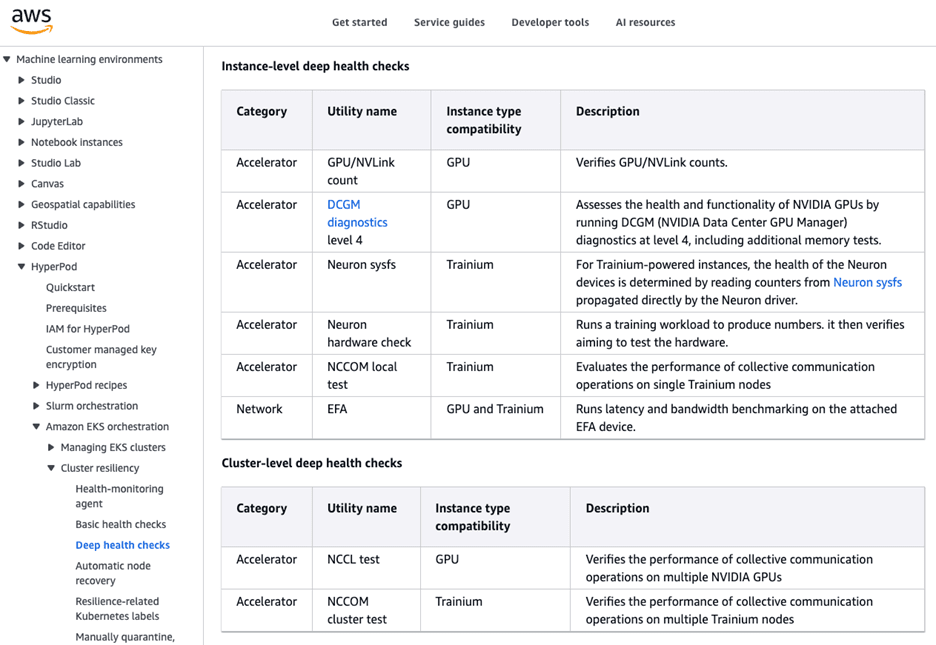

Full suite of Passive Health Checks

https://docs.nvidia.com/datacenter/dcgm/latest/user-guide/feature-overview.html#background-health-checks

Detect and drain when: GPUs fall of the bus, PCIe errors, IB or RoCEv2 events/link flaps, thermals out of range, ECC errros on GPU or CPU memory, XID or SXID’s, NCCL/RCCL stalls

Full suite of Active Health Checks

Lightweight test suites run in prolog/epilog or hourly/daily in a low-priority partition on idle nodes

Aggressive test suite runs weekly on idle nodes

DCGM level 1, 2, 3 with EUD, DtoH and HtoD bandwidth, local NCCL test, local IB test, pairwise GPU and CPU ib_write_bw, ib_write_latency, GPUBurn or GPU Fryer, NVIDIA TinyMeg2, UberGEMM, multinode megatron or torchtitan job matches reference numbers

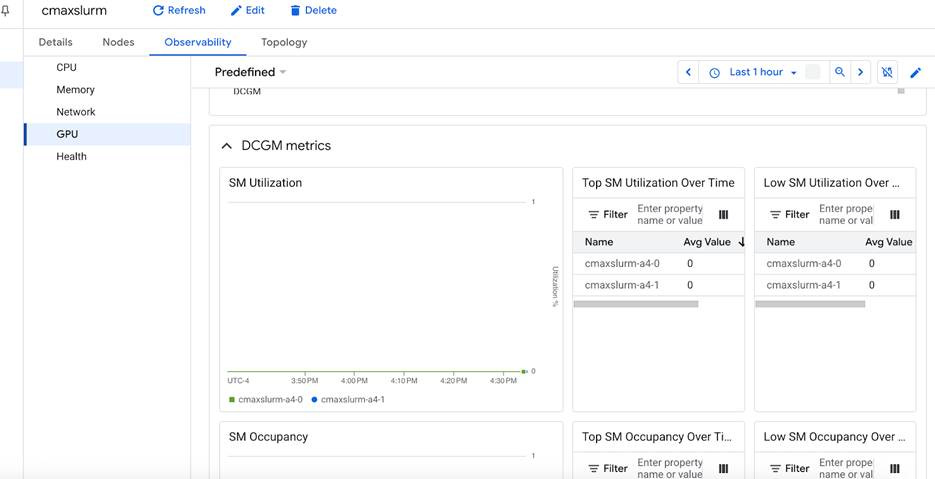

Monitoring

Grafana or an equivalent dashboard is pre-installed and accessible with high-level and low-level view of cluster information

Easy to configure custom alerting

SLURM integration for job stats, resource usage, summaries (sacct)

Kubernetes integration (kube-state-metrics, node-exporter, dcgm-exporter, cAdvisor)

DCGM information available

SM Active monitoring via DCGM_FI_PROF_SM_ACTIVE

SM Occupancy monitoring via DCGM_FI_PROF_SM_OCCUPANCY

TFLOPs estimation via DCGM_FI_PROF_PIPE_TENSOR_ACTIVE

PCIe AER rates monitoring via DCGM_FI_DEV_PCIE_REPLAY_COUNTER

GPU and CPU memory errors via DCGM_FI_DEV_ECC_SBE_VOL_TOTAL

Node level health status: power draw, fan speed, temperatures (CPU, RAM, NICs, trasceivers, etc.)

PCIe, NVLink or XGMI, InfiniBand/RoCEv2 throughput

dmesg logs (i.e.promtail)

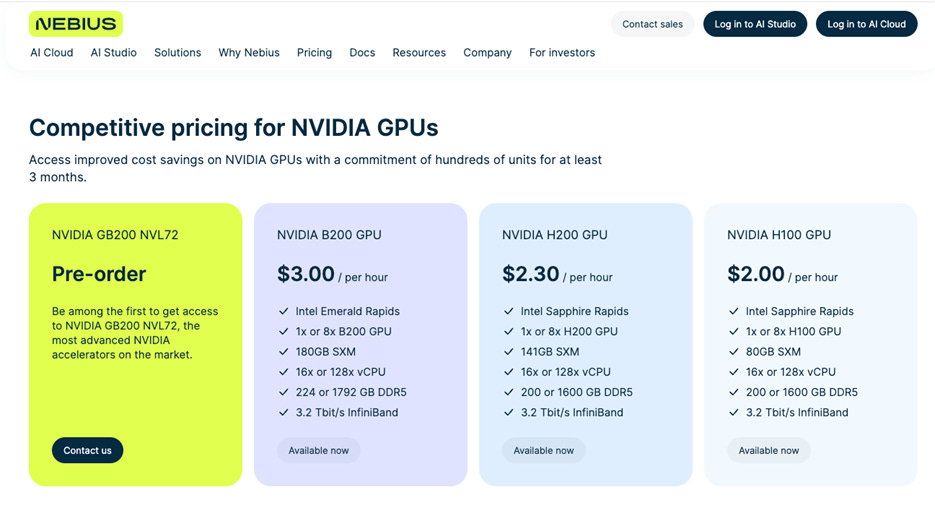

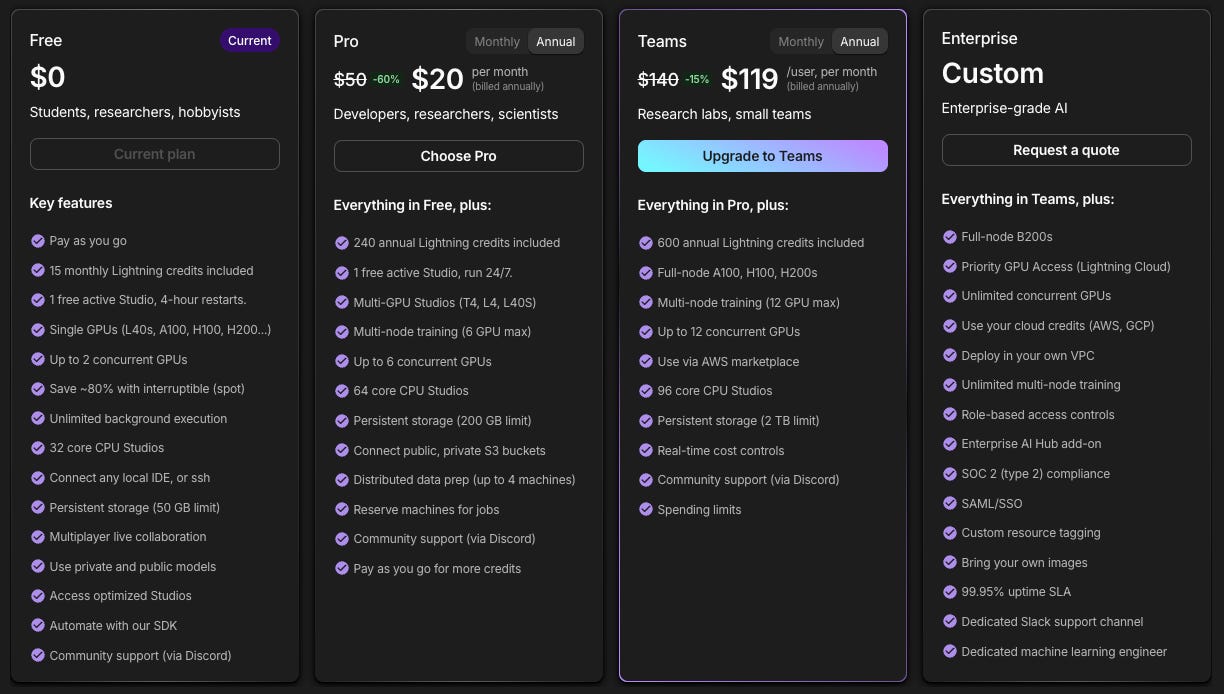

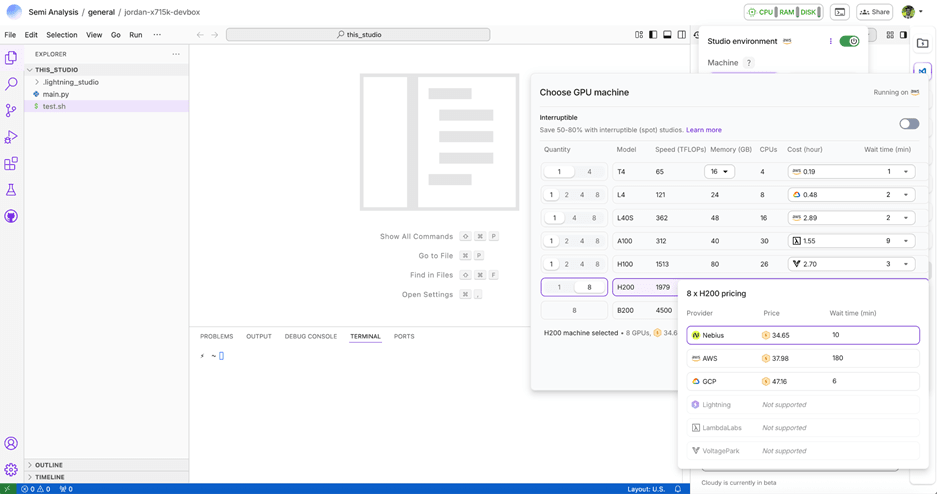

Pricing

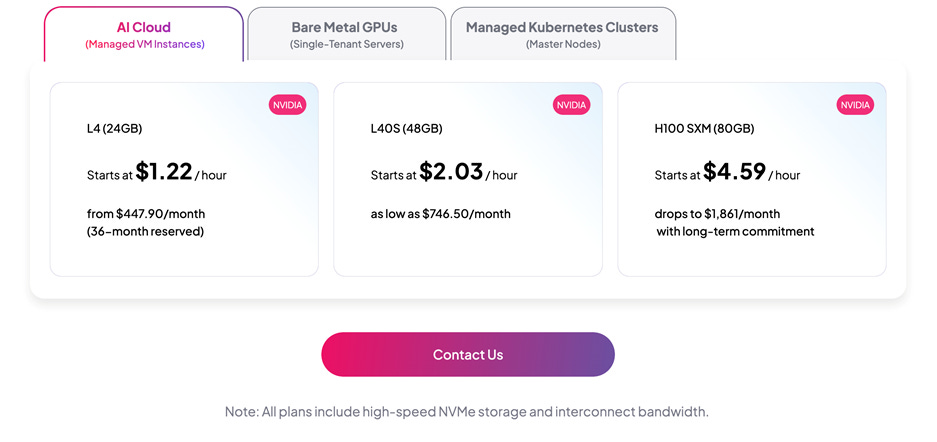

Generally, lower prices per GPU-hr are better for end users, assuming the quality does not change

Consumption models (1 month, 3 month, 6 month, 1 year, 2 year, 3 year)

Individual charges for storage, compute nodes, network, etc.or bundled

Expansion and extension of existing contracts

Partnerships

AMD or NVIDIA investment

NVIDIA NCP certification

NVIDIA exemplar cloud performance certification

AMD Cloud Alliance status

Knowledge of security updates (e.g., follow Wiz)

SchedMD partnership (makers of SLURM)

Participation in industry events, ecosystem support

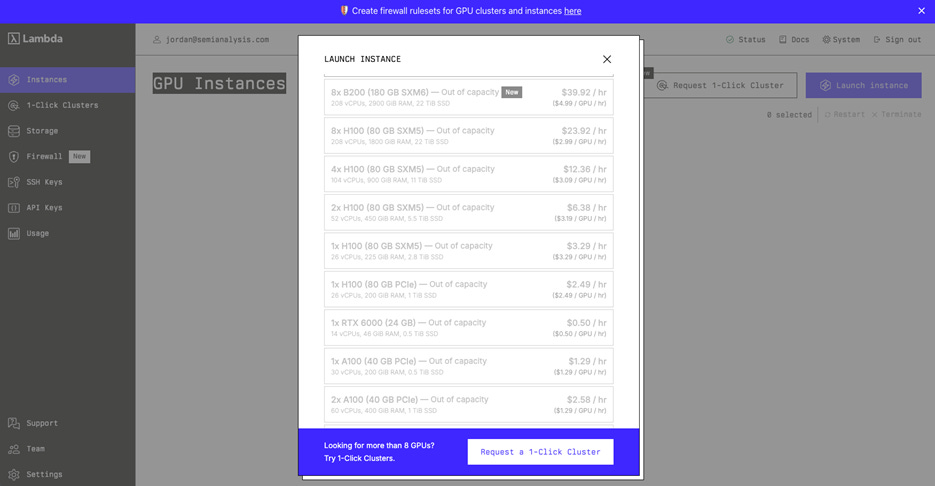

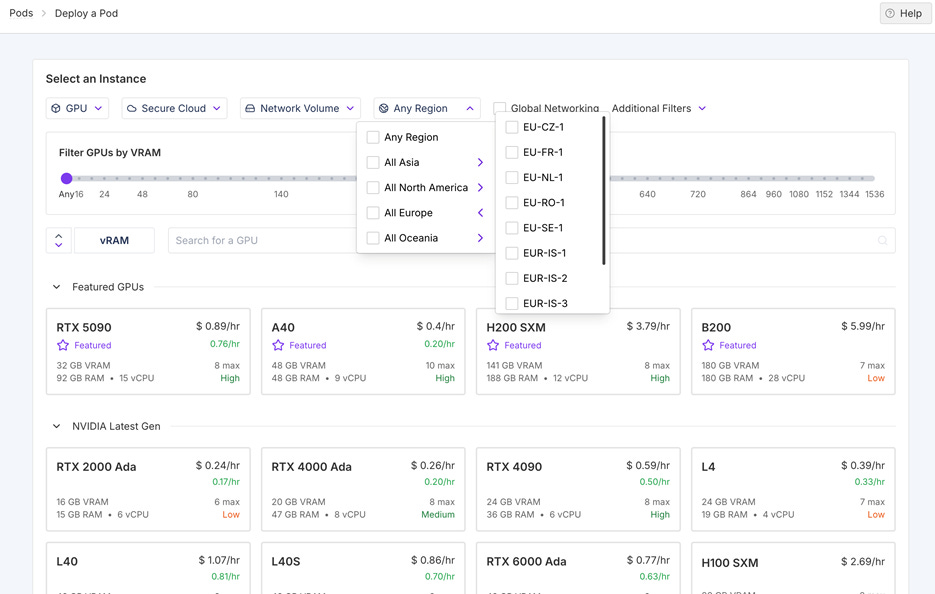

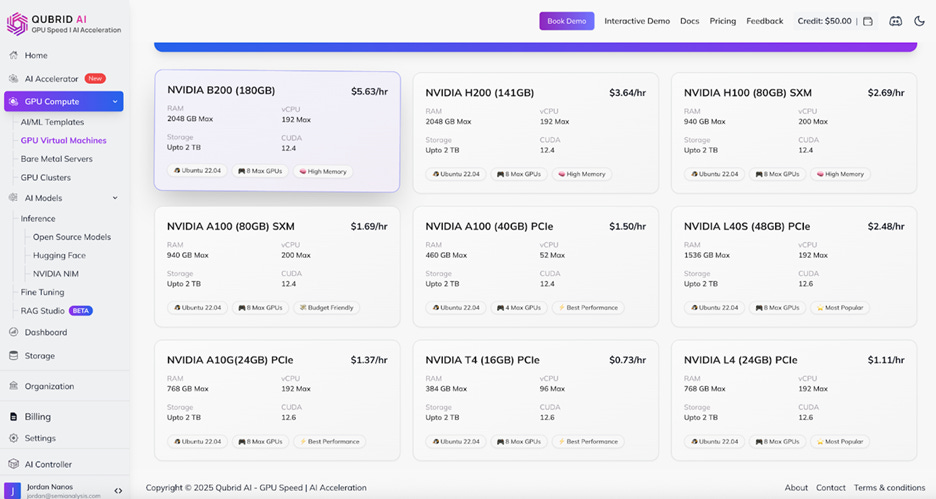

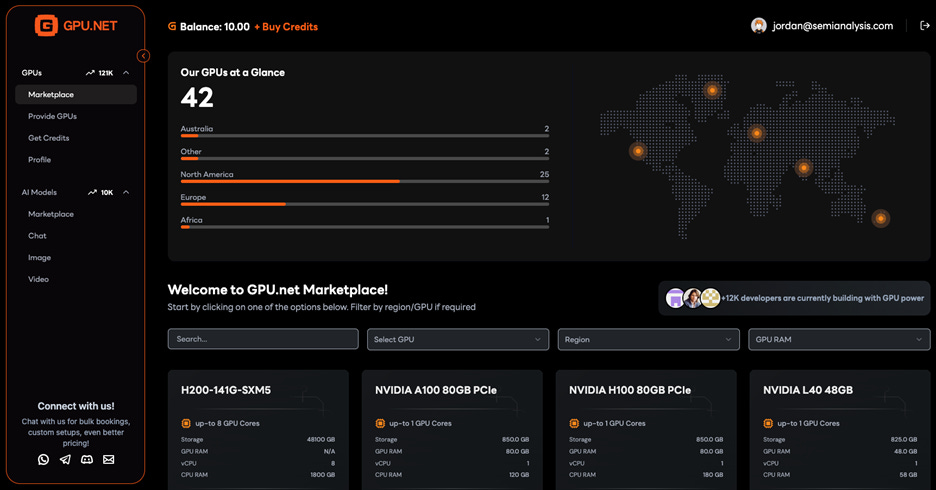

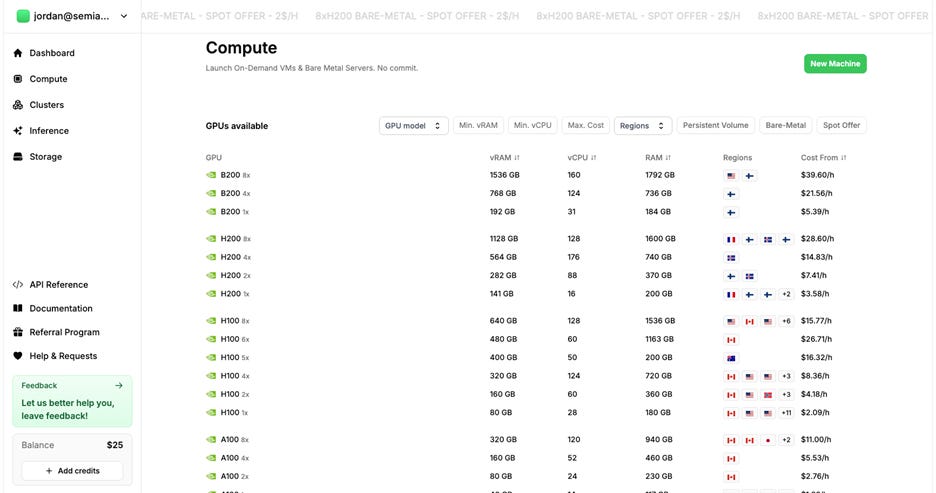

Availability

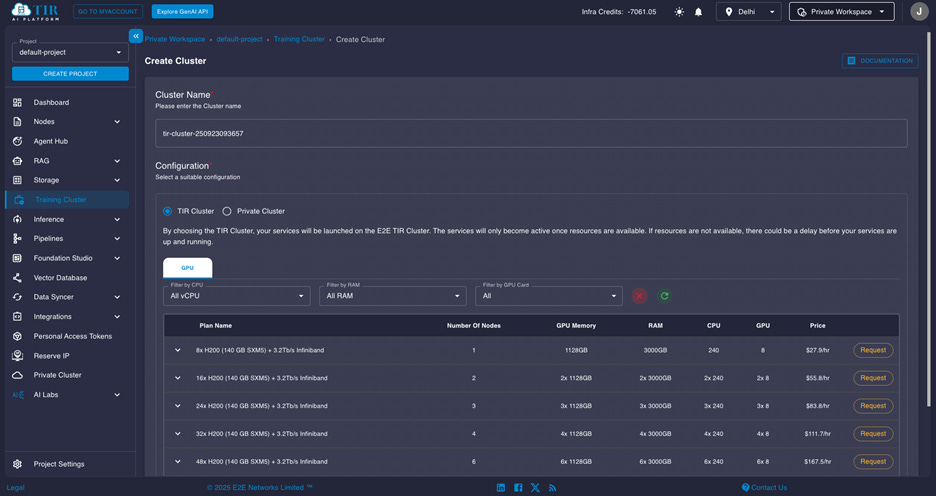

Total GPU Quantity and Cluster Scale Experience

On-demand availability, utilization, capacity blocks

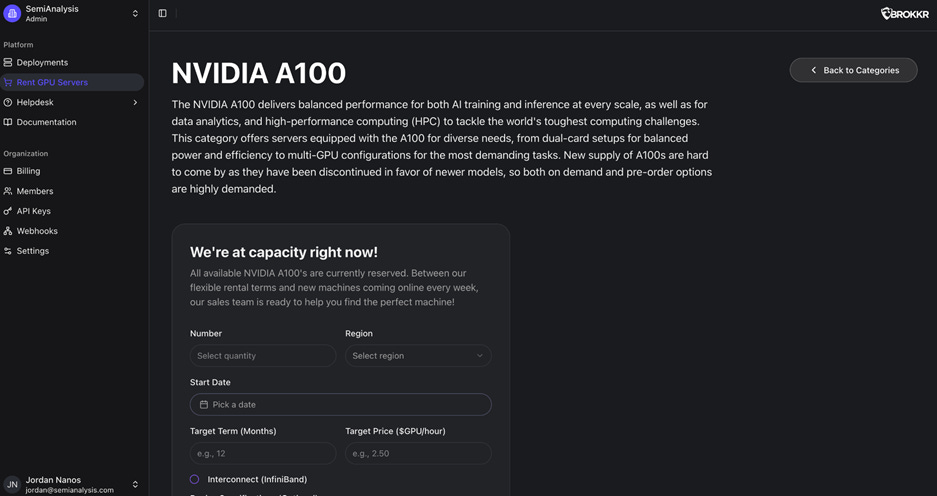

Latest GPU Models Available (H100, H200, B200, MI300X, MI325X, MI355X)

Roadmap for Future GPUs (B300, GB200 NVL72, GB300 NVL72, VR, MI400, etc.)

Many notable improvements have already been made to this list since our testing window closed on September 15th, 2025. In order to accommodate new additions to the rating system, we will be accepting anyone attempting to move onto the leaderboard and achieve a ClusterMAX medallion status in the coming weeks. To achieve this, we will progressively release minor versions, i.e. ClusterMAX 2.1, without re-testing all providers.

When a notable change in the industry occurs, say when rack-scale systems such as GB200, GB300, VR200 and MI450X gain widespread market adoption we will move to a major version upgrade, i.e.ClusterMAX 3.0. We expect this to be every ~6 months.

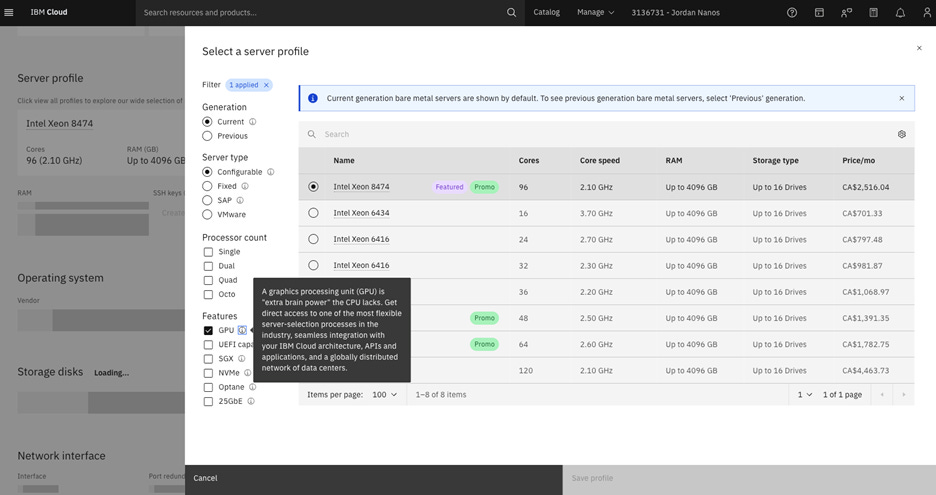

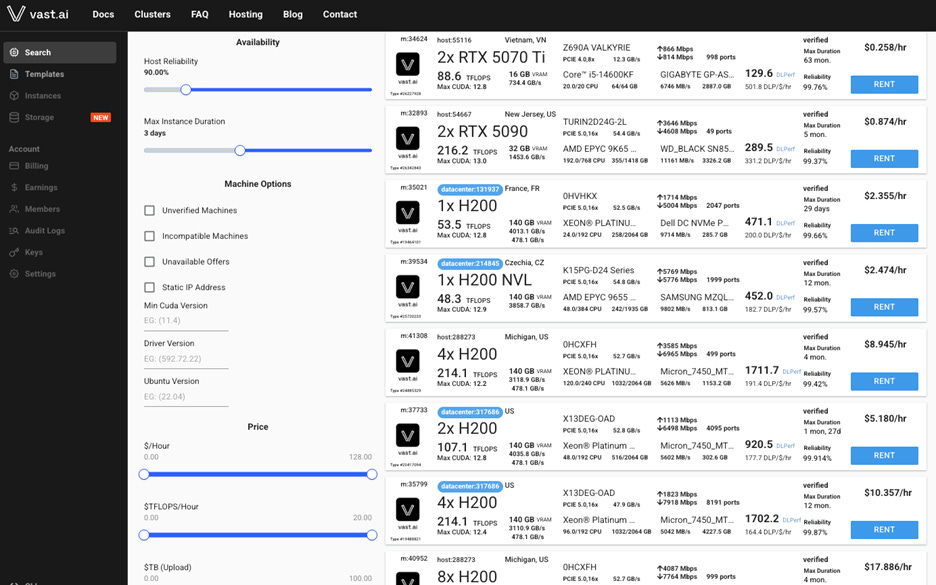

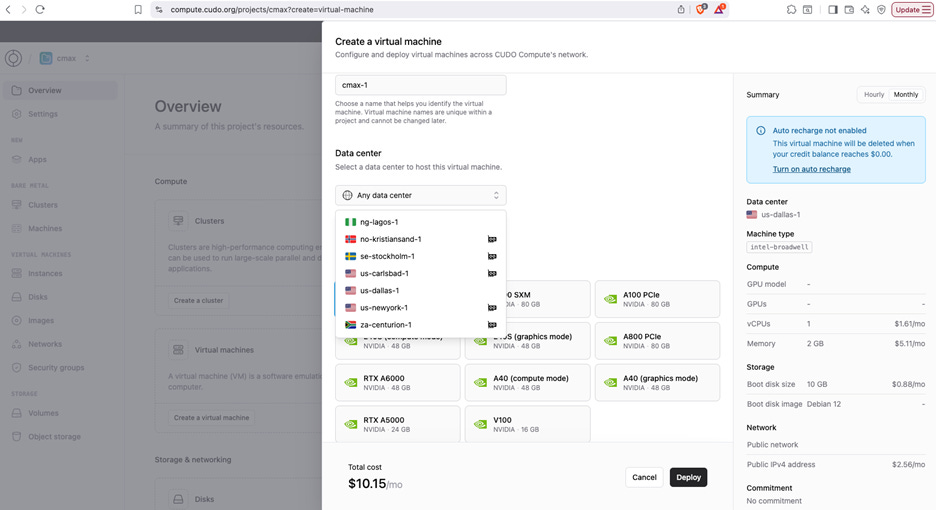

Example Tests

Many industry analysts and financial speculators believe that GPU compute has turned into a commodity, easily compared by the price per GPU-hr. We think that price is just one of many criteria that buyers consider when committing to a cluster, or even a virtual machine.

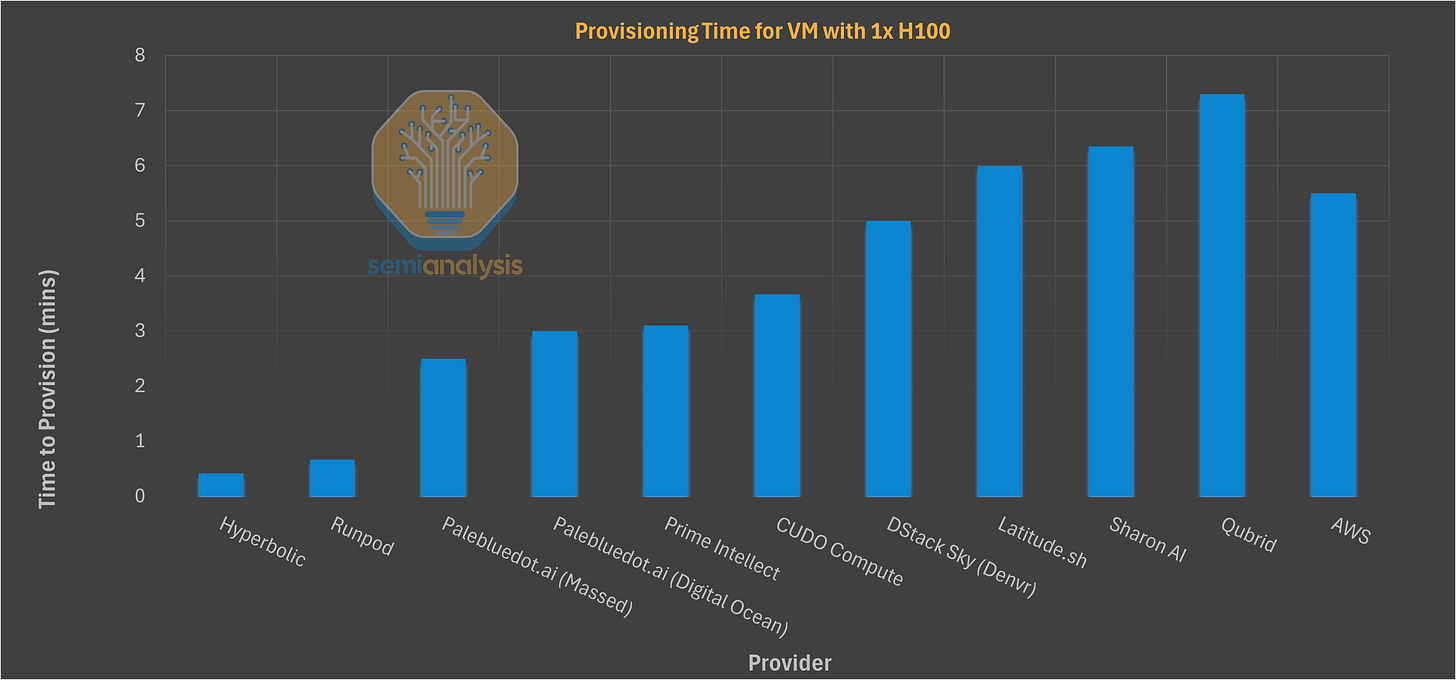

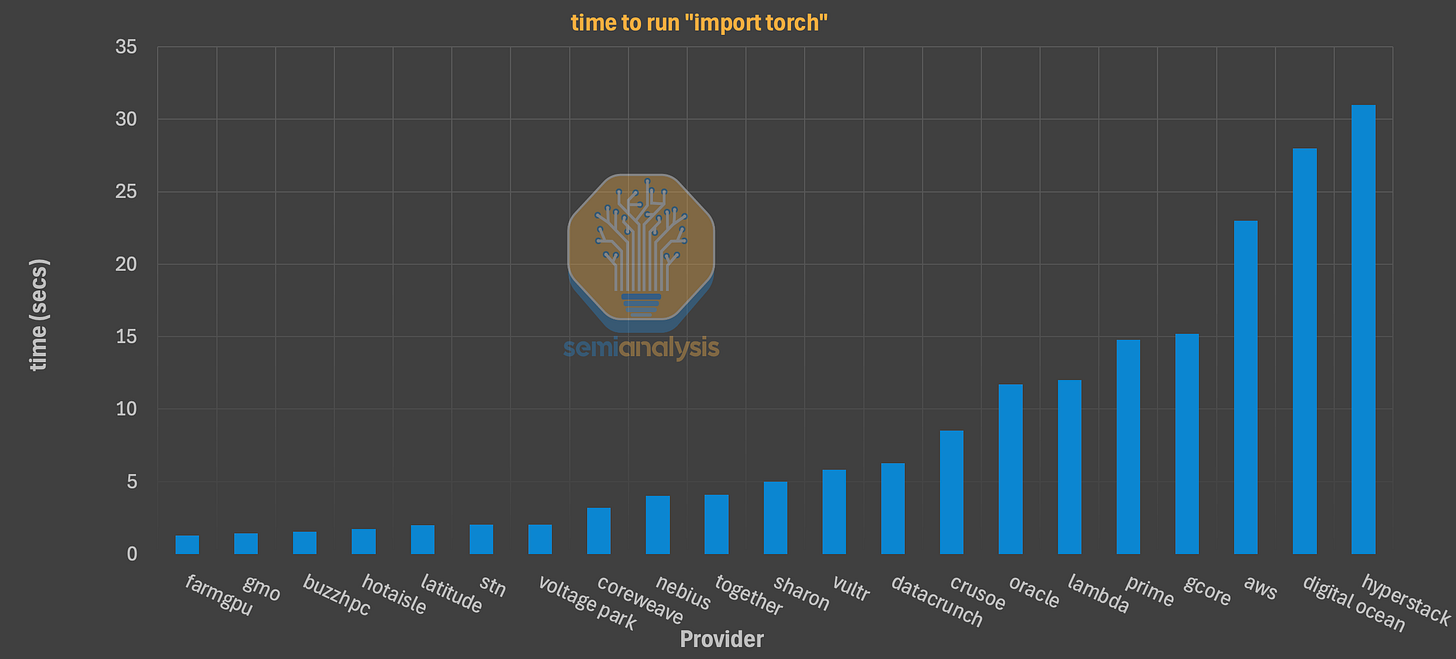

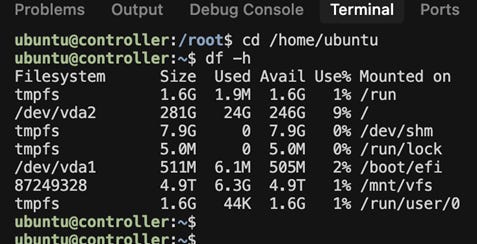

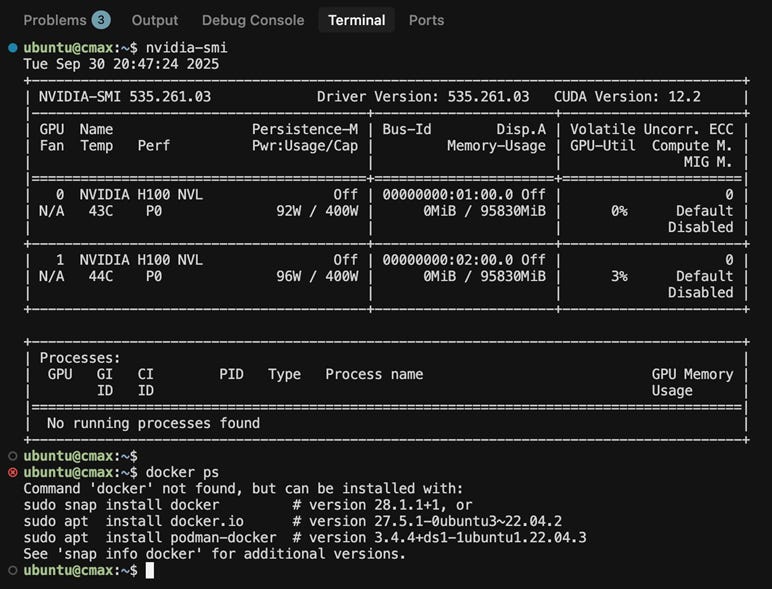

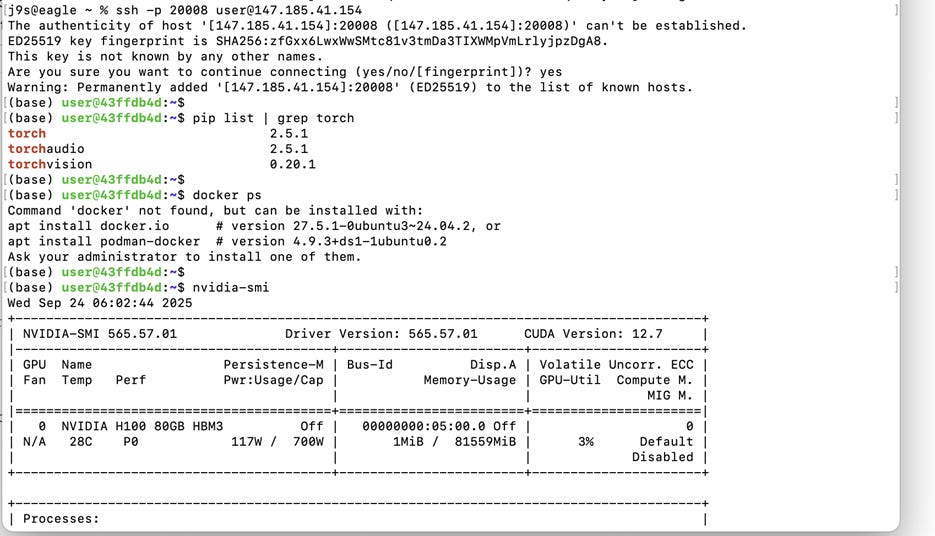

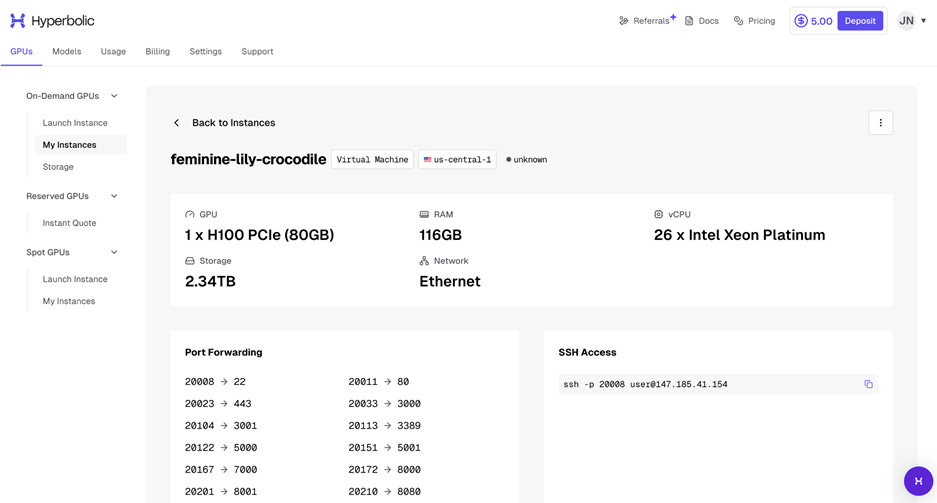

These simple tests generally complete in under one minute. We treat them as a proxy to reveal underlying issues with network configuration, storage performance, peering, and virtualization overhead. A provider that fails these basic setup tasks will almost certainly fail at running a multi-week, multi-node training job. Below are some direct comparisons for useful information that compare GPU clouds directly:

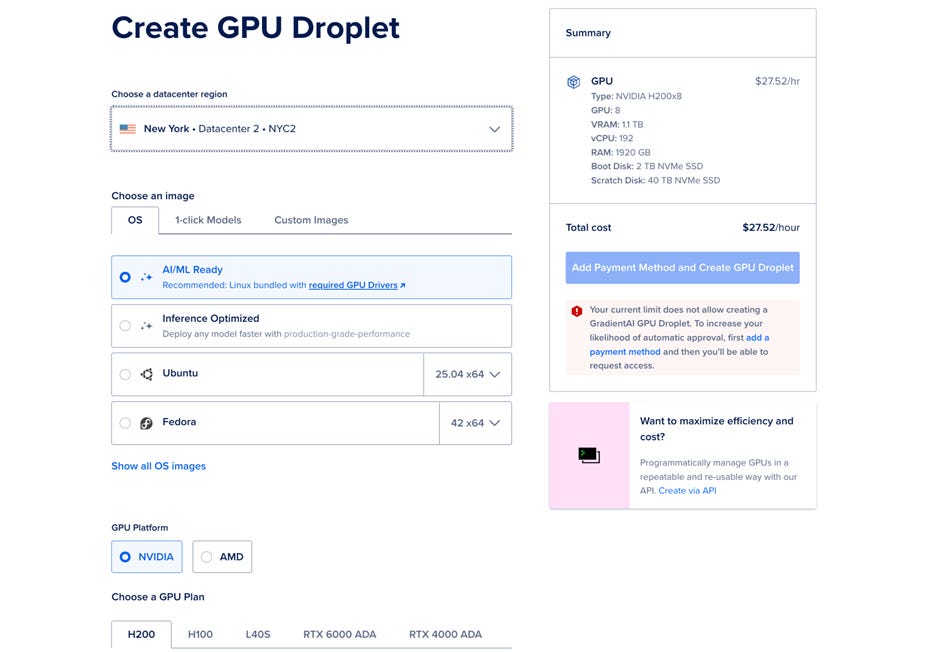

Time to install PyTorch via uv and via pip. This is a proxy for WAN connection quality and local disk I/O for small file operations. Using the modern uv installer, we saw a massive divergence. Best-in-class providers (e.g., CoreWeave, GCP) completed the install in ~3.2 seconds. The median time was ~8.5 seconds, while one Bronze-tier provider took a staggering 41.2 seconds. Using pip was universally slower, with a median of ~28 seconds, highlighting the penalty for not adopting modern tooling.

Time to download pytorch container from NGC. A 22.1GB pull of the standard nvcr.io/nvidia/pytorch:24.05-py3 container is a critical test of a provider’s peering and local caching strategy. Platinum-tier providers like CoreWeave, which maintain local NGC mirrors, completed the pull in under 10 seconds. Gold-tier providers (e.g., Nebius, Oracle) were respectable at 45-60 seconds. Silver-tier providers often took 90-120 seconds, and many Bronze-tier providers exceeded 4 minutes, revealing a lack of basic infrastructure setup.

Time to run “import torch”. This measures the ‘cold start’ time for a developer, including driver initialization and library loading. Anything other than near-instant is a sign of misconfiguration or virtualization overhead. While the median was a tolerable ~1.8 seconds, we found multiple providers, particularly those with complex VM configurations, which have a frustrating 8-10 second delays.

Time to download 15B Microsoft/phi-4 model A real-world test of sustained WAN throughput from Hugging Face’s CDN. The median time was ~35 seconds (roughly 3.4 Gbps). Top-tier providers consistently achieved speeds over 6 Gbps, finishing in ~20 seconds, while others lagged significantly, increasing friction for researchers switching between models.

Time to load 15B Microsoft/phi-4 model into GPU memory A pure test of the local data path, from disk (local NVMe or shared filesystem) to GPU memory over PCIe. The median time here was ~12 seconds. Slower times directly correlate to a poor local storage tier, a bottleneck that will be felt during every checkpoint load.

Location of the machine and IP Ownership match expected provider name A simple whois on the machine’s public IP. This test is critical for exposing aggregators and resellers. In numerous tests of smaller providers, the IP ownership resolved back to Oracle, CoreWeave, or AWS respectively. This challenges some the provider’s marketing and raises questions about pricing and quality of support.

General network speedtest While synthetic, speedtest-cli is useful for spotting WAN saturation or traffic shaping. We saw a median download of ~4.5 Gbps and upload of ~2.8 Gbps. More interestingly, some providers (like STN) would post excellent, symmetrical 10 Gbps speedtest results, but then perform poorly on real-world file (e.g., NGC, Hugging Face) downloads, suggesting traffic management is in place to game these exact synthetic tests.

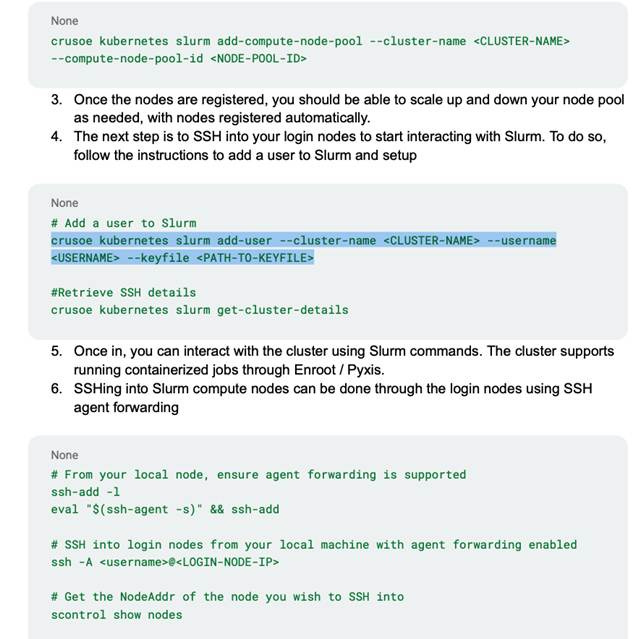

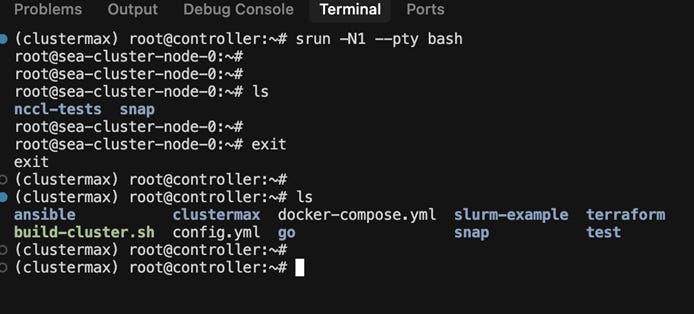

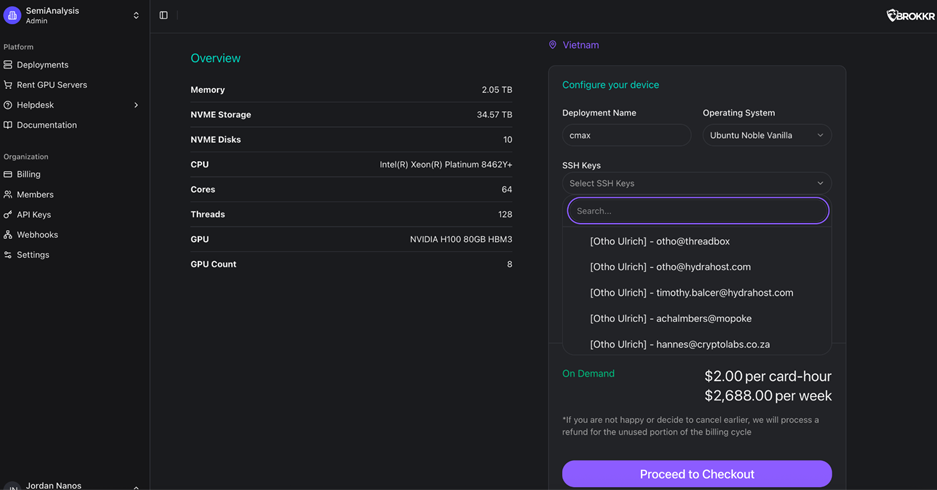

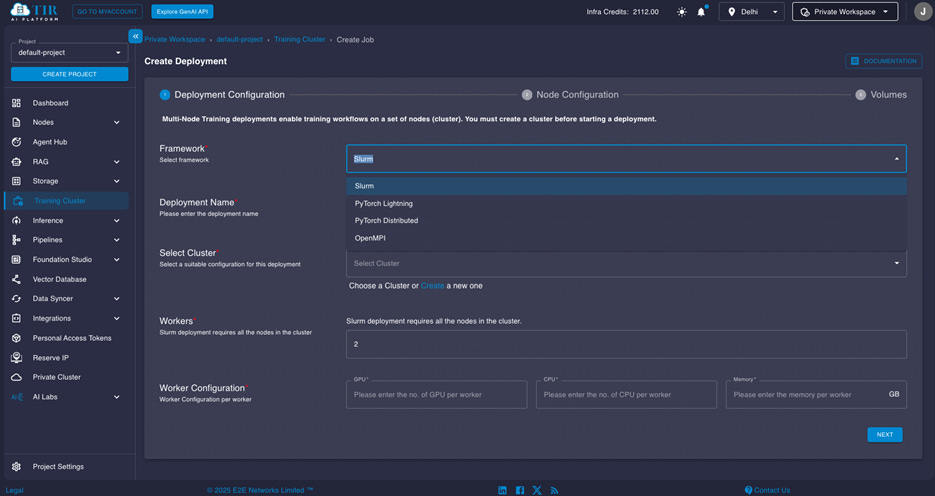

Interconnect bandwidth test (“nccl-tests” or “rccl-tests”) Using the open source repos, built with mpi or using a torch launch script maintained by Stas Bekman in the Machine Learning Open Book, we test the interconnect on synthetic allreduce, allgather, and alltoall workloads and compare to expected algbw and busbw results. This is a simple way to verify that the network is setup correctly and GPU Direct RDMA is enabled on the compute nodes. We run this test on SLURM via salloc/mpirun, salloc/srun, or sbatch/enroot/pyxis. We run this test on Kubernetes via the MPIOperator or via JobSet.

GEMM benchmark Using a custom script we verify that individual GPUs perform General Matrix Multiplication operations at expected performance (realized TFLOPs). This is a simple way to verify that the GPUs are performing as expected. We run this test directly in a python script.

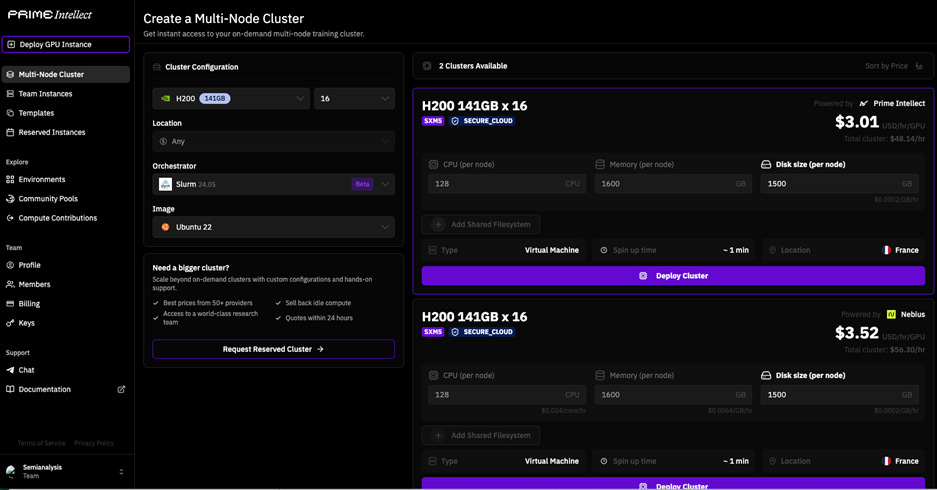

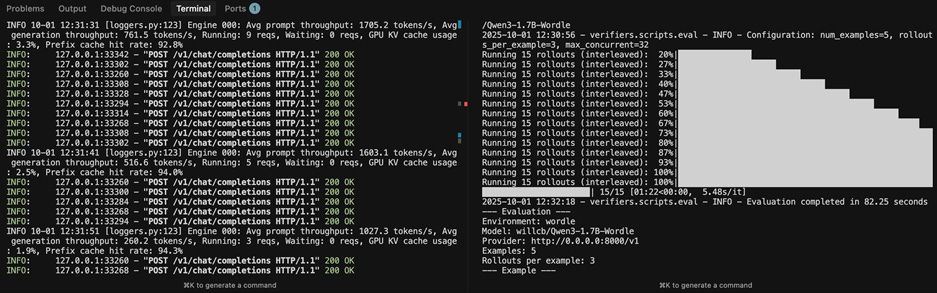

Single node RL job Using the verifiers library from prime intellect, we run a GRPO training script to train a Qwen model to solve the puzzle game wordle and compare against expected training time per rollout on a given GPU. This is a simple way to verify that the GPUs are performing as expected. We run this test directly in a python script.

Multinode pretraining job Using the torchtitan library, we run a multimode pretraining script to train a Llama model on the C4 datasets, and compare against expected training time per step and MFU. This is an important way to verify that the GPUs and interconnect network are performing as expected. We run this test on SLURM via sbatch, and on Kubernetes via PyTorchJob or JobSet.

Multinode inference benchmark Using llm-d, we run a prefill-decode disaggregated endpoint serving a Qwen model. We verify that inference throughput on the vllm performance suite matches expected performance, and verify that performance improves as more copies of the model are deployed behind the endpoint. We run this test on Kubernetes only.

Some of the results are interesting to view when comparing providers directly:

Notable Trends

There are many notable trends changing how the Neocloud industry operates today and what customers expect from their providers. The following is a description of a few of these trends:

Slurm-on-Kubernetes

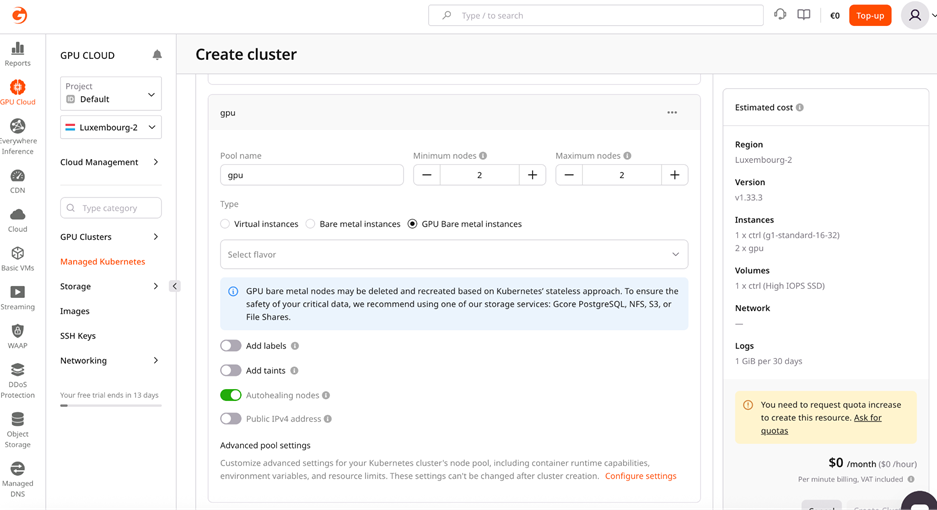

Virtual Machines or Bare-Metal?

Kubernetes for Training

Transition to Blackwell

GB200 NVL72 Reliability and SLA’s

Crypto Miners Here To Stay

Custom Storage Solutions

InfiniBand Security

Container Escapes, Embargo Programs, Pentesting and Auditing

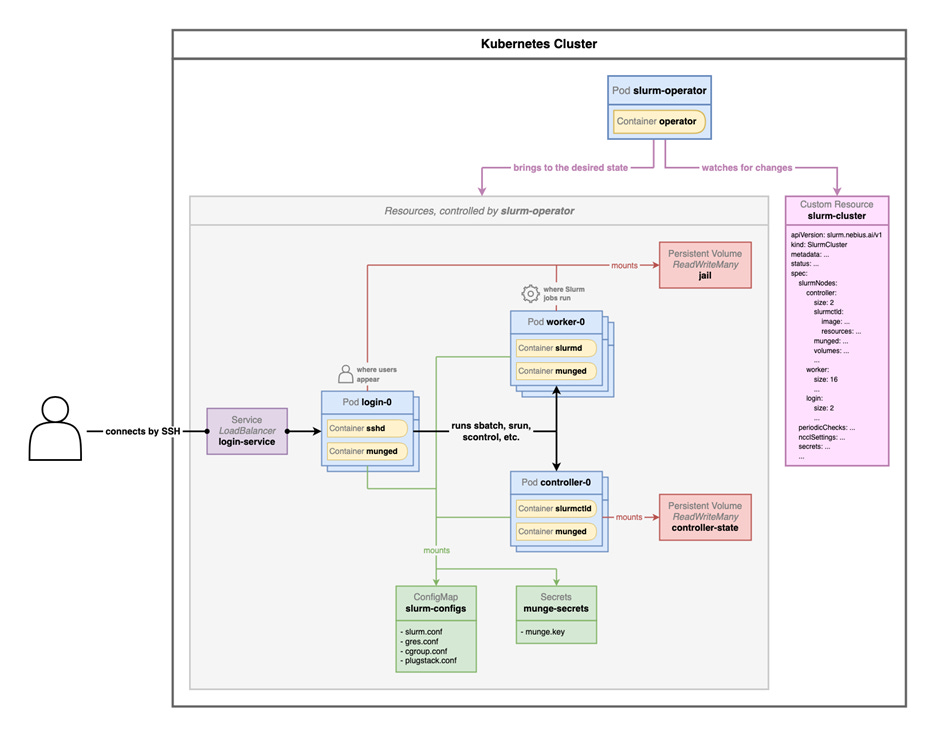

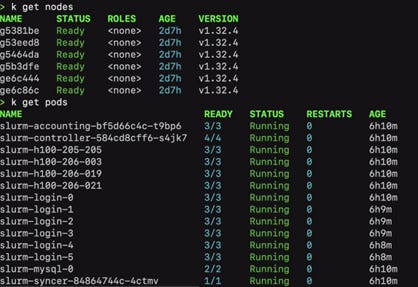

Slurm-on-Kubernetes

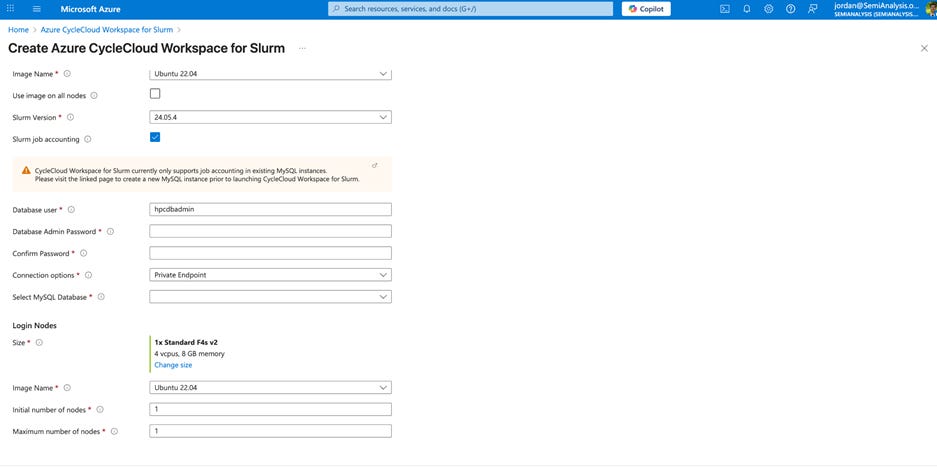

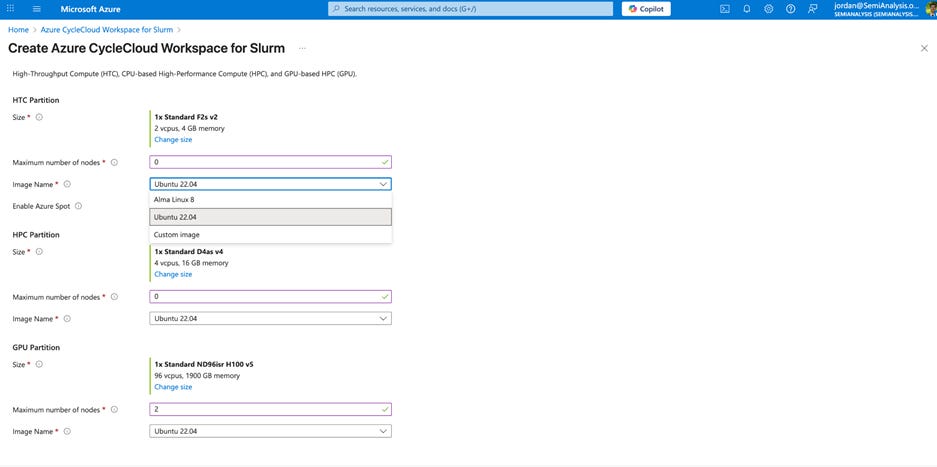

Currently, there are three ways to run Slurm-on-Kubernetes

SUNK from CoreWeave

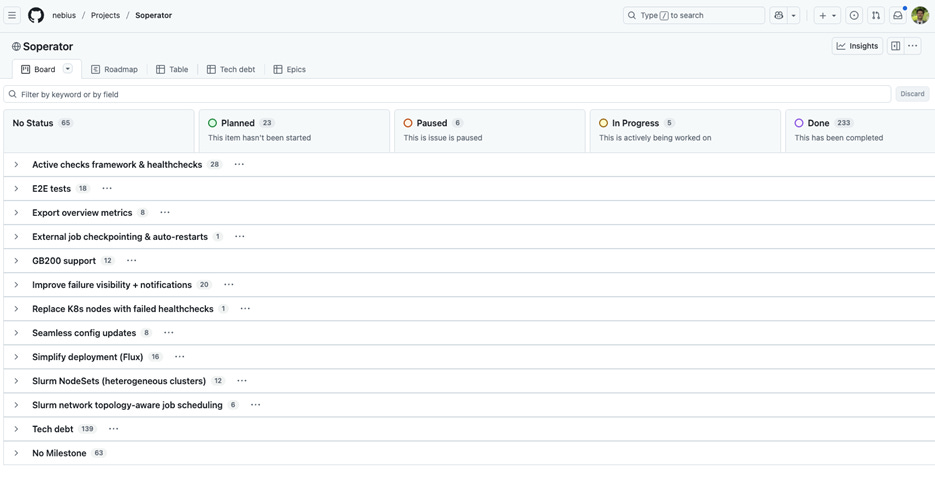

Soperator from Nebius

Slinky from SchedMD

There are major differences between the three Slurm-on-Kubernetes offerings. Below we describe the key differences between these projects, and how they are currently used by different providers and users in the Neocloud ecosystem.

CoreWeave’s SUNK was first to market, is proprietary, and continues to be the only viable solution for running both slurm and Kubernetes jobs on the same underlying cluster. For example, a batch queue of slurm training jobs, and an autoscaling inference endpoint on Kubernetes that compete for underlying GPU resources.

Next, Soperator from Nebius, which is open source has also seen widespread adoption amongst Nebius users that prefer slurm. Unlike SUNK, we are not aware of any users that use the kubeconfig/kubectl access to the underlying Kubernetes cluster to schedule workloads on Kubernetes. Instead, Nebius relies on autoscaling and node lifecycles to move GPUs between a slurm batch queue and a kubernetes inference cluster, as an example. However, access to the underlying cluster is open, and some customers do use this access for cluster lifecycle management, observability/logging, debugging via kubectl, and some even customize Soperator itself, setting up user and access management, VPNs, custom prolog/epilog scripts, CSI drivers, etc.

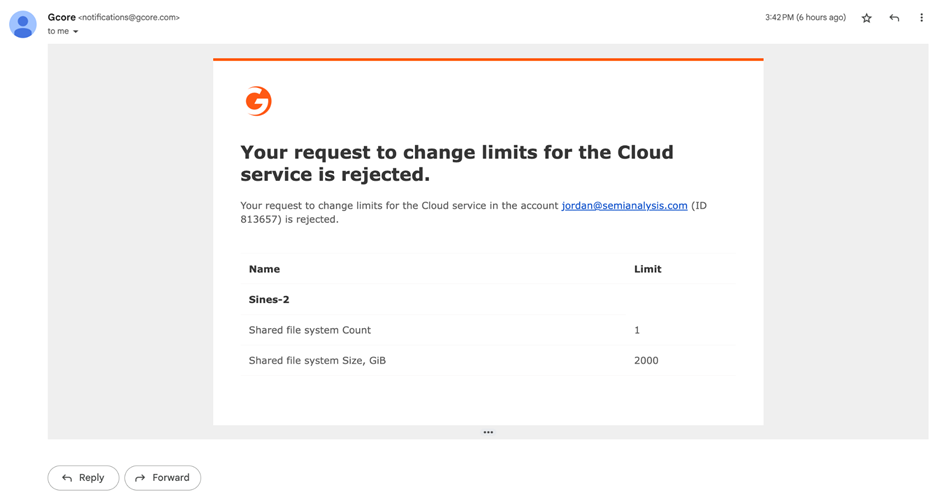

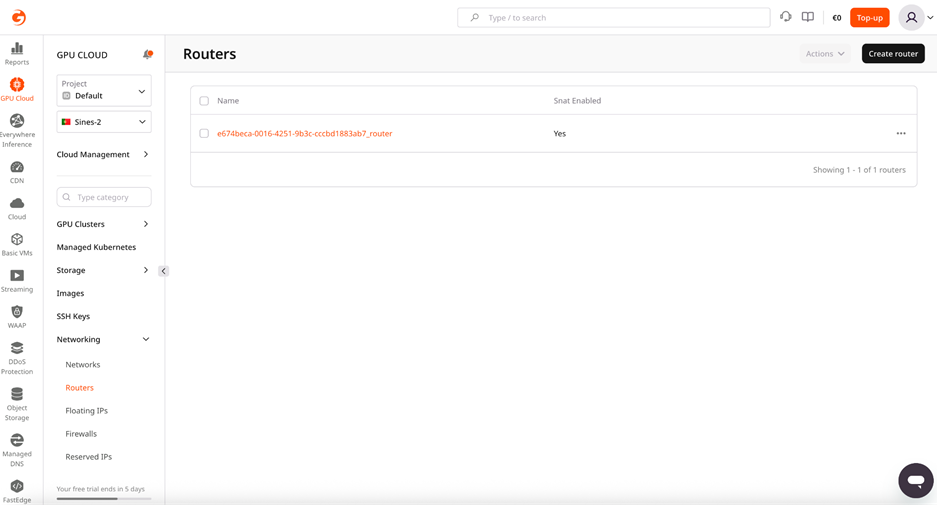

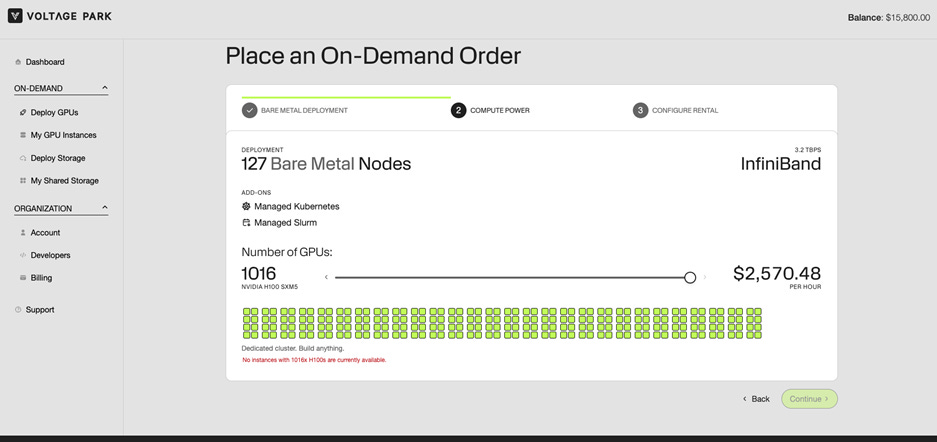

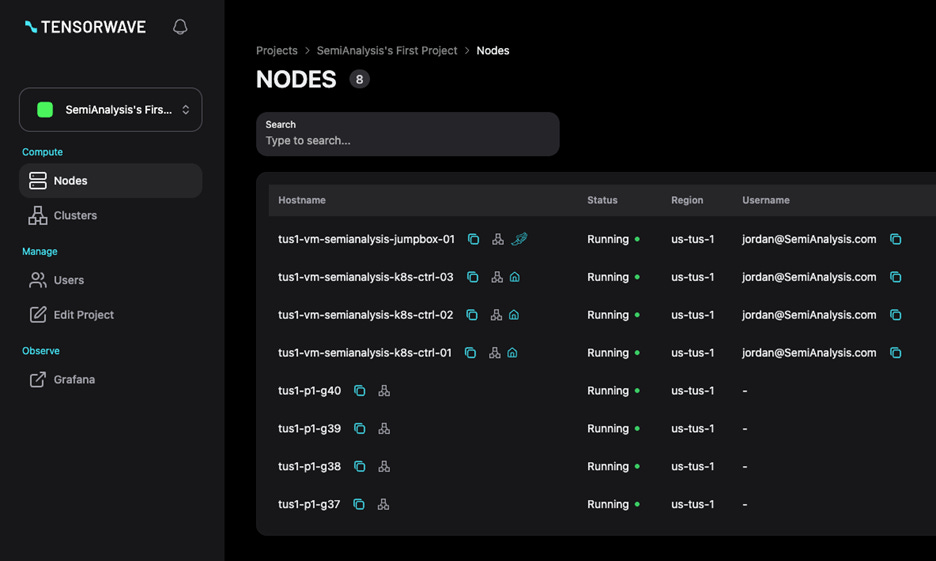

At this point, we are aware of two other cloud providers outside of Nebius (namely, Voltage Park and GCORE) that rely on Soperator to provide their SonK services.

Finally, Slinky from SchedMD, the original creators of slurm, gatekeepers of the slurm roadmap, PR deniers, and sole providers of support: https://github.com/slinkyproject

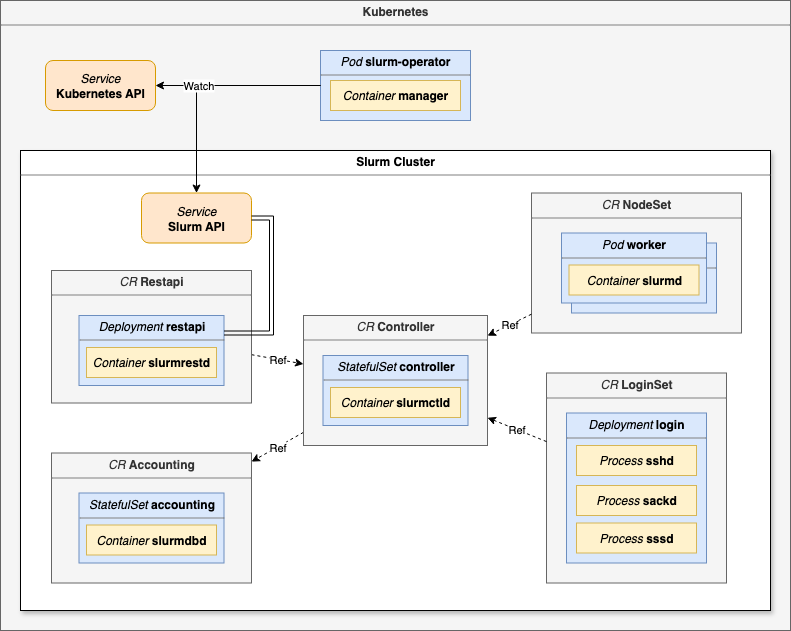

At this time, Slinky is broken into separate projects, most importantly slurm-operator, which is a set of custom controllers and CRDs capable of running the core slurm services on a Kubernetes cluster, instead of directly on bare metal or VMs. These slurm services are:

slurmctld – the slurm controller which manages what jobs run on what slurm workers in the cluster, and what the health state of these workers are. On kuberenetes this is

slurmd – the slurm worker nodes themselvesslurmrestd – provides an API endpoint for slurmctld

slurmdbd – a database to store job stats, i.e. who ran what

To do this, slinky introduces a new process, slurm-operator, for managing the slurm resources on the cluster. Slinky also introduces a separate login pod, managed as a CRD “LoginSet” that runs the sshd, sackd, and sssd processes needed for users to run commands against the slurm cluster such as srun, sbatch, salloc, scontrol, squeue, sinfo, sacct, and sacctmgr.

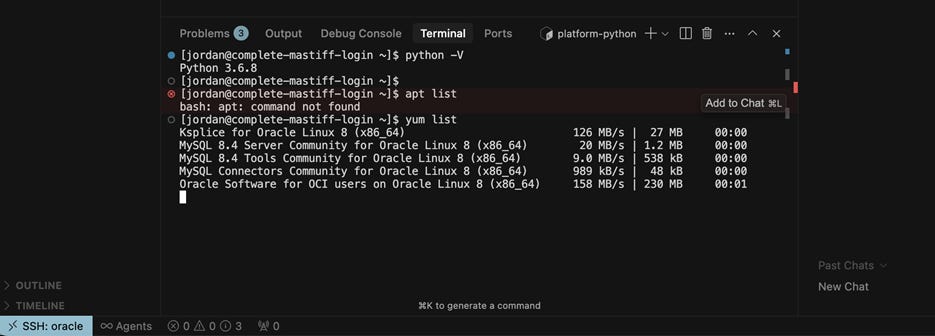

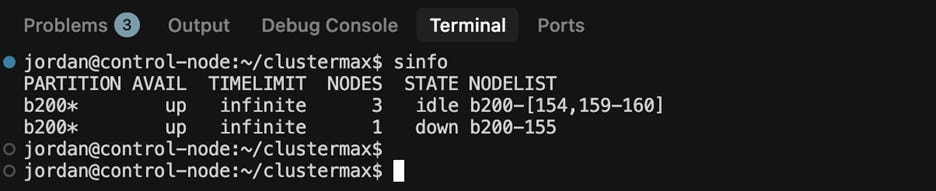

By default, Slinky out-of-the-box is not usable. Unfortunately, this has become a giant footgun for cloud providers that want to run slurm on kubernetes for whatever reason, but unfortunately don’t have the slurm experience to test anything other than the “sinfo” command. The default Slinky LoginSet pod is missing vim, nano, git, python, sudo permissions, and more. This has led to five different cloud providers giving us access to login to a slurm cluster, run sinfo and then… nothing else. How can we download our codebase without git? How can we edit a file without vim/nano? How can we run a script without python? How can we install any software without permissions to run apt?

SSH access between nodes is typically also not an option in these environments. Explaining Slinky is basically the easiest way to start explain SUNK and Soperator. So let’s do that.

Soperator was originally announced in October 2024 during Q3 Financial reporting, with an intro blog and explainer to go with it, but managed Soperator was not released until June 2025.

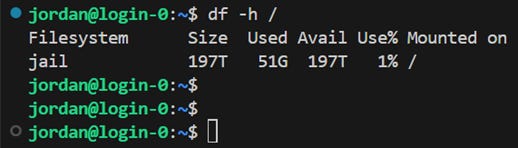

Architecturally, Soperator is similar to Slinky in that it runs on top of kubernetes, not beside it. In Nebius’s implementation, users are provisioned qemu based VMs on an internal-facing kubernetes cluster, and Soperator manages the Slurm environment within that. Effectively, at Nebius, it’s Slurm-on-Kubernetes-on-VMs-on-Kubernetes. However, that last part is not mandatory, any VMs or bare metal kubernetes cluster will do. Soperator works just fine on other kubernetes clusters, assuming that storage gets setup correctly.

Technically, Soperator is a Kubernetes Operator that automates the deployment and lifecycle of the relevant slurm services. The entire cluster is defined declaratively via a SlurmCluster Custom Resource Definition (CRD):

Provisions KubeVirt VirtualMachine resources for the Slurm control plane (slurmctld, slurmdbd).

Generates the necessary configuration files (e.g., slurm.conf) and distributes them to the VMs.

Manages compute partitions as SlurmNodeSet resources, which in turn create and manage the VM pool for slurmd workers.

Critically for performance, it orchestrates the passthrough of hardware like NVIDIA GPUs and InfiniBand/RoCEv2 NICs directly to the VMs, allowing jobs to achieve bare-metal performance.

It handles elasticity, allowing users to scale partitions up or down by changing a replicas field in the CRD, which Soperator reconciles by creating or deleting the underlying VMs.

However, unlike SUNK or slurm-bridge, Soperator does not provide a hybrid scheduler to co-locate and arbitrate between competing slurm and kubernetes jobs.

Finally, to describe SUNK in detail, it is important to focus on the difference between metadata management and integration with CKS at CoreWeave. SUNK is built with a slurm syncer pod that propagates metadata between slurm services and the Kubernetes scheduler. This is evident when the slurm epilog runs, it sees a failure in node health, and throws an error to the slurm controller, setting the node into a “drain” state. The integration does not stop at the slurm level, it also integrates with kubernetes via a custom scheduler. A slurm job actually creates kubernetes resources with custom scheduler logic for a given pod, which sets a dummy schedule, lets slurm do the scheduling, and then connects that pod to the slurm controller. This means that users can run both kubernetes and slurm jobs on the cluster, but everything is actually being scheduled by slurm. The result is that the slurm controller is aware of all workloads running on the cluster.

In addition, the default behavior of the LoginSet for Slinky and Soperator leaves a lot to be desired. With SUNK, the login pod controller flow includes a hook up with the LDAP/IAM system for user management, and automatically load balances users into an interactive, ephemeral ssh pod that is spun up on-demand and mounted to the standard shared fs for the user’s /home directory. Accessing the pod can be done via ssh, or via the tailscale operator, meaning the cluster doesn’t need a public IP for every login pod. With Slinky, the load balancing is an IP-based layer 3 round robin, which can lead to differences if a user installs some software and then gets disconnected from the cluster.

Overall, it is clear that many providers are looking to adopt the mutual benefits of kubernetes for lifecycle management of the hardware and reliability guarantees, with the ease of use and familiarity that slurm provides users for batch scheduling. We expect Slurm-on-Kubernetes will continue to be a compelling option for providers to use going forward.

Virtual Machines or Bare-Metal?

The classic debate is evolving. Providers like CoreWeave and Oracle champion bare metal for maximum performance. However, this approach necessitates offloading networking, storage, and security functions to DPUs (like Nvidia BlueField), replicating the hyperscaler model that AWS popularized with their Nitro system.

Meanwhile, providers like Nebius (kubevirt) and Crusoe (cloud-hypervisor) utilize lightweight VMs. The claim is that this provides significant operational benefits such as rapid provisioning (seconds vs. minutes/hours), stateful snapshots, shared storage, and cleaner security isolation. In the case of Nebius, they have achieved bare-metal class performance as confirmed by our testing, industry benchmarks, and direct conversations with their users. With Crusoe, this level of performance is an ongoing work in progress.

Overall, the choice is now less about performance and more about architectural philosophy on the side of the provider. It is notable that even amongst the top tier providers in the industry there is not a settled best practice between bare-metal, VMs (cloud-hypervisor), and VMs-on-kubernetes (kubevirt) as the best option for infrastructure lifecycle.

Kubernetes for Training

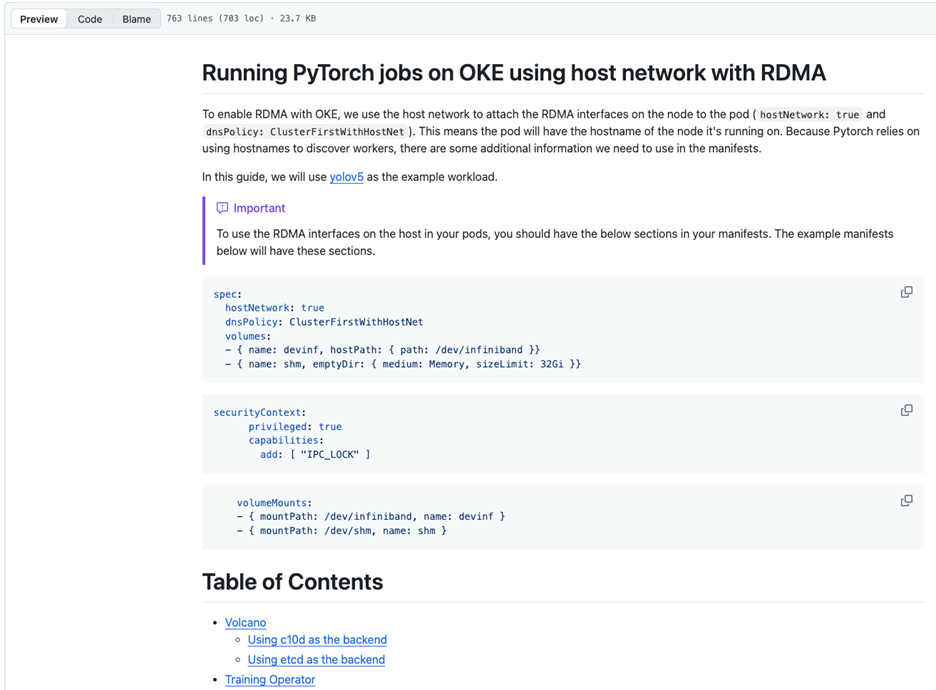

Without Slinky, Soperator, or SUNK, there are still ways to run training jobs on Kubernetes.

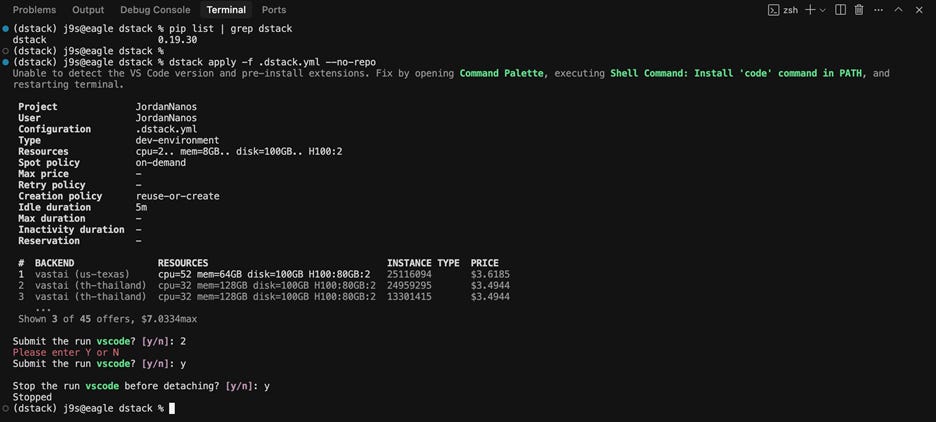

Specifically, tools/CRDs like Kubeflow (MPIOperator, PyTorchJob, TrainingOperator), Jobset, Kueue, Volcano, Trainy, dstack, and SkyPilot all provide methods for scheduling a training job on a kubernetes cluster, and handling a batch queue.

It is useful to understand what each tool does, and where it came from:

Kueue – CRD – Manages job queues and user quotas. Works with the default kubernetes scheduler. Helps teams share underlying cluster resources (i.e. who gets what GPUs).

Volcano – Custom Scheduler – Provides gang scheduling, job dependencies and other advanced features that are missing in the default kubernetes scheduler. Can be used with Kueue.

PyTorchJob – Operator/CRD – Lifecycle management of pytorch jobs. Comes from the KubeFlow community.

MPIOperator – Operator/CRD – Manages the lifecycle of MPI jobs. Comes from the KubeFlow community.

KubeFlow Training – Platform – a collection of multiple different training operators, includes more than just MPI and PyTorch.

Jobset – CRD – General purpose resource definition for gang-scheduled jobs, improving on the Kubernetes batch API. Contributed by Google to the kubernetes community, and growing fast in adoption. Can be used with Volcano and Kueue.

Trainy – Platform – Simplifies job submission to kubernetes, reducing yaml burden for users to contend with. Paid product.

dstack - Platform - Simplifies job submission to kubernetes, reducing yaml burden for users to contend with. Open source with enterprise option (support, SSO).

SkyPilot – Platform – Simplifies job submission to kubernetes, reducing yaml burden for users to contend with. Open source, growing fast in adoption.

We expect the market to consolidate around a few approaches, namely: Jobset + Kueue for those interested in OSS, Volcano for those requiring large batch queues with gang scheduling, and SkyPilot for those running in multi-cloud scenarios.

Transition to Blackwell

We have seen a steep drop in H100 pricing over the last year, and a hesitation for many organizations to move to Blackwell. We believe that performance improvements like this will come in due time, especially for users working on FP8 and FP4 (MXFP4 or NVFP4 datatypes), and especially for inference. During our test period we received a mix of H100, H200, and B200 nodes from different providers.

For our rankings, at the current time, we mainly consider Hopper performance, with H100 and H200 still being the most popular clusters (in terms of quantity of purchases, not total volume) that we have heard about during our discussions with end users.

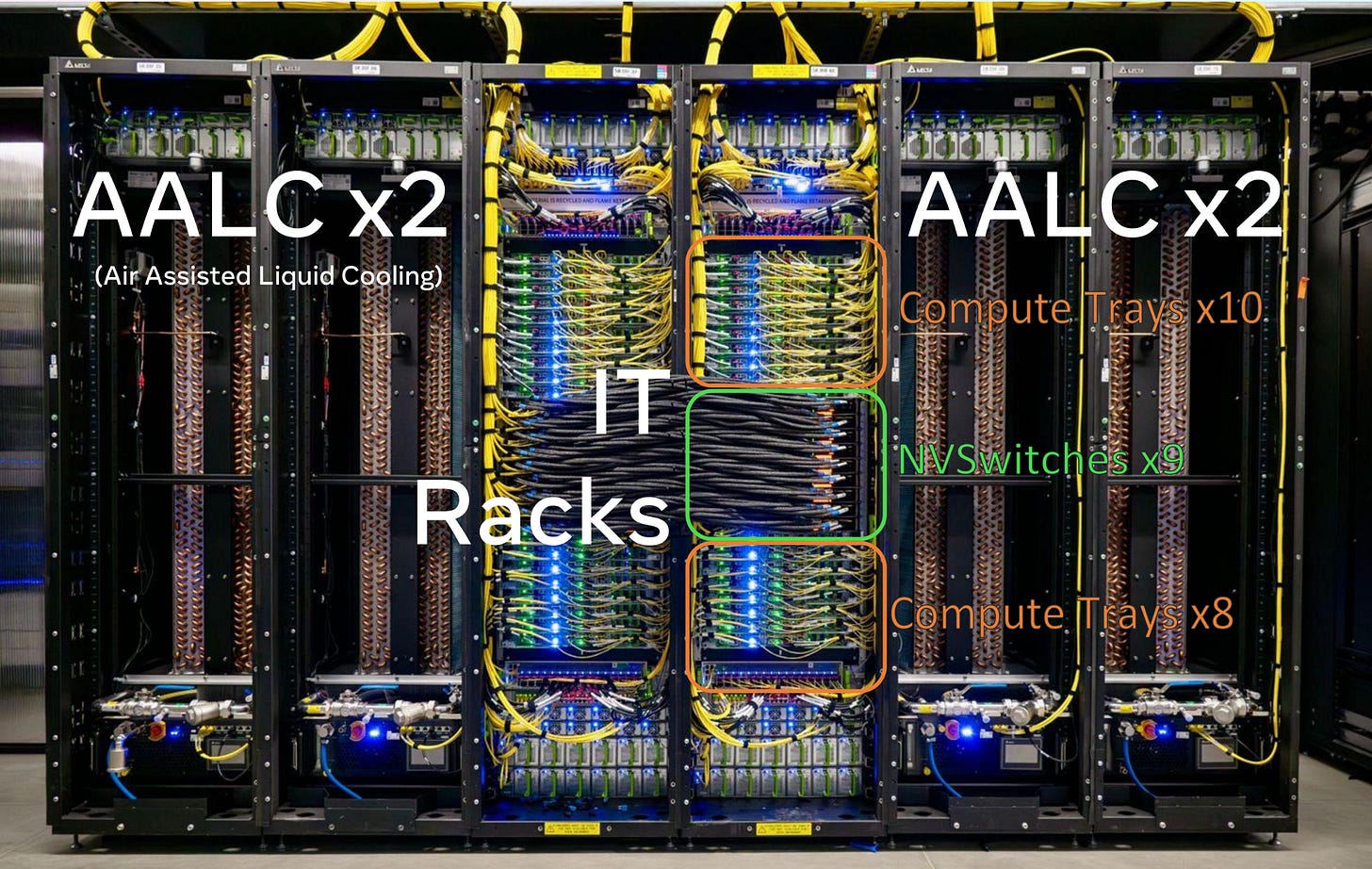

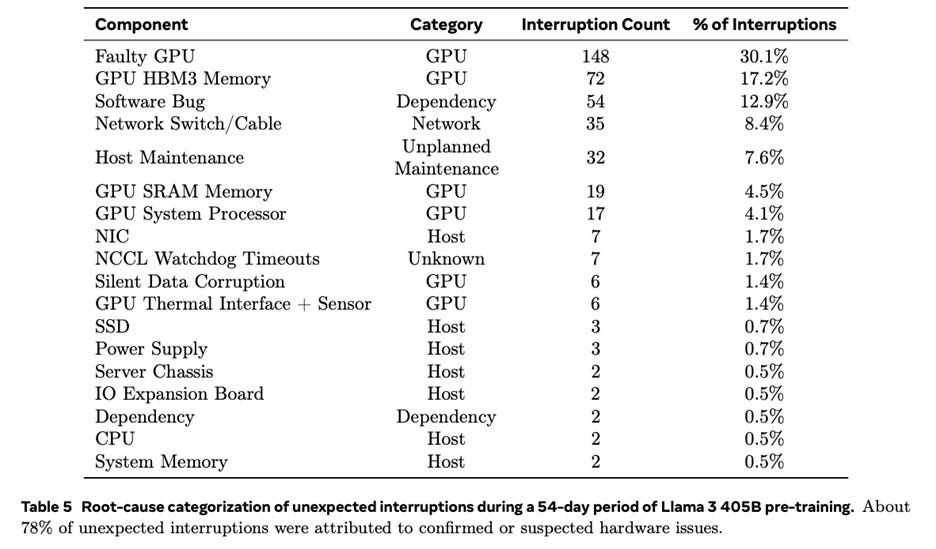

GB200 NVL72 Reliability and SLA’s

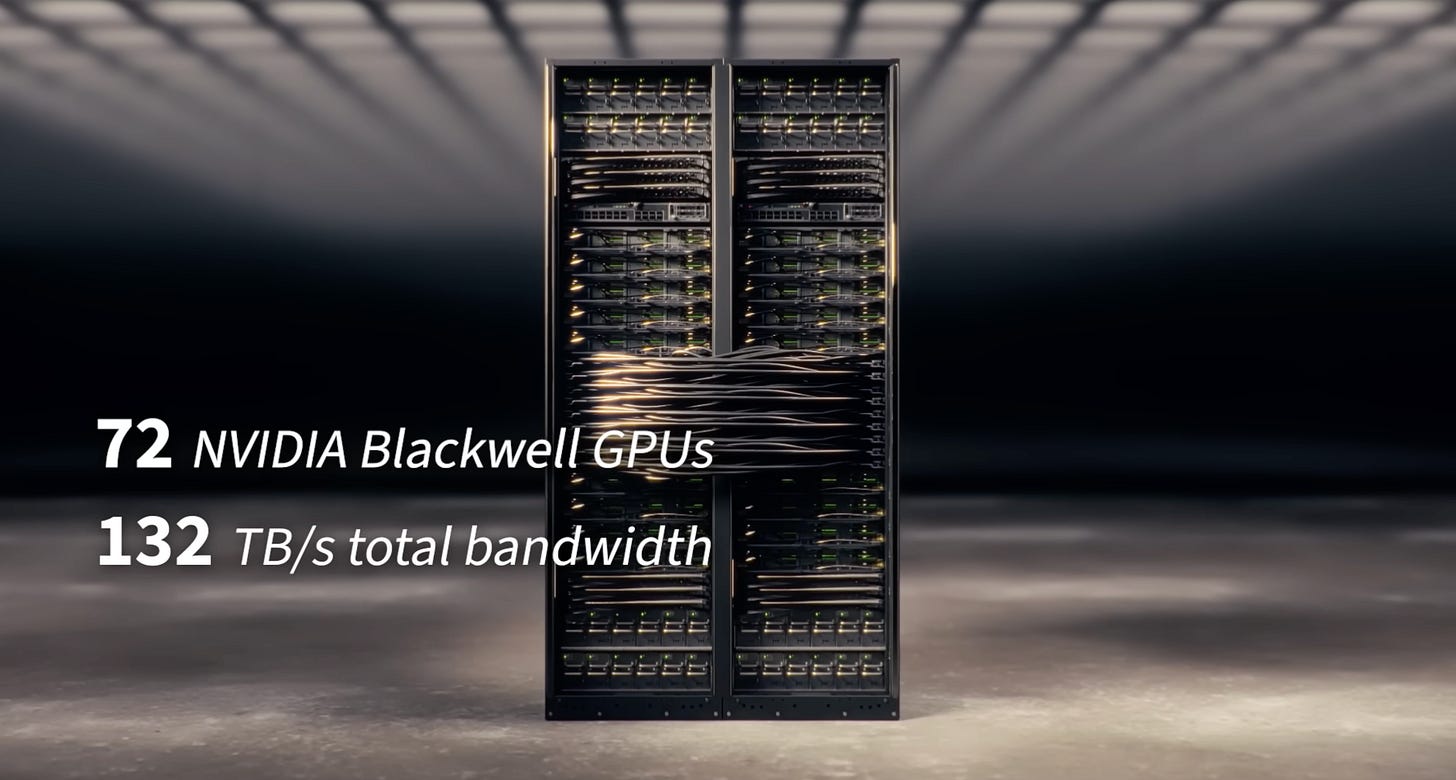

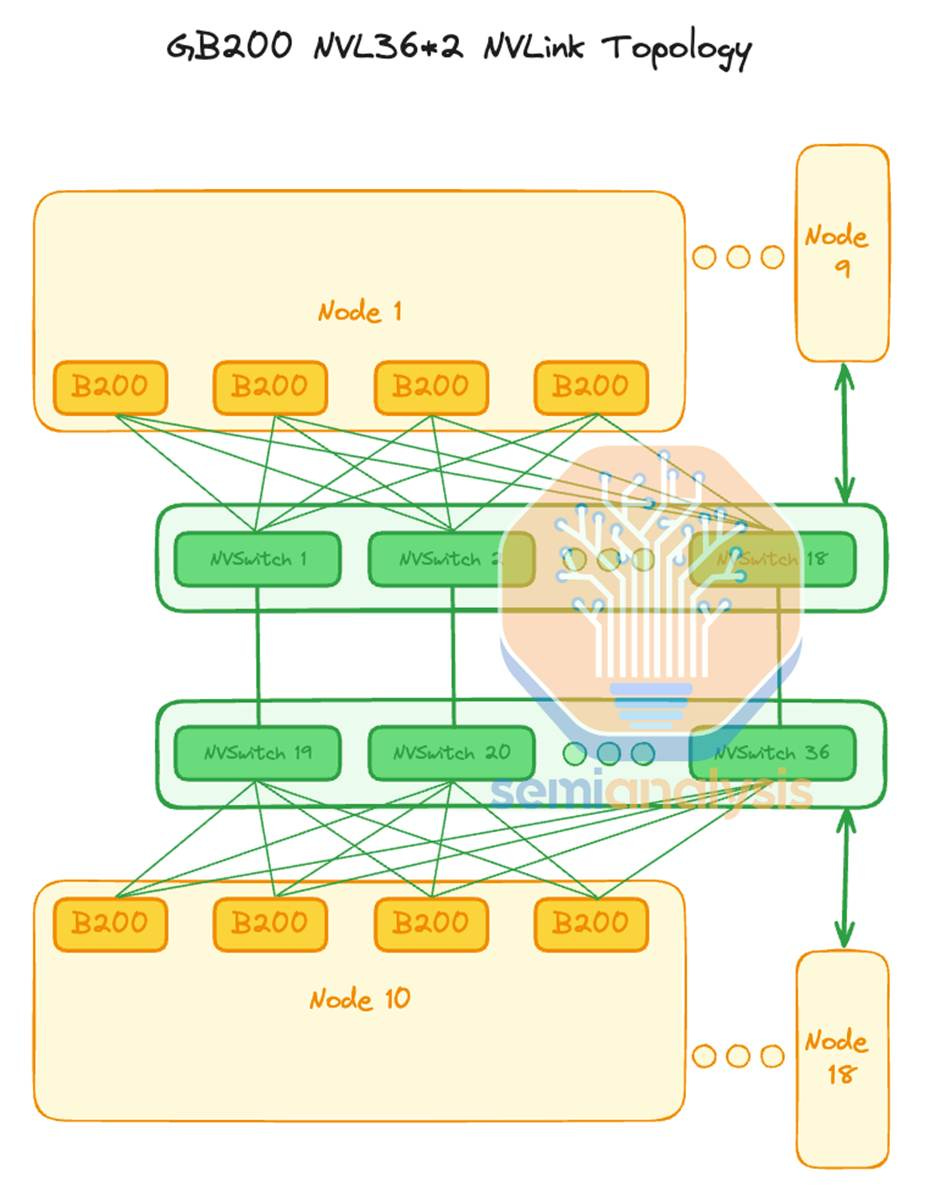

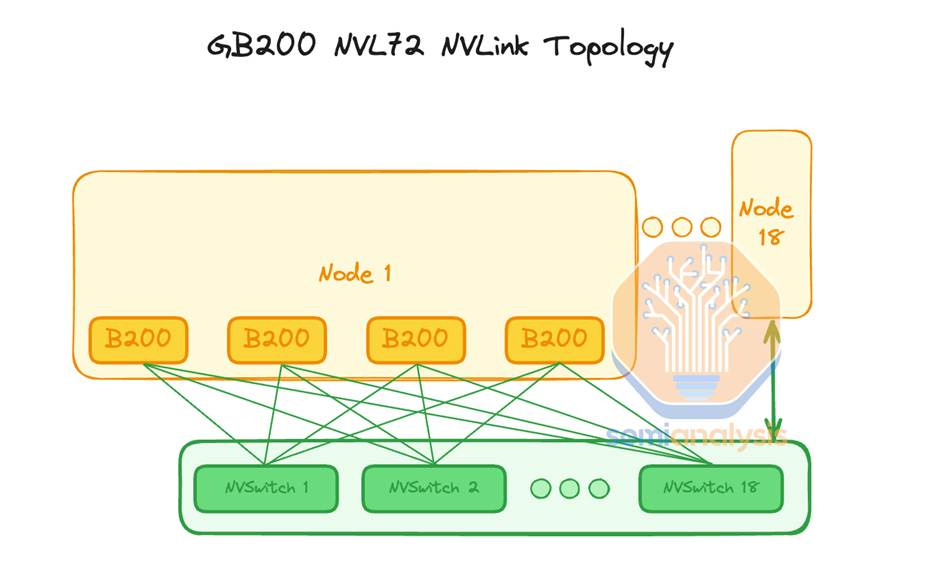

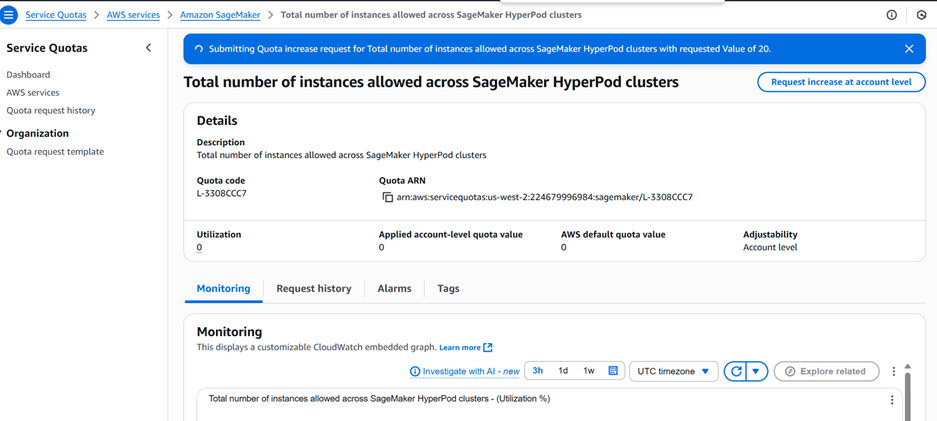

With Hopper, the failure domain was the node. With Blackwell rack-scale systems like the GB200 NVL72, the entire rack including compute, NVLink switches, cable cartridge/backplane and multiple fabrics (scale out, scale up) is the failure domain. This fundamental shift makes reliability and Service Level Agreements (SLAs) a critical piece during pricing negotiations between customer and provider.

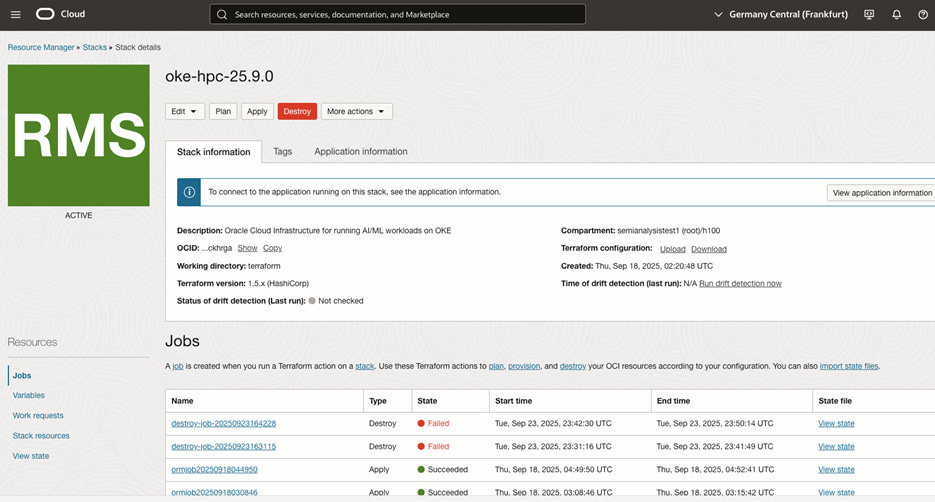

As we described in our GB200 NVL72 article in August, a single faulty component can require draining an entire 72-GPU rack. To be clear, this does not literally mean draining the liquid from the cooling loops in the rack, it means the slurm concept of node state = “drain” or kubernetes node health state = “drain” and the node is “cordoned”. In response, top-tier providers are moving beyond node-level uptime and introducing rack-level SLAs. We are seeing top providers like CoreWeave and Oracle begin to offer 99% rack-level uptime guarantees with requisite penalties attached, even when managing hot spares on behalf of the customer.

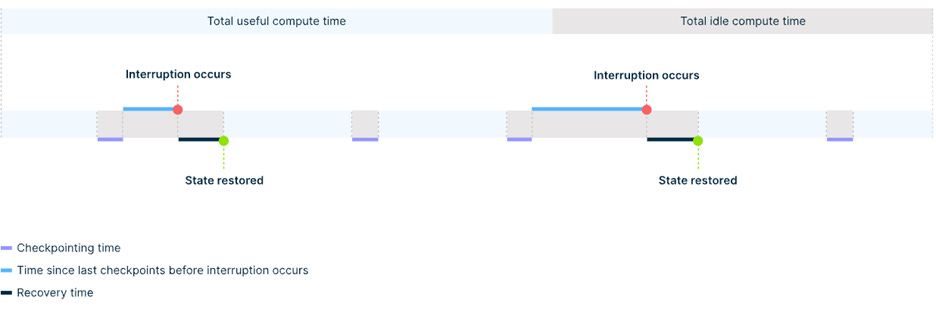

We believe that achieving this level of uptime on a GB200 NVL72 rack scale system is only possible with a massive investment in proactive, automated health checks (both active and passive), sophisticated monitoring to detect and remediate failures before they interrupt a job, an automated provisioning process for hot spares deployed at scale, and a full, vertically integrated commitment from team members from the technician to the SRE to the end user.

This was particularly potent at GCP and AWS, who went for NVL36x2 instead of a full NVL72, though we have heard about backplane/cable cartridge issues from all providers currently deploying GB200 NVL72 at scale too. Nvidia is saying that the issue was traced to a firmware bug that caused NVLink connections to lose sync over time, not to a hardware fault. So after shipping ES/PS systems as early as November 2024, with volume shipments beginning this past spring, Nvidia finally is releasing this month the all-too-famous firmware version 1.3 that makes the system stable to use. To be clear, this is a delay of 6-7 months after shipping NVL36x2 systems. This also affects a lot of Meta’s racks, where they opted for Ariel NVL36x2 (72 CPU + 72 GPUs) as we described in our GB200 Hardware Architecture - Component Supply Chain and BOM article.

As shown below, NVL36x2 racks experience these intermittent NVLink reliability issues between the cross-rack ACC cables that take two NVL36 racks and connect them together to make a logical NVL72. These ACC cables and the flyover cables have troublesome signal integrity issues due to their additional length.

One of the issues that this firmware update will address is a firmware bug that causes NVLink connections to lose sync over time. We believe that there continues to be significant hardware issues in these systems, including complete failures, transient interruptions, and also in software stack instabilities from firmware to drivers to software libraries that require upgrades to the latest version in order to achieve an acceptable end user experience. Many end users and hyperscale operators of NVL36x2 and NVL72 are still complaining about the reliability of the backplane, cross-rack ACC cables, and the additional flyover cables.

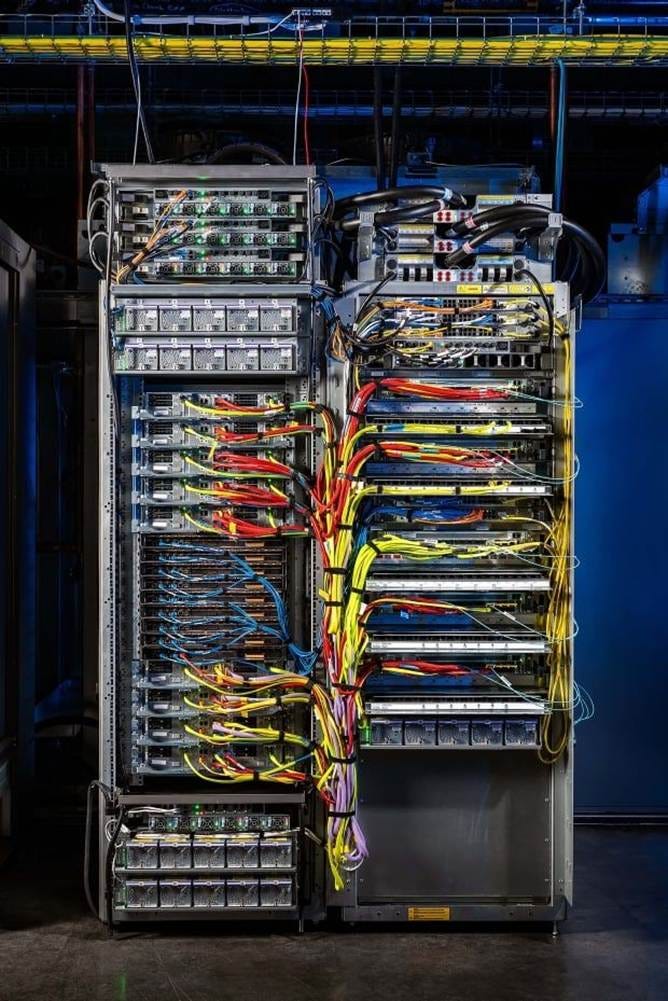

The challenges that providers describe as the most difficult part of operating GB200 NVL72 rack scale systems is not the total amount of failures, perceived component quality, or even the stability of the software stack. It is the blast radius, and time to recovery. Here we describe the process to provision a GB200 NVL72 rack from scratch in the event of a backplane/cable cartridge failure, assuming that the pre-requisite to begin repairs has been accomplished (i.e. the rack has been drained/cordoned at the cluster level, and no jobs or only pre-emptible jobs are running):

Mechanical validation. Assuming that the NVLink backplane/cable cartridge (effectively, the rack’s “spine”) was replaced, or this is a fresh rack being booted for the first time, ensure strict alignment of all blind-mate connectors from compute/switch trays into the backplane.

Power validation. Energize the PDUs in the rack, but leave compute trays in standby state (POST) and focus on the NVLink fabric.

Connectivity for out of band management. MAC addresses for all BMCs on all 18 compute trays and 9 NVLink switch trays are identified and accessible by the cluster manager (i.e. NVIDIA Base Command Manager, Canonical MaaS). IPs are assigned to these BMCs.

Apply firmware baseline. If there is a mismatch between the compute trays with Grace CPUs, NVLink switches, CX-7 or CX-8 backend NICs, and BlueField-3 frontend NICs, there will be issues. A golden image with correct firmware package must be applied to all rack components simultaneously. Note there is exact compatibility requirements between the NVLink switch firmware and the driver versions coming at the OS level later that must be respected.

Boot NVLink switch trays. Before loading the OS on the compute trays, NVLink switch trays must be booted to a minimal OS/firmware stack, then chassis-level diagnostics are run. In other words, all 72 Blackwell GPUs need to be able to see each other across the new backplane/cable cartridge/“spine”. If re-seating or swapping does not fix the problem, the troubleshooting process begins anew (do not pass go, go straight to mechanical validation with new backplane).

Calibrate NVLink fabric. Fabric training involves forcing the SerDes in the NVLink interfaces to train against the copper backplane, adjusting their electrical signalling parameters to establish a stable connection. This validates that all links are running at full speed. If links fail to train, or negotiate at low speeds, you guessed it: try re-seating, and swapping to establish exactly which component is faulty (compute tray, NVSwitch tray, copper backplane, connectors/interposers). Potentially, begin anew with a new backplane.

Provision the Operating System. Generally, the target OS is deployed to all nodes simultaneously, with a recipe of GPU drivers, MOFED/DOCA drivers, CUDA Toolkit, DCGM, and the Fabric Manager that matches the new firmware baseline.

Start the fabric manager. All compute trays need to be running in order to validate the 72 GPUs still see each other over NVLink at the OS level.

Integrate with the scale-out network. The scale-out fabric (distinct from the scale-up NVLink fabric) is InfiniBand or RoCE. For InfiniBand, UFM must discover the new GUIDs, assign LIDs, and recalculate fabric routing paths. For RoCE (Spectrum-X), the topology location of new nodes is identified with LLDP and the cluster manager (BCM) automatically assigns IPs. Nodes advertise their routes into the fabric with BGP, updating forwarding tables across all switches. A similar process is applied for the frontend/N-S uplink network.

Burn-in validation. The provider’s standard active health check suite is applied to all nodes in the rack, running tests such as dcgmi diag -r 3, nccl-tests, multinode ubergemm, and a sample mutlinode training job to ensure that CPUs, GPUs, scale-up, and scale-out interfaces all experience thermal expansion and contraction at the same time.

We describe this process in detail to shine some light on why providers believe that the provisioning process for a fresh backplane or a brand new NVL72 rack can take anywhere from 9 hours to multiple days. If anyone reading this has personal experience trying to install Nvidia drivers on their gaming PC or workstation, just imagine doing that on 18 PCs and 9 workstations at once, except you’re also installing a new OS on all of them while your kid randomly flips breakers in the garage and the clock on the microwave has to match the one on the stove.

Anyway, an important concern here is diagnosing the correct failure. Experienced NVL72 operators certainly do not want to spend time swapping an expensive component if it is not necessary, and especially when that time can constitute a 1 day rack outage that counts against their uptime SLA with their customer. All sorts of tricks have emerged to localize and identify the exact failure that is occurring. Here is a rough breakdown:

If a single link has failed, it’s a specific pin on a tray connector or a single trace on the backplane

If all links on one GPU fail, it’s the SerDes on that specific GPU or its local routing to the tray connector

If all links on one tray (4x GPUs) fail, it’s a board failure on that tray or that slot

If there are widespread, random failures, it’s the backplane

In the case of first-time failures on a rack, it is also important to consider an A/B swap of trays to pinpoint if failures move with the tray or stay with the slot. To do this in production, we stress the importance of high quality monitoring dashboards, active/passive health checks, and automatic remediation on the side of the provider and promote it throughout our ClusterMAX research effort.

It is also unclear how Nvidia supports customers with tooling. We hear different failure scenarios described by different providers, which seem to currently be solved with a whack-a-mole approach. In other words, Nvidia is coming up with tools and approaches on the fly both for debugging and fixing failures. This leaves providers to differentiate both with their operational processes, and software systems. It seems that the speed which which a provider can onboard a new debugging tool into their automation stack can make all the difference.

The result of all of this is an SLA commitment from the provider to the customer. To booth, we have seen a wide variety of SLAs being applied to these rack-scale systems, involving a range of different uptime guarantees and penalties associated with a breach. We have seen remarkably different approaches:

A provider commits to a node level SLA, and a rack level SLA. In other words, individual nodes must have a 99% uptime, but a rack (defined as 16 of the 18 nodes, or 64 of 72 GPUs) has a lower SLA at 95%. Customers get access to all nodes in the rack.

A provider commits to a node-level SLA only. In other words, individual nodes have a 99% uptime guarantee, and the customer only gets access to 16 of the 18 nodes in the rack. The leftover nodes are used by the provider for other purposes (including as a hot-swap for the customer, for other customer workloads, or for internal workloads)

A provider commits to a rack-level SLA only. In other words, all 18 of 18 nodes must be up 95% of the time, and the customer gets access to all nodes.

The way in which credits or deductions work is also unique. In the event of a breach, some providers insist on providing a credit towards a future monthly bill that the customer spends in the future. Other providers reconcile in realtime, deducting any downtime off the current month’s bill.

At this time, we don’t have a strong preference between approaches to SLAs nor a recommendation to providers or customers. However, after living through the Hopper generation of deployments, we hope that there will be less people suing each other this time around. Less hostility = better outcomes for everyone.

Remember kids, CoreWeave called GB300 “production ready” back in August!

Crypto Miners Here To Stay

The “AI Pivot” for crypto miners is real, and is primarily driven by their head start over pure-play AI Neoclouds. Miners have long been in the business of finding cheap, large-scale power sources. With GenAI taking off and changing traditional datacenter requirements, many Bitcoin miners realized they were sitting on a gold mine. We came to the same conclusion and laid out bullish views on the industry prior to an avalanche of deals in 2025 leading to surging stock prices.

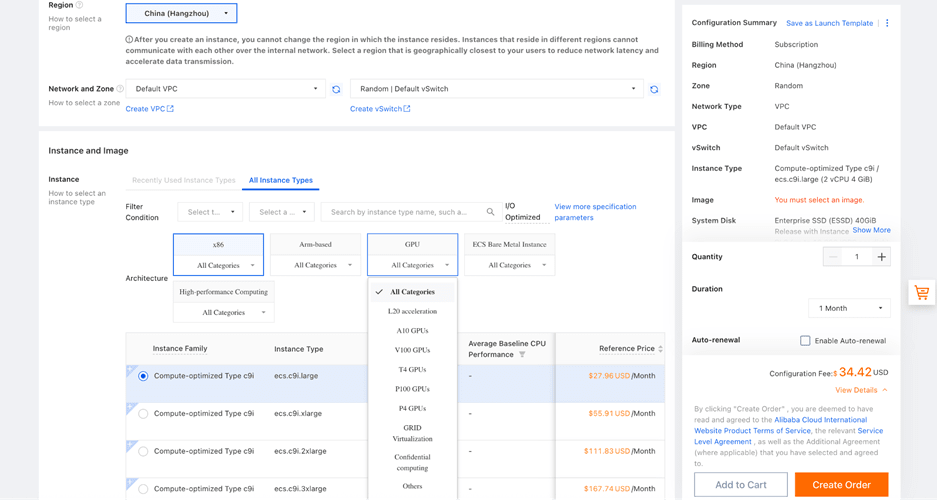

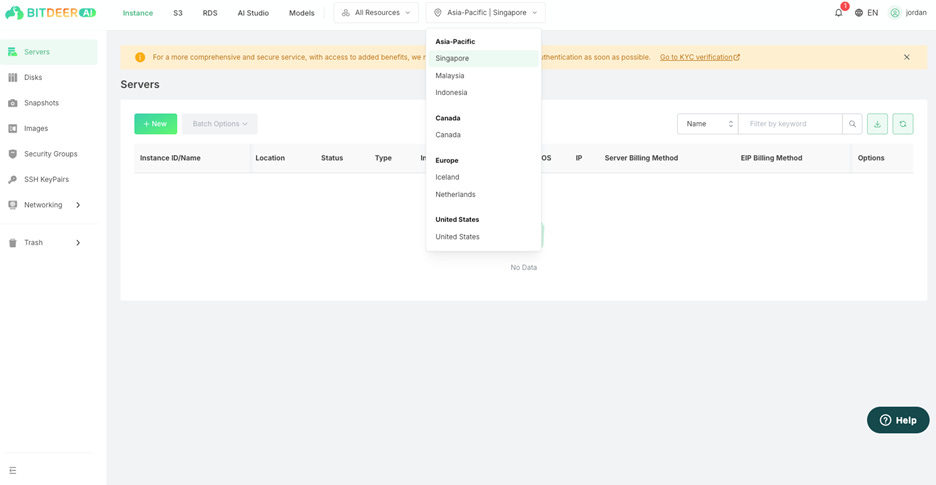

While industry protagonists have mostly been successful at building and leasing AI Datacenters to Neoclouds, some realized that they could increase their realized value per MW by going vertical. Today, many of the top Bitcoin mining companies by hash rate now have a dedicated AI Cloud business. This list includes, but is not limited to, publicly traded American companies such as Terawulf, Cipher Mining, IREN/Iris Energy, Hut 8/Highrise, VCV Digital/Atlas Cloud, BitDeer, Applied Digital, and Core Scientific (acquired by CoreWeave). For a full list of GPU buyers with estimated quarterly GPU count, refer to our industry-leading AI Accelerator & HBM Model.

On the surface, crypto miners are not yet competing at the Platinum or Gold tier for ClusterMAX. However, those who know their Neocloud history will notice that Crusoe started out in bitcoin mining, and that Fluidstack has already partnered with Terawulf and Cipher Mining, backed by Google, to deliver Gold-tier clusters for leading AI labs. We see two ways in which the crypto business model focused on the infrastructure foundation can play out for these companies:

Powered Shell / Colocation: Renting their gigawatt-scale, high-density datacenter space to other Neoclouds or frontier AI labs who prefer to deploy their own hardware, and can effectively lock them out of their own facility if needed.

Wholesale Bare-Metal: Procuring GPUs themselves and offering them as bare-metal clusters, leveraging their low energy costs to compete aggressively on price, putting their people to work, and using their access to capital to purchase tranches of GPUs ahead of demand, resulting in a pricing arbitrage opportunity.

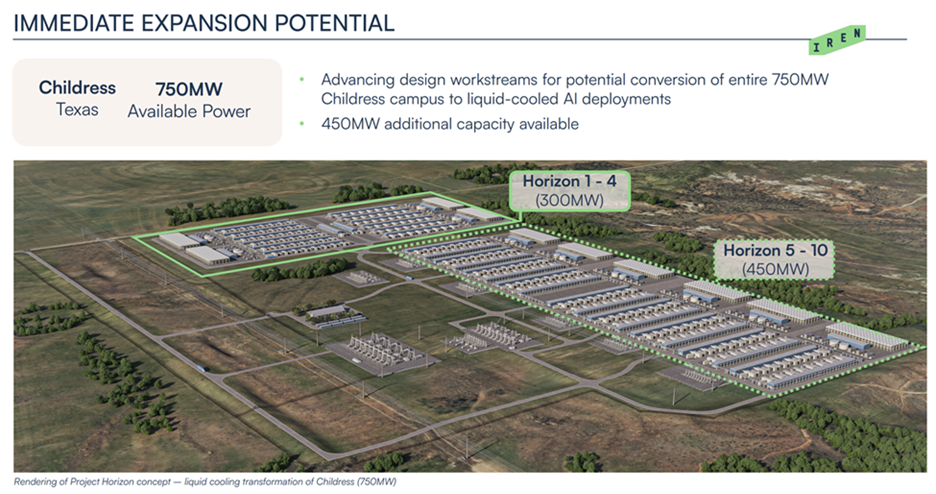

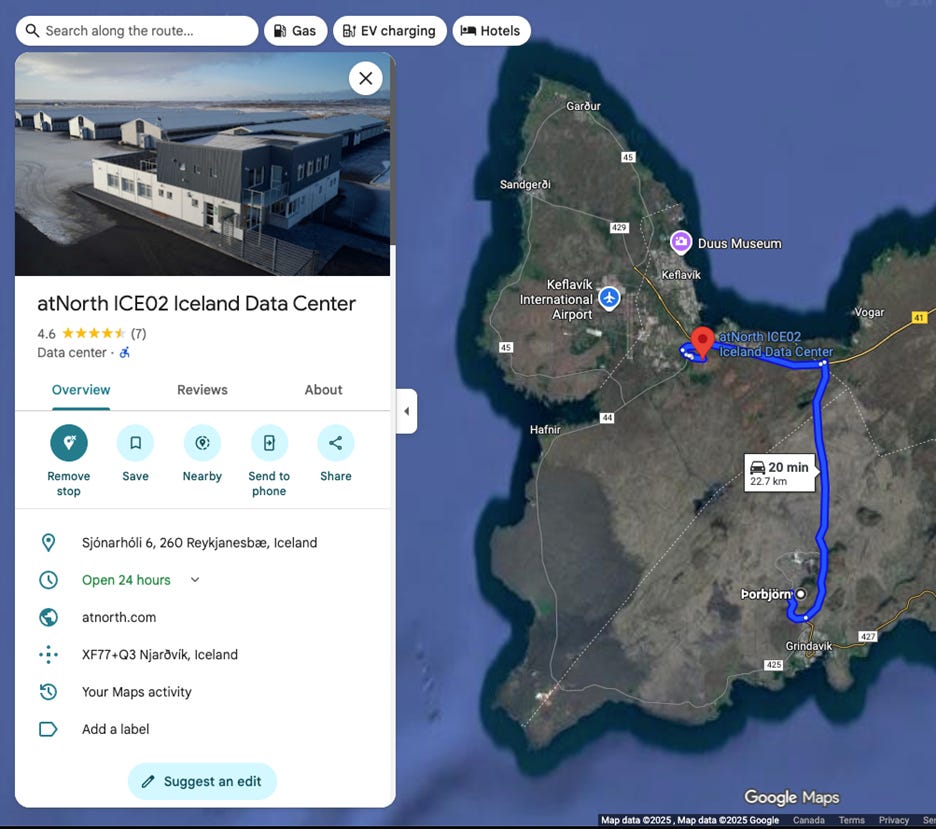

No firm better exemplifies the Bitcoin-to-AI pivot than IREN. It operates one of the world’s largest mines in Childress, TX, shown below.

IREN has successfully pivoted to Bare Metal Wholesale Cloud, scoring a 200MW deal with Microsoft to deploy GB300 GPUs in the same Childress, TX campus. IREN already unveiled a vision to retrofit the whole Bitcoin mine into a massive GPU cluster.

While they’ve been very successful at selling GPU Cloud capacity, their economic returns aren’t what has commonly been depicted by market participants. Our AI Cloud TCO Model (trusted by the world’s largest GPU buyers and financial leaders) estimates the precise economics of the IREN/Microsoft deal, allowing users to compare it with other large deals like Nscale, CoreWeave, Nebius, Oracle, and more. Our AI Tokenomics Model further expands on this work by tracking the flow of compute supply and the different sources of demand to the various token factories that ultimately make use off this compute. AI labs like OpenAI and Anthropic feature prominently as the ultimate users of much of this compute capacity!

Custom Storage Solutions

The rise of massive datasets has created a storage bottleneck. While high-performance POSIX filesystems (Weka, VAST, DDN) are still common, a new trend is emerging: S3-compatible object storage (like CoreWeave’s CAIOS or Nebius Enhanced Throughput object storage) sometimes paired with a massive, distributed local NVMe cache. CoreWeave’s LOTA (Local Object Transfer Accelerator) is the prime example, transparently caching data on the local drives of compute nodes.

In our analysis, we have seen that the initial buildout of compute for LLMs trained primarily on text and code considered storage as an afterthought, with storage representing less than 5% of cluster TCO. However, with data-heavy workloads such as image and video generation, weather prediction, drug discovery, real-time voice, robotics, and world models emerging, we have seen specific cluster designs with storage representing over 20% of the cluster TCO (i.e. over 100 PB for less than 2000 GPUs).

We expect to see more providers contend with the “thundering herd” problem of a shared filesystem that provides enormous aggregate bandwidth at 100PB+ scale.

InfiniBand Security

When it comes to securing a multi-tenant cloud, one of the most critical factors is the backend interconnect network. This is the data plane for all “East-West” traffic between GPU nodes, and for large clouds it generally involves a shared three-tier network architecture. Effectively, providers need to be able to create an isolated “VPC” for each tenant (and in many cases, for sub-tenants within each tenant) that extends to the private, backend interconnect.

While multi-tenant network security is well established through the use of layer-2 VLANs or layer-3 VXLANs via the use of datacenter switch and network management software (such as Arista CloudVision/EOS, Cisco NDFC/NX-OS, and Juniper Apstra/Junos), doing this with InfiniBand is relatively new. Nvidia recently released a blog on the topic which highlights that delivering a secure, multi-tenant InfiniBand network to customers involves more than just configuring PKeys.

Specifically, Partition Keys (PKeys) are the standard InfiniBand mechanism for network segmentation, functionally equivalent to VLANs in an Ethernet world. A PKey is a 16-bit value assigned to an InfiniBand port (on both the HCA and the switch). For a packet to be delivered, the PKey in its header must match a valid entry in the partition table of the receiving port, enforcing isolation at the fabric level. On many different providers, we were given a supposedly “isolated” 4-node or 2-node cluster, where we could see far more than just our 16 or 32 endpoints, due to a misconfiguration of PKeys, or a lack of any configuration at all.

However, PKeys alone are an insufficient security boundary for a robust multi-tenant environment. PKeys are managed by a central Subnet Manager (SM). A compromised tenant node could potentially send malicious Subnet Management Packets (SMPs) to query fabric topology or, in a poorly secured fabric, even attempt to reconfigure PKeys and break tenant isolation.

True, hardware-enforced isolation is described in detail by Nvidia in this blog: https://docs.nvidia.com/networking/display/nvidiamlnxgwusermanualfornvidiaskywayappliancev822302lts/configuring+partition+keys+(pkeys) which we encourage all providers deploying InfiniBand to review in detail.

The blog describes a multi-layered approach to InfiniBand security. The result is a one-time setup that includes:

M_Key: Management key that prevents rogue hosts from altering device configurations. If the key doesn’t match, the request is dropped.

P_Key: Partition key analogous to VLANs. These keys define which devices can “see” or talk to each other, creating strict traffic isolation across the fabric.”

SA_Key: For sensitive operations in the Subnet Administrator (adding or removing records, for example)

VS_Key: For vendor tools like ibdiagnet

C_Key and N2N_Key: Secure communication manager traffic and node-to-node messaging

AM_Key (only when SHARP is enabled): Specific to SHARP aggregation, ensuring data is only reduced by authorized switches

Providers who rely solely on basic PKey configuration without these additional layers are offering a demonstrably weaker security posture. We believe that the additional complexity associated with InfiniBand, due primarily to poor documentation from Nvidia, has led to a significant lack of strong skillsets in the industry amongst full time employees and contractors for hire.

Container Escapes, Embargo Programs, Pentesting and Auditing

Security in the Neocloud space has rapidly escalated from a checkbox item to a critical differentiator. The recent discovery of NVIDIAScape (CVE-2025-23266) by security firm Wiz served as a wake-up call for many in the industry. This vulnerability allows a user running inside a container to “escape” their container and escalate to full root access on the underlying host node, a huge security vulnerability in any multi-tenant environment where customer workloads run alongside each other, only isolated via containers on the same underlying host. Most notably, the vulnerability can be exploited with a simple three-line script, easily baked into a docker image in the software supply chain.

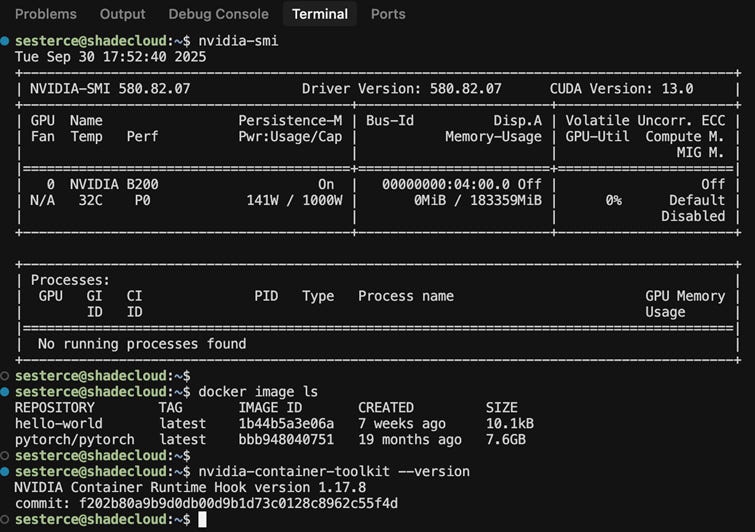

As a POC during our testing, we built this exploit into custom containers built on base images from vLLM, and nvidia pytorch. Running it is dead simple, just pull the container and run. Clearly, any provider running an out-of-date container toolkit version and allowing arbitrary containers from public registries to be executed puts their customers at risk of persistent backdoors, data exfiltration, ransomware, cryptojacking, and more. During our testing, we demonstrated this exploit was effective on over a dozen providers who were not up-to-date on their nvidia-container-toolkit (version 1.17.8 or later as described here: https://nvidia.custhelp.com/app/answers/detail/a_id/5659 ).

Most importantly, this is not just an Nvidia-specific problem. The AMD ecosystem has historically lacked a robust, secure container runtime, leaving many deployments insecure by default. The recent development of the ROCm container-toolkit is a direct response. However, these incidents underscore the critical importance of vendor security embargo programs, customer communication, and remediation.

Top-tier providers are included in the Nvidia embargo program, where they receive advanced notice of vulnerabilities like NVIDIAscape. This allows them to develop and deploy patches before the vulnerability and its exploit are made public, protecting their customers. Following our feedback and direct engagement from multiple providers, AMD has now also instituted a formal security embargo program, allowing their Neocloud partners to prepare effectively. We now consider the rocm container toolkit version provided (shown here: https://github.com/ROCm/container-toolkit ) when testing AMD clusters and standalone AI VMs.

This leads to the broader topics of pentesting and auditing. We cannot audit the internal security practices of every provider we track. This is why our criteria demands, as a bare minimum, third-party attestation like SOC 2 Type I or ISO 27001. For a provider handling proprietary models and sensitive corporate data, this is the absolute lowest bar for proving a formal security process exists. However, we encourage all Neoclouds to adopt a hyperscaler mentality: zero-trust, defense-in-depth strategies with continuous auditing and a proactive security posture. Relying on reactive patching or basic pentesting is not viable to serve the world’s leading frontier AI labs effectively.

What AMD and NVIDIA can do better for GPU Clouds and ML Community

Many Neoclouds are not familiar with InfiniBand due to the continued lack of education and documentation around InfiniBand. The situation was not gotten much better in terms of what Neoclouds have actually implemented since ClusterMAXv1 when we made the same recommendation to Nvidia 8 months ago. There has been some public documentation updates recently but not enough and it does not cover commands for how to verify it and we appreciate the Nvidians that are trying to help improve the situation. We recommend that they should rapidly continue provide a lot more good publicly accessible documentation and do lots of education with their NCPs about the different keys (SMKeys, MKeys, PKeys, VSKeys, CCKeys, AMKeys, etc) needed to secure an InfiniBand network properly. We recommend that Nvidia help their GPU clouds properly secure their InfiniBand network and complete an audit of all GPU clouds that use InfiniBand.

Furthermore, 8 months ago, we made the same recommendation to Nvidia that Nvidia should fix ease of use for SHARP for the GPU provider and recommend that Nvidia should enable it by default. Nvidia has not made any significant impact to SHARP usability and the extremely low usage of InfiniBand SHARP is has not changed.

In ClusterMAXv1, we recommended to AMD should support containers as first class in slurm and they have since enabled initial support though it has lots of bugs. We recommend that AMD further QA their container slurm support such that it is bug free and end users aren’t running into new bugs every week.

For providers that both AMD and Nvidia, the quality of their AMD cloud offering is much worse than their Nvidia cloud offering and missing critical features like health checks or working slurm support like on Crusoe and Oracle, etc. OCI’s slurm offering is much worse on AMD compared to Nvidia. Same thing for Crusoe and other hybrid clouds.

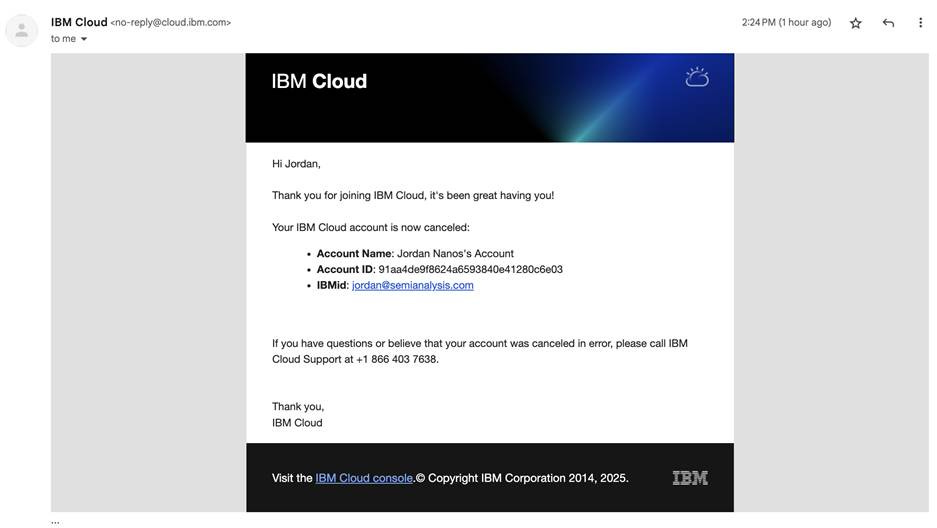

Some Final Comments on NVIDIA’s Cloud Confusion: From Ambition to the Grave. Don’t Believe Your Lyin’ Eyes!

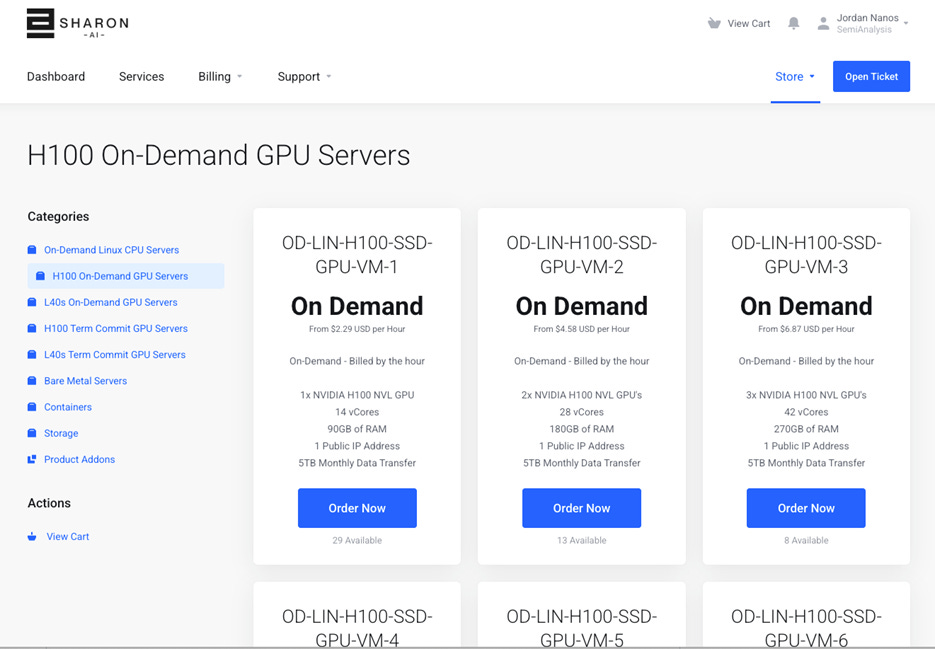

When NVIDIA acquired Lepton (a 20-person startup) for a rumored $300-$900M price tag, Neoclouds were scared. Lepton was a gold-tier ClusterMAX provider, with a unique capability to run across multi-cloud infrastructure, a strong resume (Jia Yangqing being a co-creator of PyTorch) and a knack for digging into the technical details. We were Lepton’s biggest fans!

At this point, it seems the Nvidia is intent on destroying the value of Lepton by having the team focus on features that users don’t care about, making the skin of the product dark-themed and Nvidia branded, and breaking things (like the interface for creating storage volumes, notebooks, and batch jobs, as well as the ability to trigger health checks on underlying nodes), instead of adding anything new. In other words, Lepton by Nvidia now reminds us of the old cuDNN download portal, and joins a long list of Nvidia software acquisitions that have been bundled in to die. To list them:

Nvidia acquired run:ai for orchestration and scheduling in May 2024 for $700M

Nvidia acquired deci for inference optimization in May 2024 for $300M

Nvidia acquired shoreline.io for automatic hardware remediation in July 2024 for $100M

Nvidia acquired brev.dev for cloud developer machines in July 2024 for a rumored $100M

NVIDIA acquired Lepton for clusters, orchestration, monitoring and health checks in April 2025 for a rumored $900M

That’s $2.1B in total, if the rumors are true! There have also been some big announcements:

In March 2023 at GTC, Nvidia launched DGX Cloud.

In August 2023 at Google Cloud Next, Nvidia announced DGX Cloud was coming to Google Cloud.

In November 2023 at AWS re:Invent, Nvidia and AWS announced DGX Cloud was coming to AWS, specifically with GH200 Grace Hopper Superchips.

In November 2023 at Microsoft Ignite, Nvidia announced the AI foundry service on Microsoft Azure, and that Nvidia DGX Cloud was now available on the Azure Marketplace.

In March 2024 at GTC, Nvidia announced DGX Cloud was generally available

In January 2025 at CES, Nvidia announced that Uber was using Nvidia’s DGX Cloud to build models for its robotaxi fleet.

In May at COMPUTEX 2025 in Taipei, Nvidia announced the expansion of the DGX Cloud platform under a new name called “DGX Cloud Lepton”. The announcement described Lepton as a compute marketplace connecting developers to tens of thousands of GPUs via global cloud-partners.

In June at GTC Paris, Nvidia announced the expansion of DGX Cloud Lepton to Europe.

Overall, these announcements seem as empty as Nvidia’s promises to open source the whole of run:ai. We have yet to see anything published on GitHub at that level. Same goes for Lepton (outside of gpud). We await this with bated breath.

Following some of our commentary about the state of the Lepton acquisition, we heard directly from Nvidia that they are absolutely offended to be considered a Neocloud. Apparently, all of these acquisitions are not being used for profits or revenue. Instead, the former DGX Cloud is being taken behind the barn, while the new DGX Cloud Lepton is being promoted. This is good for Neoclouds - it seems that after $2B spent, Jensen has recognized that he doesn’t want to compete with his biggest customers. So DGX Cloud, don’t do that!

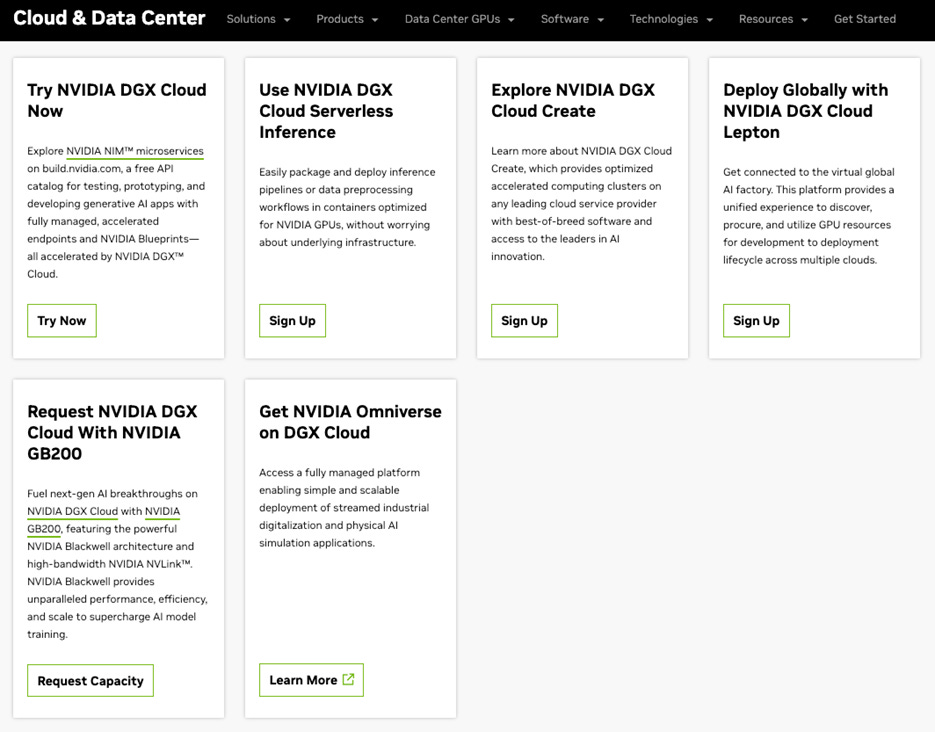

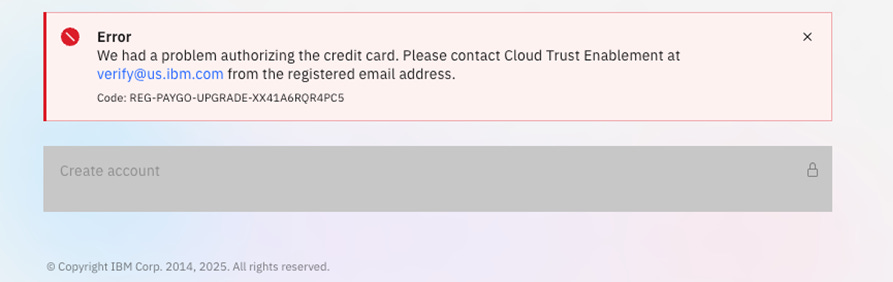

When we tried to get access to DGX Cloud for testing, we needed to have an intro call and a strategy call with Nvidia team members, scheduled on separate weeks. Without this information we were just left with the DGX Cloud website.

The website leads users to the following:

Try Nvidia DGX Cloud Now - NIMs page on NGC, which is an API for model endpoints

Use Nvidia DGX Cloud Serverless Inference - sign-up page for a 30-day preview of serverless inference powered by Nvidia Cloud Functions, which seems to be an API for model endpoints

Explore Nvidia DGX Cloud Create - a sign-up page to “talk to us”

Deploy Globally with Nvidia DGX Cloud Lepton - a sign-up page to apply for access to DGX Lepton. But if you’re a GPU cloud provider (maybe trying to provide your compute to the marketplace?) you can email the NVIDIA Cloud Partner address: dgxc_lepton_ncp_ea@nvidia.com

Request Nvidia DGX Cloud With NVIDIA GB200 - a circular link back to the DGX Cloud homepage

Get Nvidia Omniverse on DGX Cloud - a link to a DGX Cloud tile on the Azure Marketplace, which requires a sign up link, and probably sends your information to someone in Nvidia sales

So, it seems like most of DGX Cloud is not a Neocloud, rather it is a set of future meetings with Nvidia sales teams with unclear agendas.

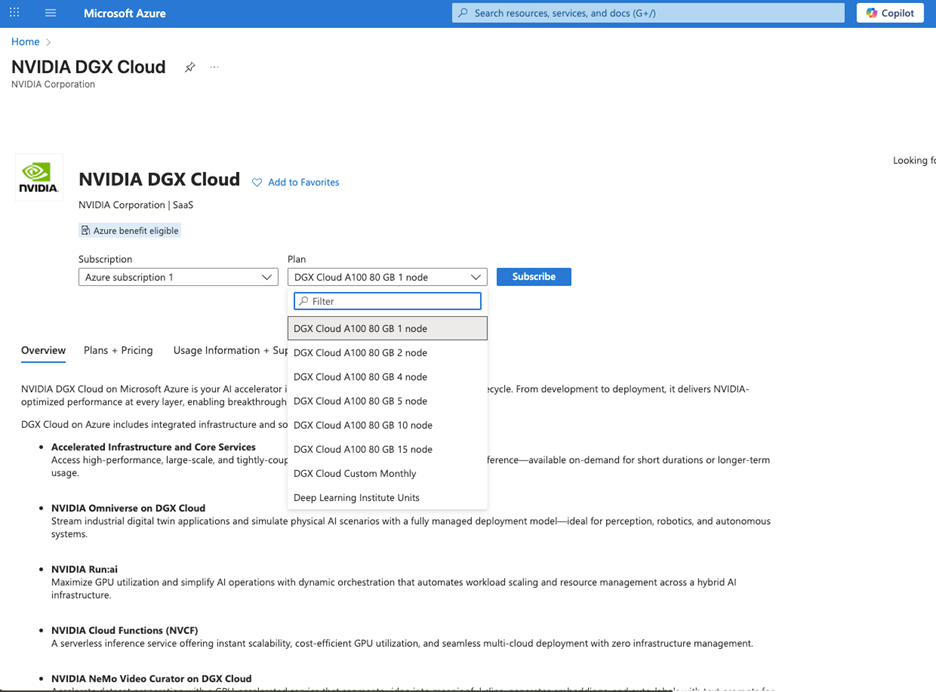

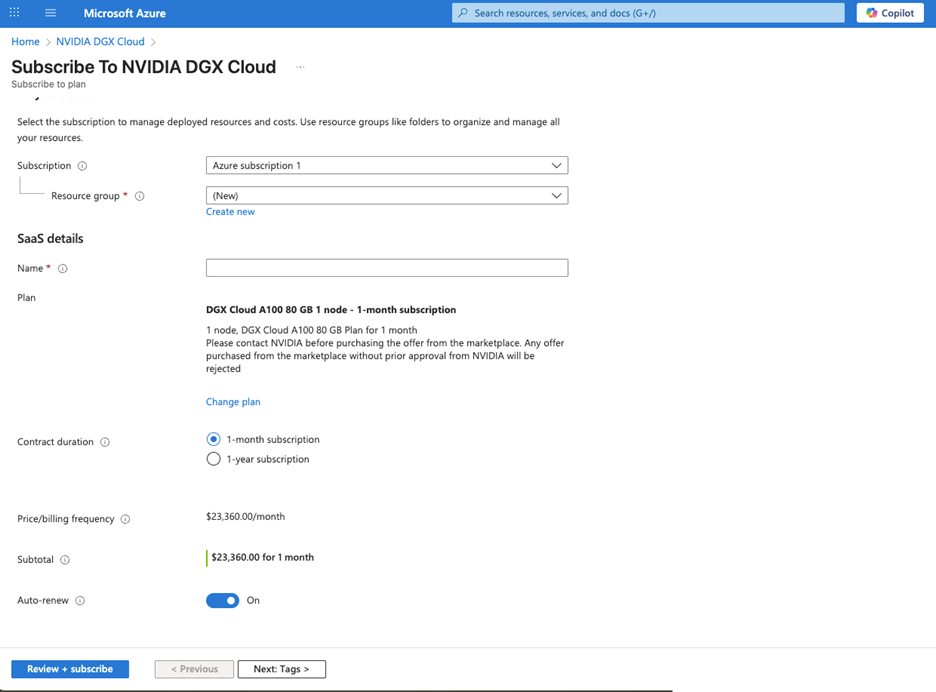

The exception is the last link in the portal, which takes you to Azure, where you can double-MFA your way into an opportunity to purchase a DGX Cloud A100 80GB 1 node – 1-month subscription for the low low price of $23,360.00 (with an option to auto-renew).

Note that this works out to $4.05 per A100-hr, the worst price we have seen anywhere, ever.

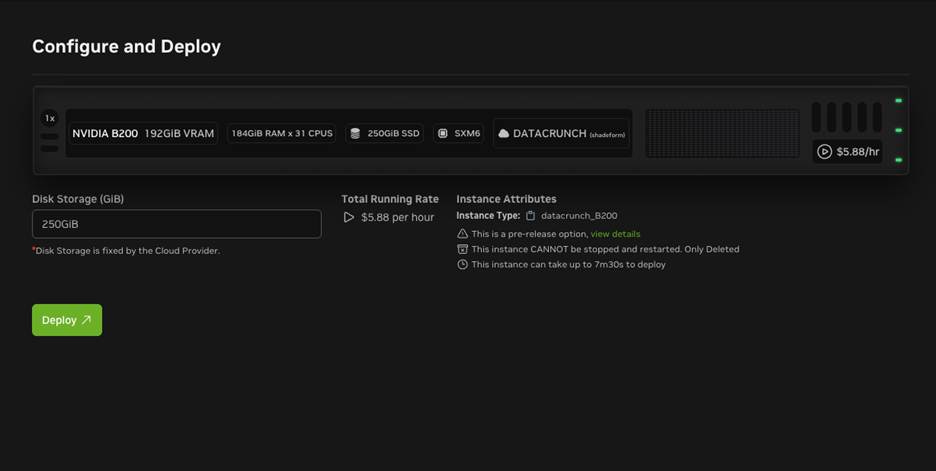

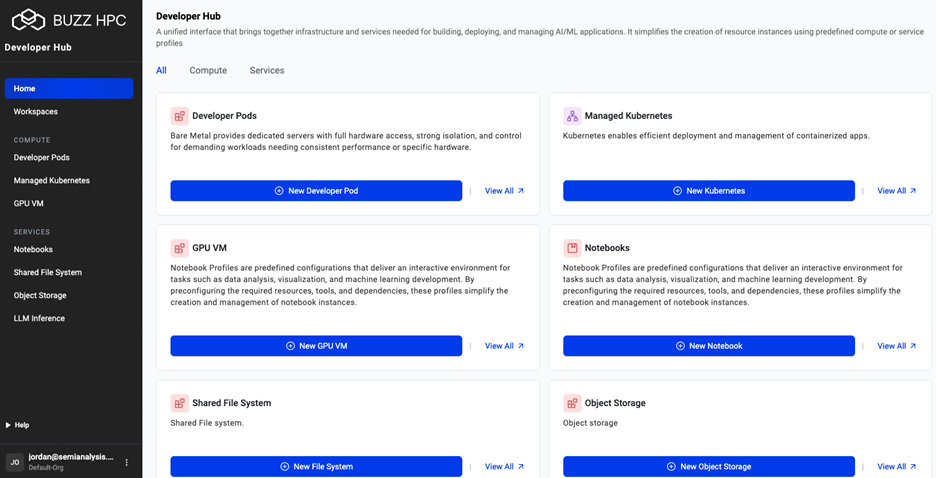

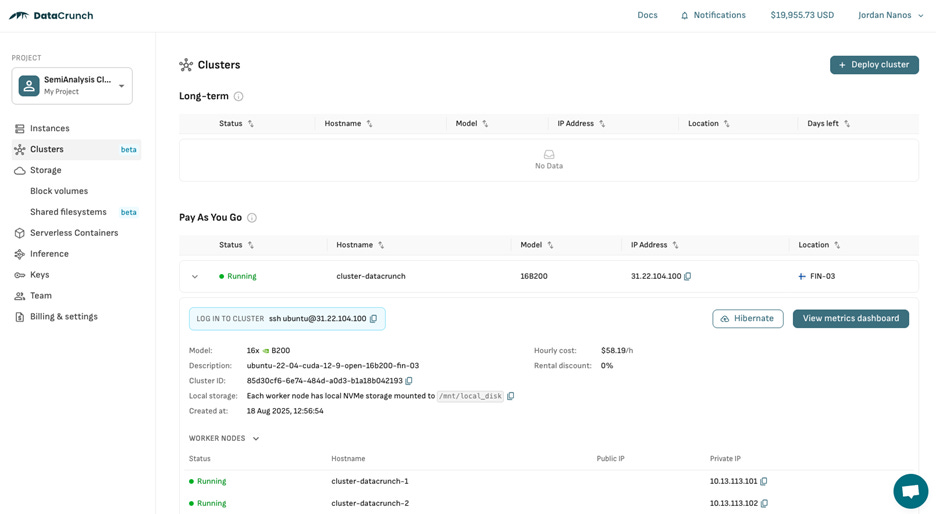

With that said, Nvidia has also acquired Brev, a useful frontend for Neoclouds such as DataCrunch (now called Verda), which seems to actually come via Shadeform’s orchestration layer. However, the console is nice and simple, and is open to the public: https://brev.nvidia.com/environment/new/public

We very much look forward to testing out Lepton, DGX Cloud, and Brev in the future. Nvidia has hired some incredibly hard working and talented engineers, along with a ton of useful IP that their community could benefit from. We hope to see more in the future.

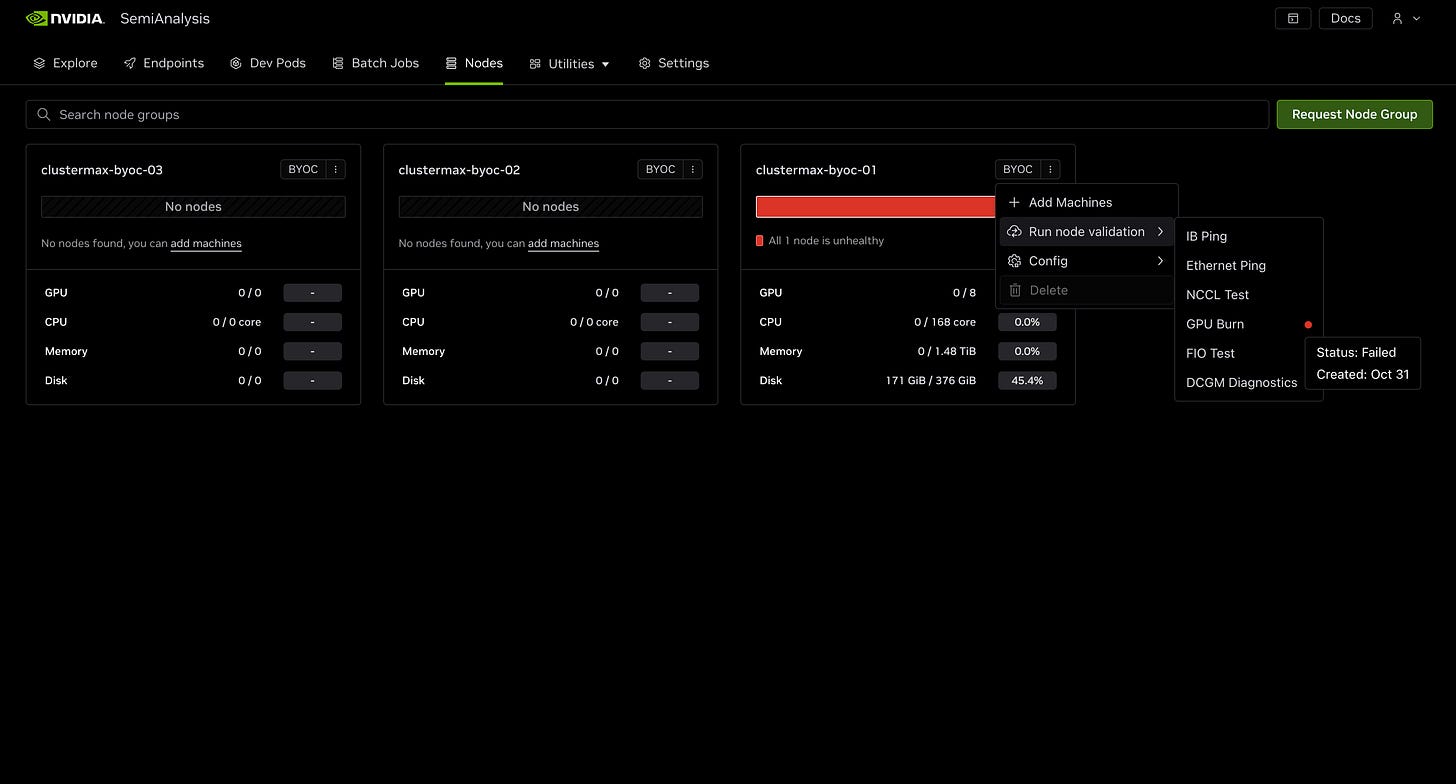

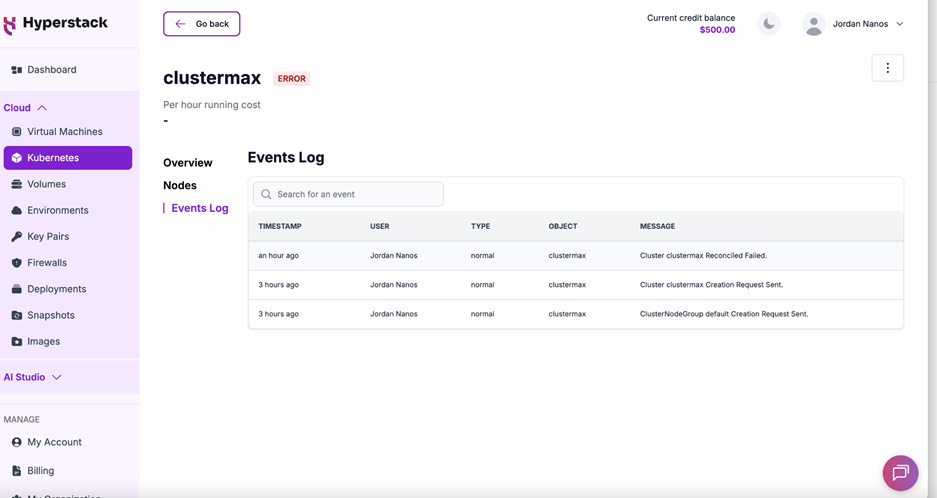

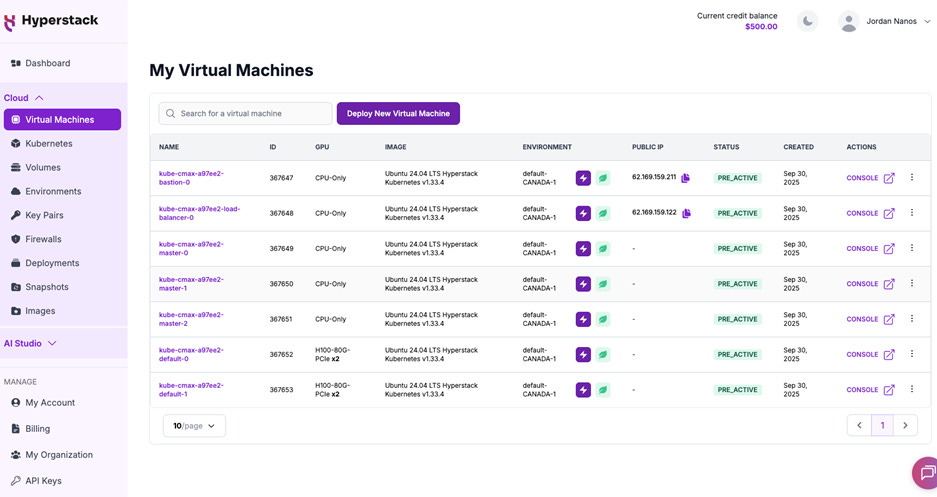

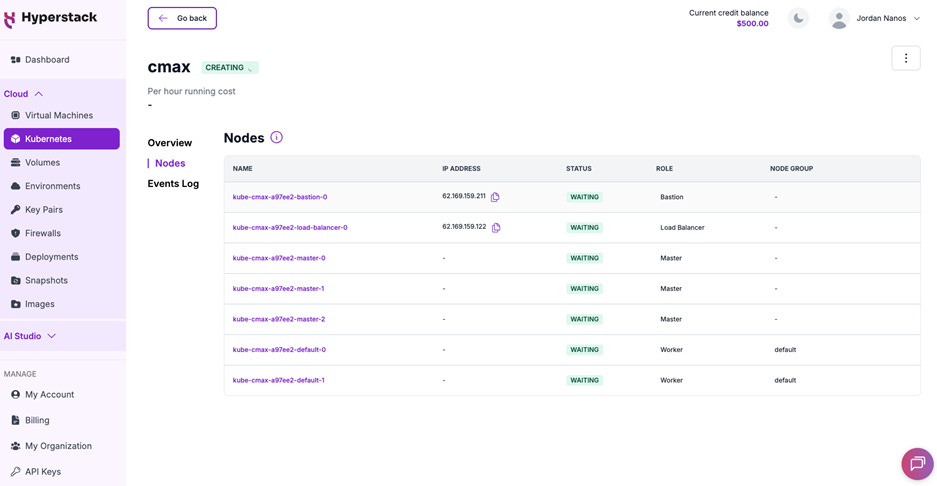

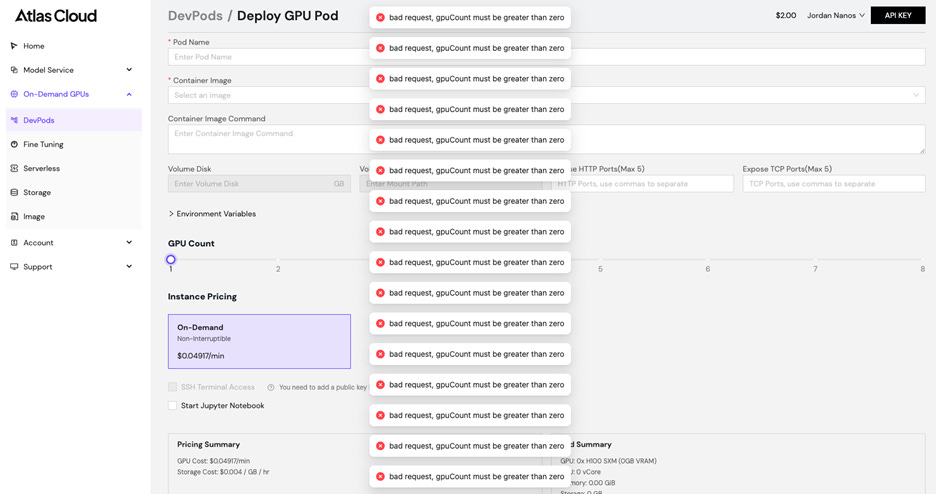

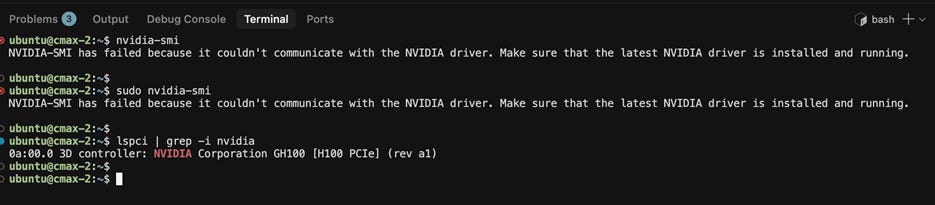

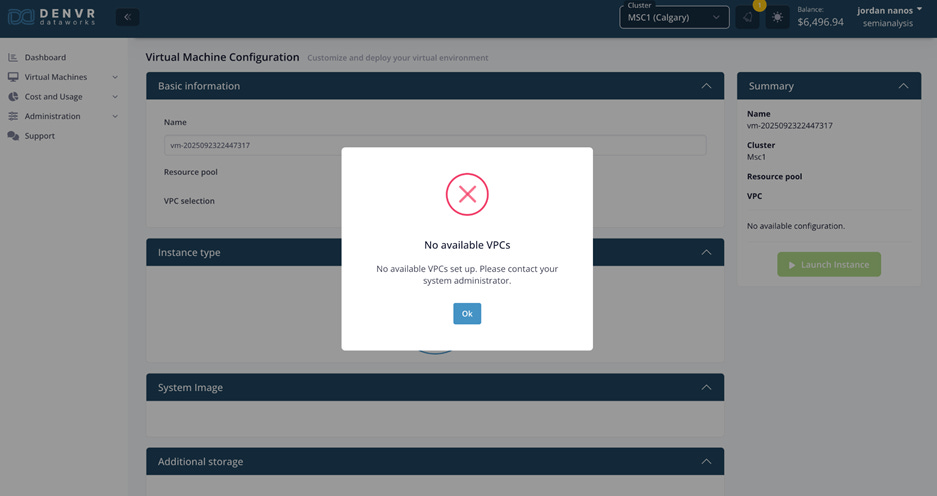

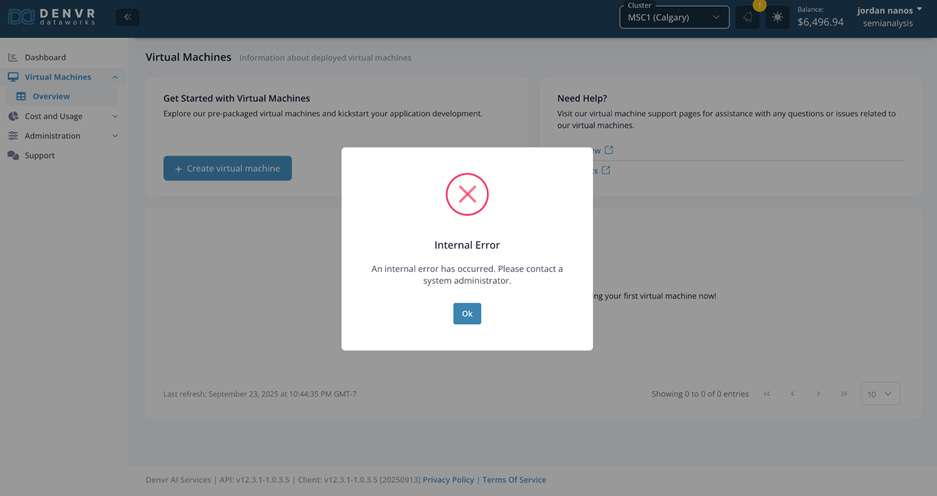

Eventually, we were also provided the opportunity to test Lepton.

During our testing (which occurred over a month after we closed our testing with all Neoclouds due to delays due to meetings), we were not able to successfully create a dev pod, batch job, or run health checks on a node. Though DGX Cloud Lepton is described as a “compute marketplace connecting developers to tens of thousands of GPUs via global cloud-partners”, we were forced to bring our own GPU machines into the console. These machines are always managed and paid for outside of DGX Cloud Lepton. There seems to be no available clouds pre-registered on the platform, only “BYOC” (Bring Your Own Cloud) functionality.

Overall, our experience with Nvidia as a Neocloud or Neocloud-adjacent provider has been very strange. We encourage Nvidia to open source all of the software from lepton, run:ai, deci, shoreline, and brev so that their community of 200+ Neocloud providers can benefit. We hope that Lepton in particular can follow the path of TRT-LLM, NCCL, etc instead of the path of RIVA, Maxine, Isaac and Drive.

Quotes

The following quotes represent insights from industry experts, researchers, and practitioners who work with GPU cloud infrastructure daily. These perspectives help illuminate the real-world challenges and considerations that inform the ClusterMAX™ rating system.

Industry Leaders

“ClusterMAX has become a valuable tool for making data-driven decisions about where and how we deploy compute. As a leading system for benchmarking GPU clouds across performance, reliability, and support, it provides critical insights to our team as we scale OpenAI’s infrastructure through projects like Stargate — helping ensure the benefits of AI reach everyone.”

— Peter Hoeschele, General Manager of OpenAI Stargate

“Meta’s Super Intelligence efforts relies on both internal on prem clusters and cloud providers to build the largest AI fleet for frontier model training & inference efforts. ClusterMAX is delivering a comprehensive rating system that the industry can rely on to evaluate GPU cloud providers.”

— Santosh Janardhan, Head of Global Infrastructure at Meta

“AI is transforming every industry, and the infrastructure behind it needs to be dependable, scalable, and transparent. SemiAnalysis’s industry standard rating system, ClusterMAX, shines a light on what truly matters—real-world performance, operational excellence, and customer support—helping organizations navigate a complex GPU cloud landscape. It’s a valuable resource for anyone renting from GPU clouds.”

— Michael Dell, Chairman and CEO, Dell Technologies

“In the rapidly evolving AI landscape, choosing the right GPU cloud provider is essential for maximizing efficiency and minimizing risks. Supermicro commends SemiAnalysis’s ClusterMAX rating system, which evaluates providers on security, reliability, and performance to deliver unparalleled value in GPU infrastructure for enterprises and startups alike.”

— Charles Liang, President and CEO, Supermicro

“ClusterMAX has become the go-to benchmark for GPU clouds. By focusing on what users care about like managed Kubernetes, SLURM integration, operational reliability, and enterprise class support, SemiAnalysis helps GPU consumers cut through the GPU cloud marketing noise and select the best platform to run their workloads.”

— Hunter Almgren, Distinguished Technologist, Hewlett Packard Enterprise

“Snowflake’s mission is to empower every enterprise to achieve its full potential with data and AI with the performance and scale they need. As we expand our use of GPUs to accelerate AI workloads, objective and transparent benchmarks like ClusterMAX are invaluable. The system provides the clarity required to evaluate GPU cloud providers across performance, reliability, and cost, helping ensure we make the right infrastructure decisions to support our customers at scale.”

— Dwarak Rajagopal, Vice President of AI Engineering and Research, Snowflake

Financial Leaders and Venture Capitalists

“ClusterMAX is the industry standard for evaluating GPU cloud providers—providing desperately needed transparency to empower all GPU end users, large and small, with a clear understanding of the latest GPU cloud landscape.”

— Gavin Baker, Managing Partner & CIO, Atreides Management

“SemiAnalysis has quickly emerged as a trusted source in our industry through hustle and hard work. ClusterMAX is a prime example, delivering a comprehensive rating system that the industry can rely on to evaluate GPU cloud providers.”

— Brad Gerstner, Founder, Chairman, and CEO of Venture Capital Fund, Altimeter Capital

“By rigorously benchmarking providers across critical dimensions that customers care about like performance, reliability, orchestration, and security, ClusterMAX empowers us to be informed about the state of where AI compute is going.”

— Ricard Boada, Senior Vice President of Global AI Infrastructure, Brookfield Asset Management

“For AI startups, especially in early stages, GPUs are often the single biggest line item. Picking the right cloud provider can literally determine whether you make it or not. SemiAnalysis’s ClusterMAX cuts through the noise by benchmarking cost, performance, and reliability - this gives teams the data they need to make the smartest call when choosing GPU clouds.”

— Sonith Sunku, Venture Capitalist, Z Fellows

AI Companies and Startups

“At Periodic Labs, we’re building an AI Scientist to accelerate scientific discovery. To conduct novel research, we rely on GPU clouds that are stable and performant at scale. SemiAnalysis’ ClusterMAX sets the GPU cloud industry standard and has been extremely helpful in our evaluations of cloud providers. Their evaluations on reliability, performance, networking, and managed Kubernetes orchestration align closely with the demands of our workloads.”

— William Fedus, CEO @ Periodic Labs, Ex-VP of Research @ OpenAI

“At Cursor, we’re advancing the frontier of AI-native software development with tools that help engineers build faster and smarter. To support our research, we depend on GPU clouds that are reliable, performant, and easy to scale. We’re glad SemiAnalysis introduced ClusterMAX as the industry standard GPU cloud ranking system. Its evaluation across reliability, quick node swaps, & performance, and reflects what matters for our workloads.”

— Federico Cassano, Research Scientist @ Cursor

“Jua is a frontier AI lab building a foundational world model of Earth - simulating weather, hurricanes, wildfires, and more. We train on managed Slurm and run inference on managed Kubernetes, and ClusterMAX aligns well with our GPU requirements.”

— Marvin Gabler, CEO of Jua

“AdaptiveML is unified platform for enterprise inference, pretraining, and reinforcement learning. We use FluidStack & deploy on GPUs across all 3 major hyperscalers. Our stack runs on Kubernetes. ClusterMAX’s 9 different categories of criterias aligns well with our requirements”

— Daniel Hesslow, CoFounder & Research Scientist, AdaptiveML

“Extropic is pioneering thermodynamic computing — building AI systems grounded in the physics of information itself. Internally, we use SLURM clusters to manage our experiments. We are glad to see SemiAnalyis’s industry standard ClusterMAX system’s criteria includes evaluating SLURM offering across providers”

— Guillaume Verdon, Founder of Extropic, Ex-Quantum ML Lead at Alphabet

“At Mako, we’re liberating developers from the bottlenecks of GPU performance engineering. Our AI coding agent automatically generates, optimizes, and deploys GPU kernels for NVIDIA, AMD, and custom accelerators. ClusterMAX’s industry-standard GPU cloud rating system, with its multi-criteria evaluation aligned to our hardware-agnostic approach, empowers us to deliver seamless, scalable inference and RL post-training for the AI systems of tomorrow.”

— Waleed Atallah, CEO, Mako

“As an emerging AI lab, Nous Research has developed popular models such as Hermes 4. For us, ClusterMAX is the industry standard for evaluating GPU clouds across the critical dimensions we care about: price, performance, and network stability.”

— Emozilla, CEO of Nous Research

“ClusterMAX has been useful for us even if Dylan Patel is annoying”

— Matthew Leavitt, DatologyAI

“At Cartesia, we’re developing the next generation of interactive, multimodal intelligence, which requires infrastructure that is efficient, low-latency, and resilient. Systems like ClusterMAX provide the clarity and technical detail to help organizations better understand how to scale their compute infrastructure.”

— Arjun Desai, Cofounder at Cartesia

Infrastructure and Platform Providers

“At Modal, we’re the serverless platform for AI and ML engineers. High performance and reliable GPU infrastructure is a key demand of our customers. We are glad SemiAnalysis created their industry standard GPU Cloud ranking system, ClusterMAX. It has guided development of our internal GPU reliability system, gpu-health, and provides insights when selecting our GPU Cloud vendor evaluation.”

— Jonathon Belotti, Engineering @ Modal

“Baseten is a high-performance inference platform operating at the frontier of model performance, serving some of the leading AI companies in the market and early adopting enterprises. Our Inference Stack delivers blazing-fast cold starts, autoscaling, and enterprise-grade reliability. As a provider that operates across over 10 clouds, we are happy SemiAnalysis created their industry standard GPU Cloud ranking system, ClusterMAX. This system evaluates across 9 critical criteria that we value most when it comes to operating at the frontier of AI model performance.”

— Ed Shrager, Head of Special Projects, Baseten

“GPU clouds are propelling the AI revolution by democratizing access to enterprise-grade compute. Unlike traditional clouds, GPU clouds require specialized hardware-software optimization that can make or break AI performance. The SemiAnalysis ClusterMAX GPU cloud ranking system provides a holistic evaluation of the full technology stack combined with world-class analysis that provides WEKA with the vital insights needed to architect powerful AI infrastructure solutions for our GPU cloud partners and their customers.”

— Val Bercovici, Chief AI Officer, WEKA

“ClusterMAX has become the industry standard for GPU cloud rankings, and the results align perfectly with our real-world experience at fal.”

— Batuhan Taskaya, Head of Engineering, Fal.ai

ML Community Leaders and Content Creators

“SemiAnalysis created a in depth study & ranking for the GPU cloud industry covering areas that are important to end users like me such as out of box slurm/kubernetes, NCCL performance and high reliability”

— Stas Bekman, Developer & Author of Machine Learning Engineering Open Book (10k+ github stars)